Blog

Install VirtualBox over Fedora with SecureBoot enabled

24 March 2025

Not too long ago, I upgraded my computer and got a new Lenovo ThinkPad X1 Carbon (a great machine so far!).

Since I was accustomed to working on a gaming rig (Ryzen 7, 64GB RAM, 4TB) that I had set up about five years ago, I completely missed the Secure Boot and TPM trends—these weren’t relevant for my fixed workstation.

However, my goal with this new laptop is to work with both Linux and Windows on the go, making encryption mandatory. As a non-expert Windows user, I enabled encryption via BitLocker on Windows 11, which worked perfectly... until it didn’t.

The Issue with Secure Boot and VirtualBox/VMware

This week, I discovered that BitLocker leverages TPM (the encryption chip) and Secure Boot if they’re enabled during encryption. While this is beneficial for Windows users, it created an unexpected problem for me: virtualization on Linux.

Let me explain. Secure Boot is:

...an enhancement of the security of the pre-boot process of a UEFI system. When enabled, the UEFI firmware verifies the signature of every component used in the boot process. This results in boot files that are easily readable but tamper-evident.

This means components like the kernel, kernel modules, and firmware must be signed with a recognized signature, which must be installed on the computer.

This creates a tricky situation for Linux because virtualization software like VMware or VirtualBox typically compiles kernel modules on the user’s machine. These modules are unsigned by default, leading to errors when loading them:

# modprobe vboxdrv

modprobe: ERROR: could not insert 'vboxdrv': Key was rejected by service

A good way to diagnose this is to check dmesg for messages like:

[47921.605346] Loading of unsigned module is rejected

[47921.664572] Loading of unsigned module is rejected

[47932.035014] Loading of unsigned module is rejected

[47932.056838] Loading of unsigned module is rejected

[47947.224484] Loading of unsigned module is rejected

[47947.257641] Loading of unsigned module is rejected

[48291.102147] Loading of unsigned module is rejected

How to Fix the Issue with VirtualBox Using RPMFusion and Akmods

Oracle is aware of this issue, but their documentation is lacking. To quote:

If you are running on a system using UEFI (Unified Extensible Firmware Interface) Secure Boot, you may need to sign the following kernel modules before you can load them:

vboxdrv,vboxnetadp,vboxnetflt,vboxpci. See your system documentation for details of the kernel module signing process.

Fedora’s documentation is sparse, so I spent a lot of time researching manual kernel module signing (Fedora docs) and following user guides until I discovered that VirtualBox is available in RPMFusion with akmods support.

Some definitions:

- RPM Fusion is a community repository for Enterprise Linux (Fedora, RHEL, etc.) that provides packages not included in official distributions.

- Akmds automates the process of building and signing kernel modules.

Here’s the step-by-step solution:

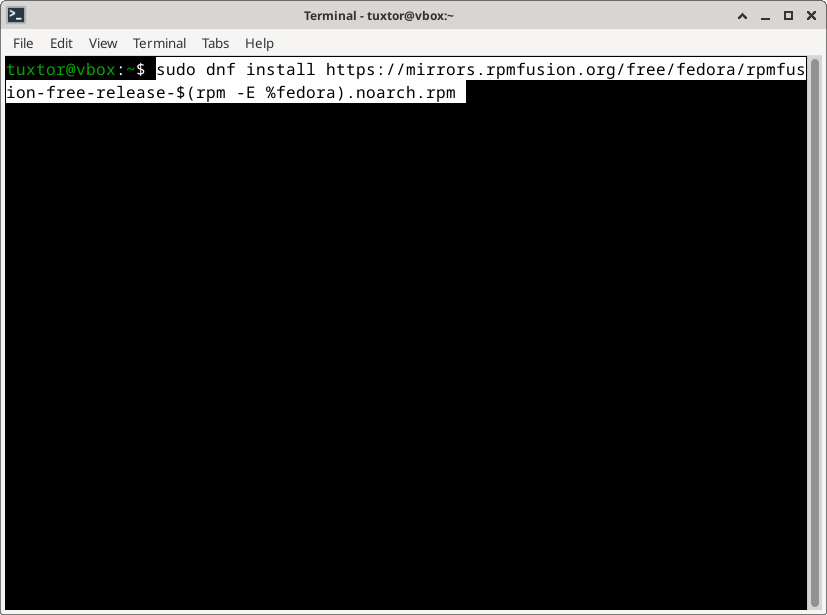

1. Enable RPM Fusion (Free Repo)

Install the RPM Fusion free repository:

sudo dnf install https://mirrors.rpmfusion.org/free/fedora/rpmfusion-free-release-$(rpm -E %fedora).noarch.rpm

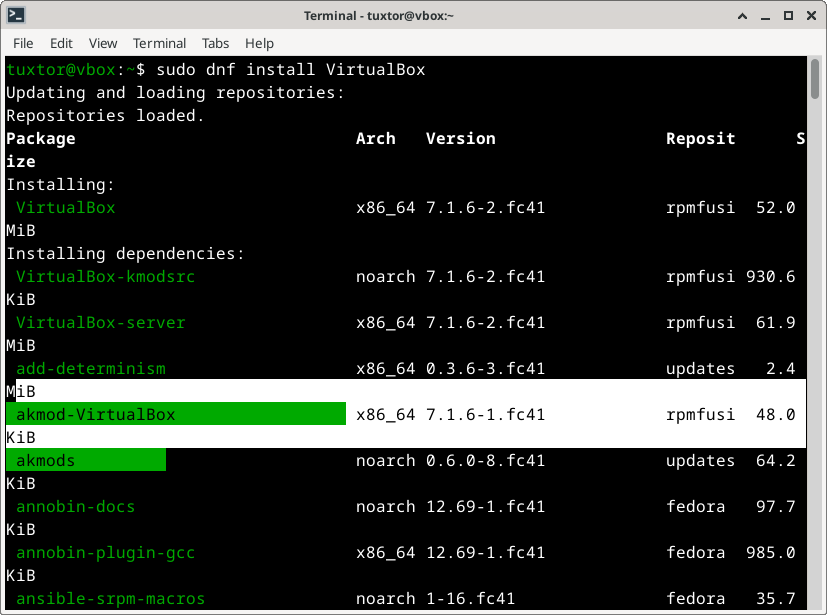

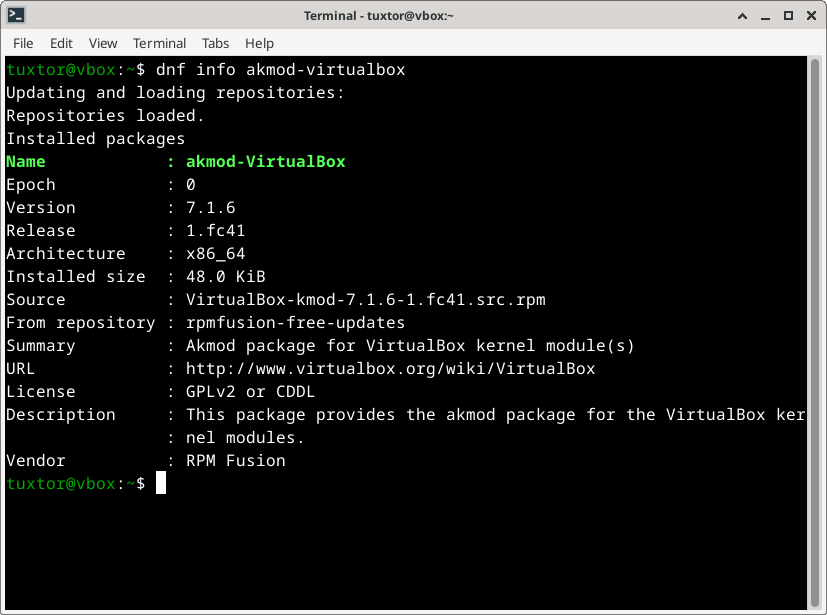

2. Install VirtualBox (with Akmods)

Ensure VirtualBox is installed from RPMFusion (akmods will be a dependency):

sudo dnf install virtualbox

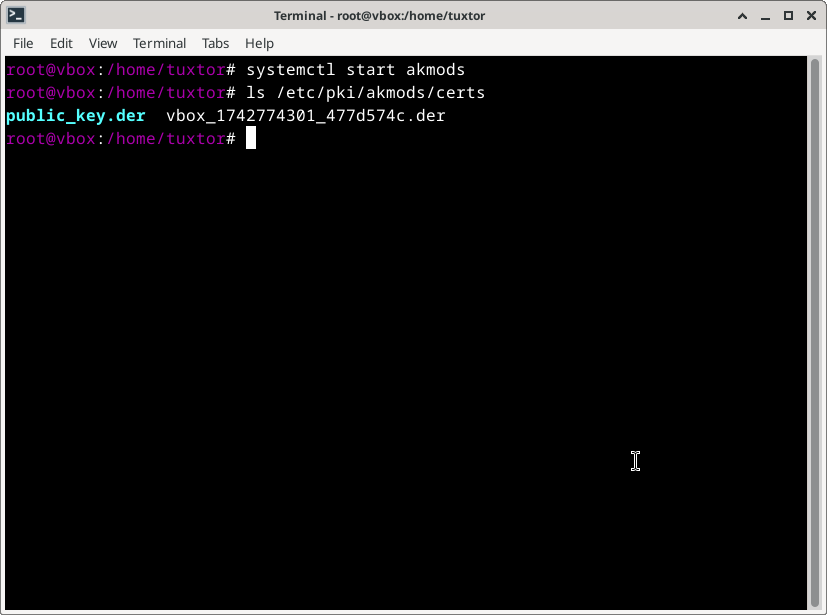

3. Start Akmods to Generate Keys

Akmods will automatically sign the modules with a key stored in /etc/pki/akmods/certs:

sudo systemctl start akmods.service

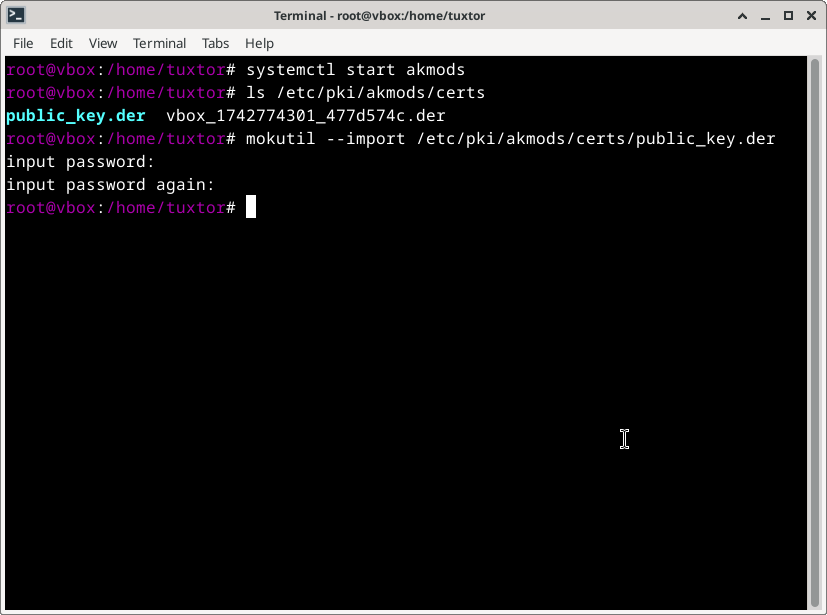

4. Enroll the Key with Mokutil

Use mokutil to register the key in Secure Boot:

sudo mokutil --import /etc/pki/akmods/certs/public_key.der

You’ll be prompted for a case-sensitive password—remember it for the next step.

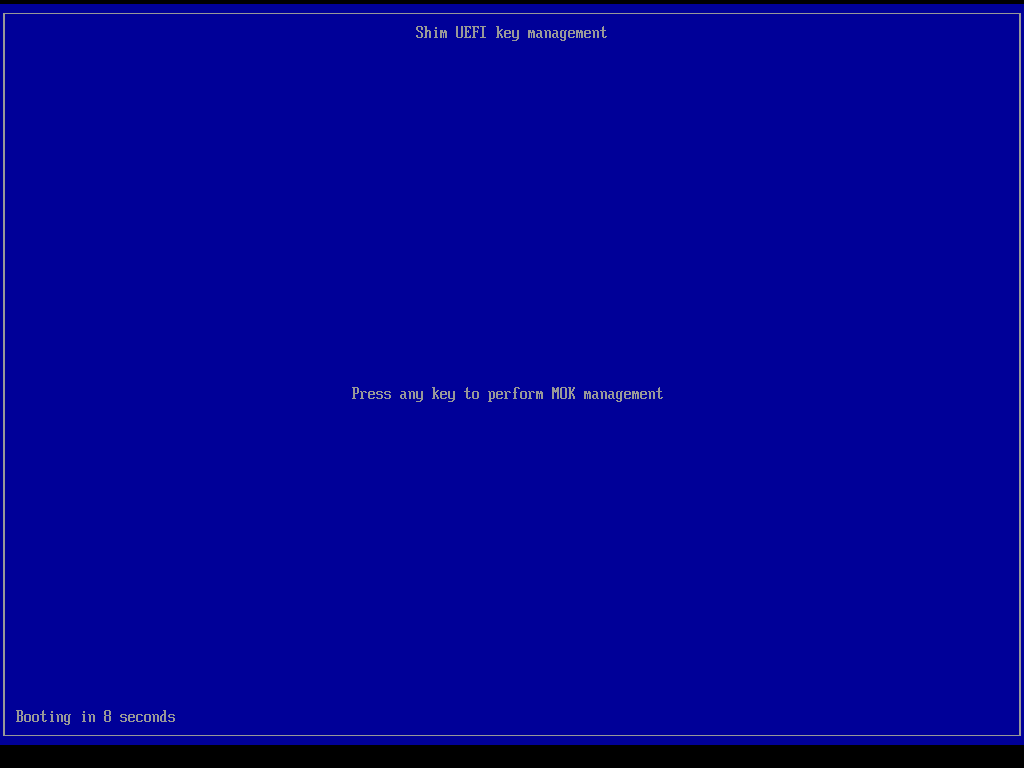

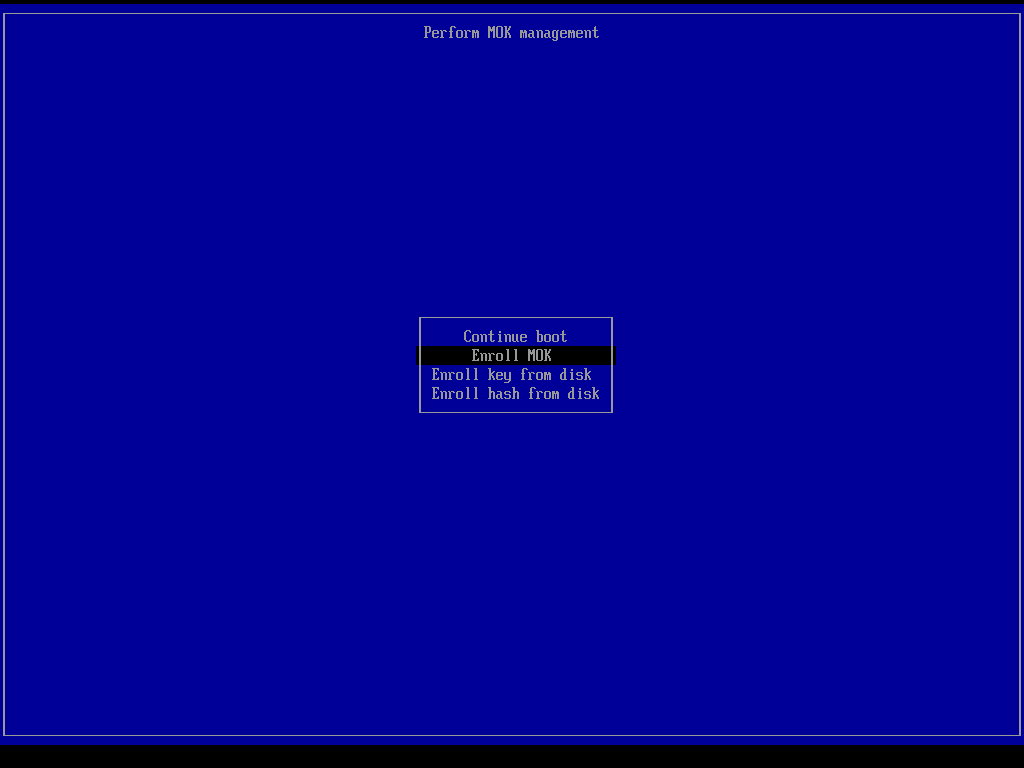

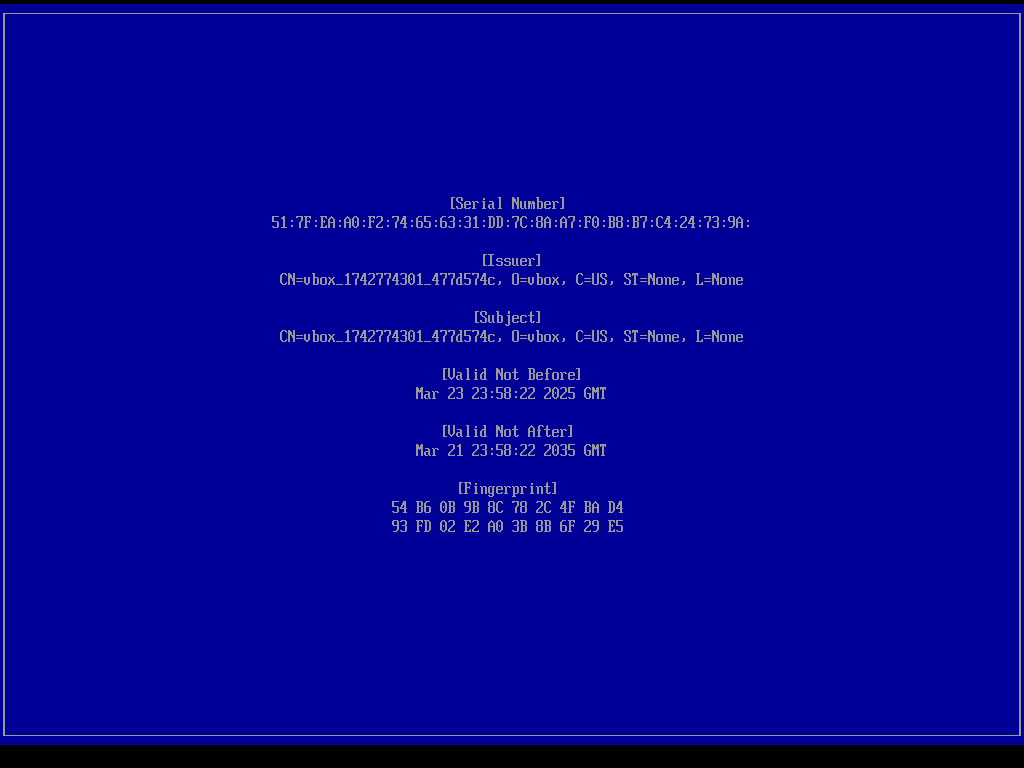

5. Reboot and Enroll the Key

After rebooting, the UEFI firmware will prompt you to enroll the new key.

If needed, you could also check for the key contents in that screen.

6. Start VirtualBox Kernel Modules

The modules are now signed and can be loaded. Enable these at boot:

sudo systemctl start vboxdrv

sudo systemctl enable vboxdrv

Verify they’re loaded:

lsmod | grep vbox

Output:

vboxnetadp 32768 0

vboxnetflt 40960 0

vboxdrv 708608 2 vboxnetadp,vboxnetflt

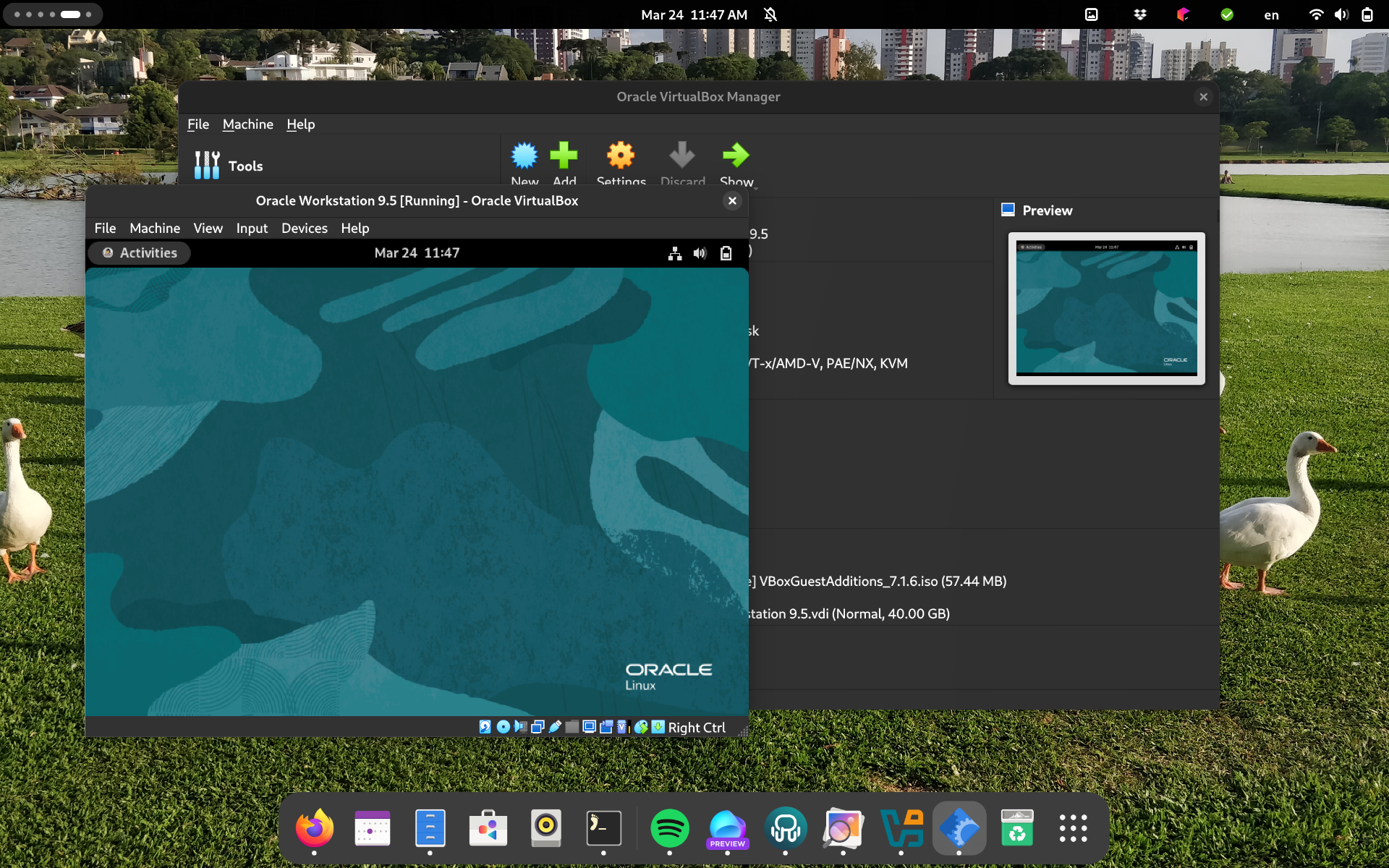

Now, VirtualBox runs on Fedora with Secure Boot and TPM enabled, without disabling BitLocker on Windows.

A practical guide to implement OpenTelemetry in Spring Boot

01 December 2024

In this tutorial I want to consolidate some practical ideas regarding OpenTelemetry and how to use it with Spring Boot.

This tutorial is composed by four sections

- OpenTelemetry practical concepts

- Setting up an observability stack with OpenTelemetry Collector, Grafana, Loki, Tempo and Podman

- Instrumenting Spring Boot applications for OpenTelemetry

- Testing and E2E sample

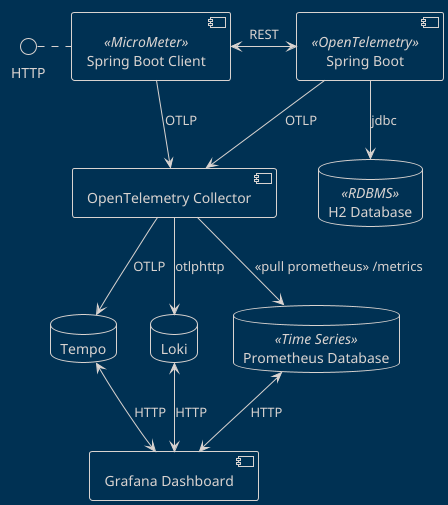

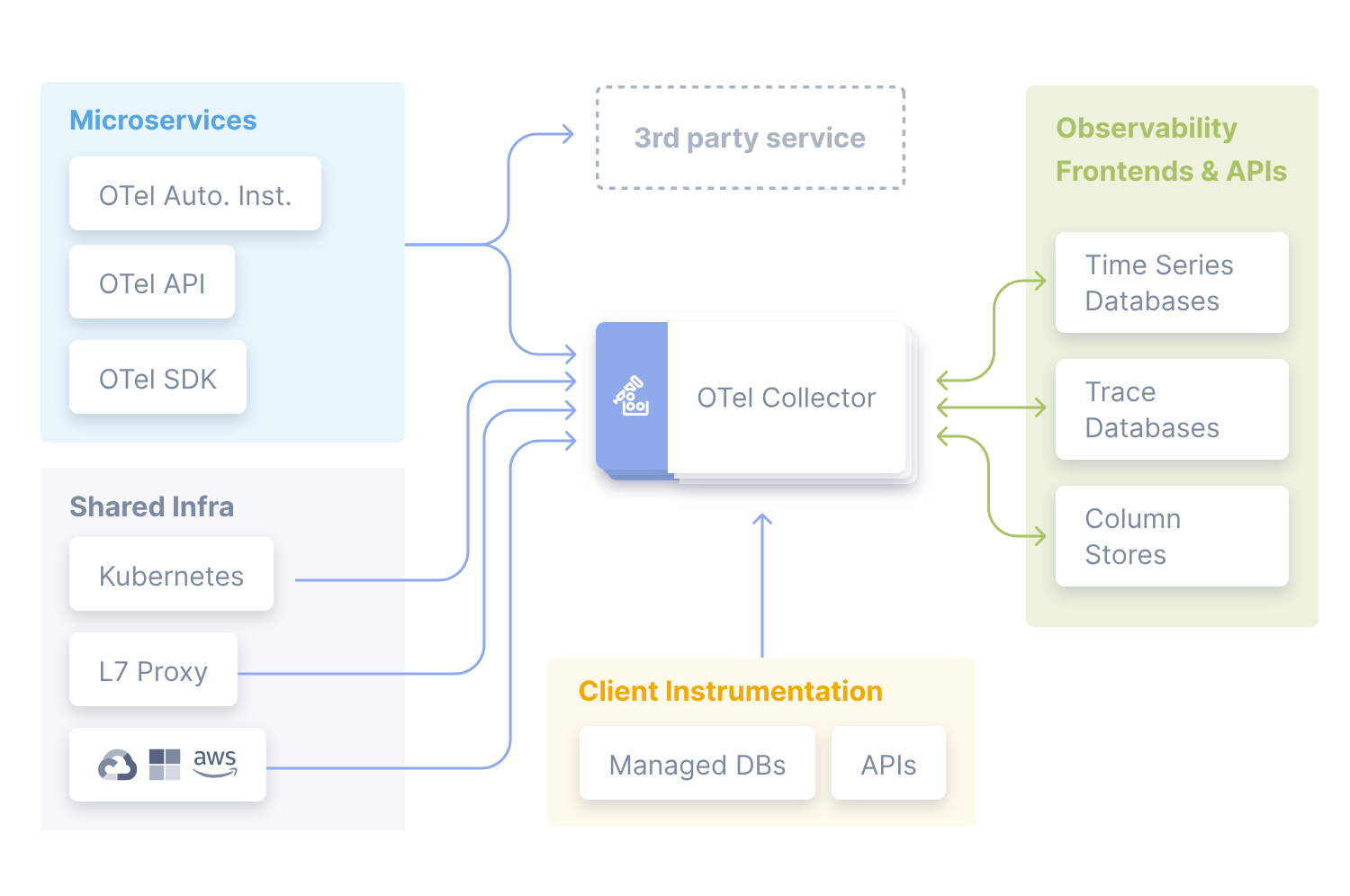

By the end of the tutorial, you should be able to implement the following architecture:

OpenTelemetry practical concepts

As the official documentation states, OpenTelemetry is

- An Observability framework and toolkit designed to create and manage telemetry data such as traces, metrics, and logs.

- Vendor and tool-agnostic, meaning that it can be used with a broad variety of Observability backends.

- Focused on the generation, collection, management, and export of telemetry. A major goal of OpenTelemetry is that you can easily instrument your applications or systems, no matter their language, infrastructure, or runtime environment.

Monitoring, observability and METL

To keep things short, monitoring is the process of collecting, processing and analyzing data to track the state of a (information) system. Then, monitoring is going to the next level, to actually understand the information that is being collected and do something with it, like defining alerts for a given system.

To achieve both goals it is necessary to collect three dimensions of data, specifically:

- Logs: Registries about processes and applications, with useful data like timestamps and context

- Metrics: Numerical data about the performance of applications and application modules

- Traces: Data that allow to estabilish the complete route that a given operation traverses through a series of dependent applications

Hence, when the state of a given system is altered in some way, we have an Event, that correlates and ideally generates data on the three dimensions.

Why is OpenTelemetry important and which problem does it solve?

Developers recognize by experience that monitoring and observability are important, either to evaluate the actual state of a system or to do post-mortem analysis after disasters. Hence, it is natural to think that observability has been implemented in various ways. For example if we think on a system constructed with Java we have at least the following collection points:

- Logs: Systemd, /var/log, /opt/tomcat, FluentD

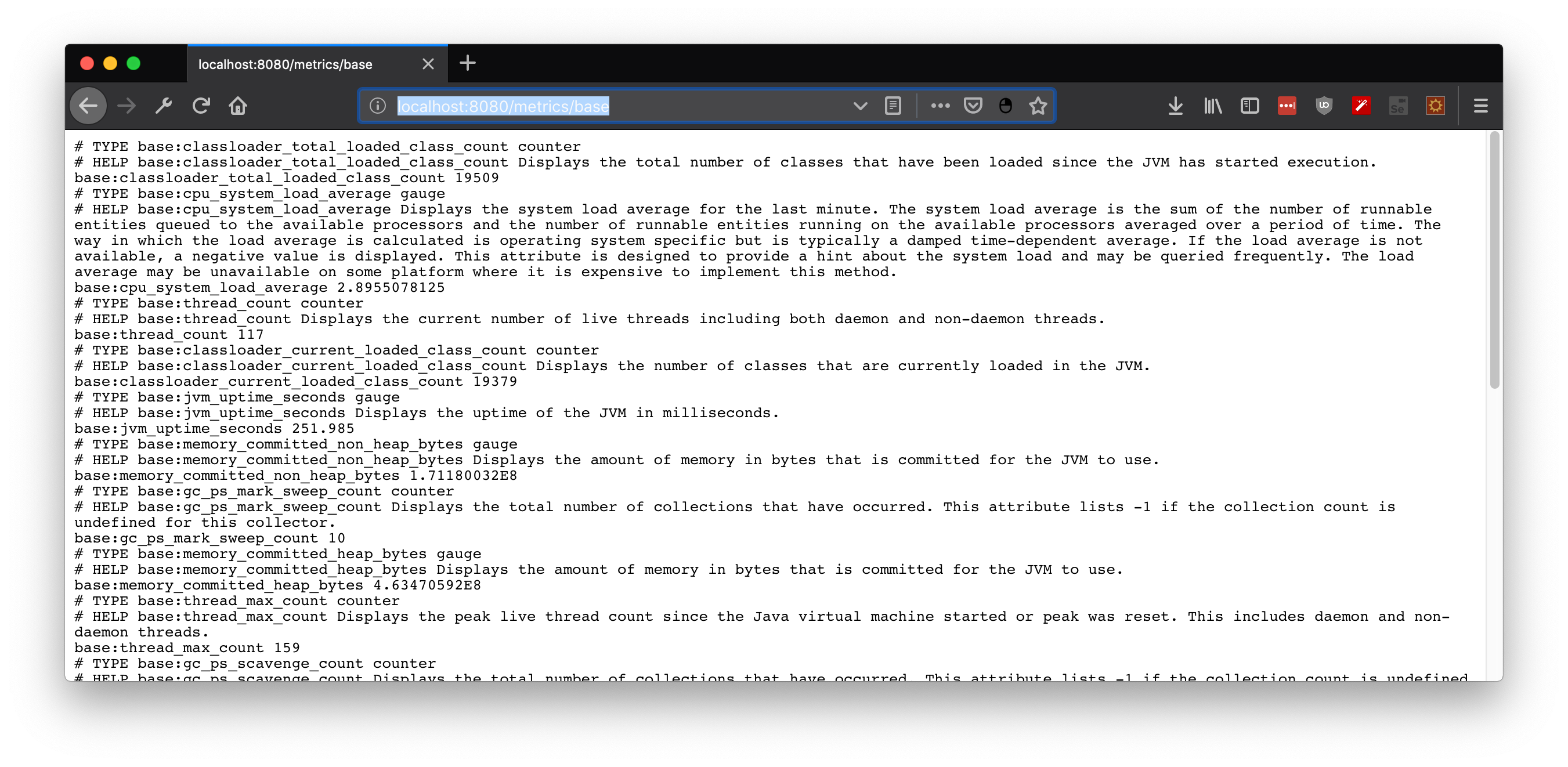

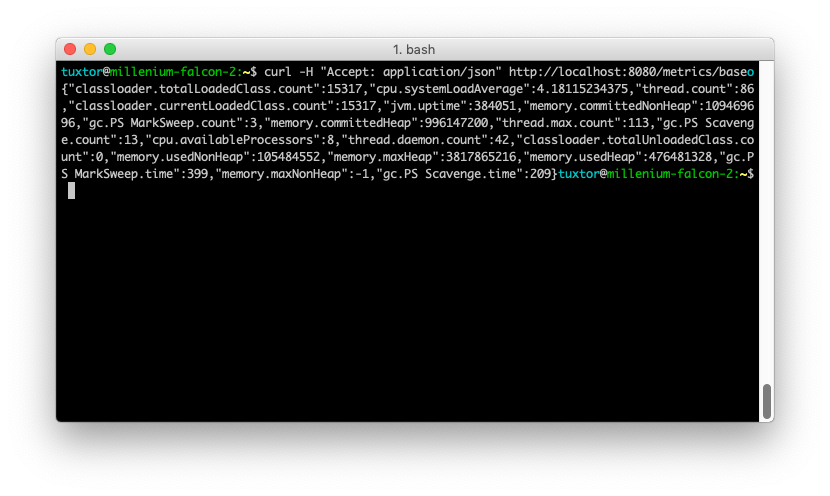

- Metrics: Java metrics via JMX, OS Metrics, vendor specific metrics via Spring Actuator

- Tracing: Data via Jaeger or Zipkin tooling in our Java workloads

This variety in turn imposes a great amount of complexity in instrumenting our systems to provide information, that a- comes in different formats, from b- technology that is difficult to implement, often with c- solutions that are too tied to a given provider or in the worst cases, d- technologies that only work with certain languages/frameworks.

And that's the magic about OpenTelemetry proposal, by creating a working group under the CNCF umbrella the project is able to provide useful things like:

- Common protocols that vendors and communities can implement to talk each other

- Standards for software communities to implement instrumentation in libraries and frameworks to provide data in OpenTelemetry format

- A collector able to retrieve/receive data from diverse origins compatible with OpenTelemetry, process it and send it to ...

- Analysis platforms, databases and cloud vendors able to receive the data and provide added value over it

In short, OpenTelemetry is the reunion of various great monitoring ideas that overlapping software communities can implement to facilitate the burden of monitoring implementations.

OpenTelemetry data pipeline

For me, the easiest way to think about OpenTelemetry concepts is a data pipeline, in this data pipeline you need to

- Instrument your workloads to push (or offer) the telemetry data to a processing/collecting element -i.e. OpenTelemetry Collector-

- Configure OpenTelemetry Collector to receive or pull the data from diverse workloads

- Configure OpenTelemetry Collector to process the data -i.e adding special tags, filtering data-

- Configure OpenTelemetry Collector to push (or offer) the data to compatible backends

- Configure and use the backends to receive (or pull) the data from the collector, to allow analysis, alarms, AI ... pretty much any case that you can think about with data

Setting up an observability stack with OpenTelemetry Collector, Grafana, Prometheus, Loki, Tempo and Podman

As OpenTelemetry got popular various vendors have implemented support for it, to mention a few:

Self-hosted platforms

Cloud platforms

Hence, for development purposes, it is always useful to know how to bootstrap a quick observability stack able to receive and show OpenTelemetry capabilities.

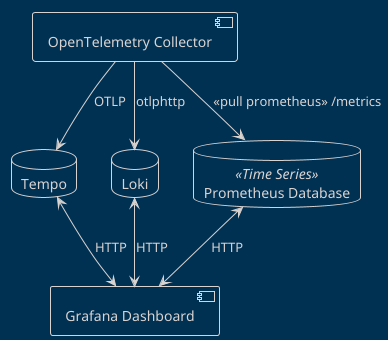

For this purpose we will use the following elements:

- Prometheus as time-series database for metrics

- Loki as logs platform

- Tempo as a tracing platform

- Grafana as a web UI

And of course OpenTelemetry collector. This example is based on various Grafana examples, with a little bit of tweaking to demonstrate the different ways of collecting, processing and sending data to backends.

OpenTelemetry collector

As stated previously, OpenTelemetry collector acts as an intermediary that receives/pull information from data sources, processes this information and, forwards the information to destinations like analysis platforms or even other collectors. The collector is able to do this either with compliant workloads or via plugins that talk with the workloads using proprietary formats.

As the plugins collection can be increased or decreased, vendors have created their own distributions of OpenTelemetry collectors, for reference I've used successfully in the real world:

- Amazon ADOT

- Splunk Distribution of OpenTelemetry Collector

- Grafana Alloy

- OpenTelemetry Collector (the reference implementation)

You could find a complete list directly on OpenTelemetry website.

For this demonstration, we will create a data pipeline using the contrib version of the reference implementation which provides a good amount of receivers, exporters and processors. In our case Otel configuration is designed to:

- Receive data from Spring Boot workloads (ports 4317 and 4318)

- Process the data adding a new tag to metrics

- Expose an endpoint for Prometheus scrapping (port 8889)

- Send logs to Loki (port 3100) using otlphttp format

- Send traces to Tempo (port 9411) using otlp format

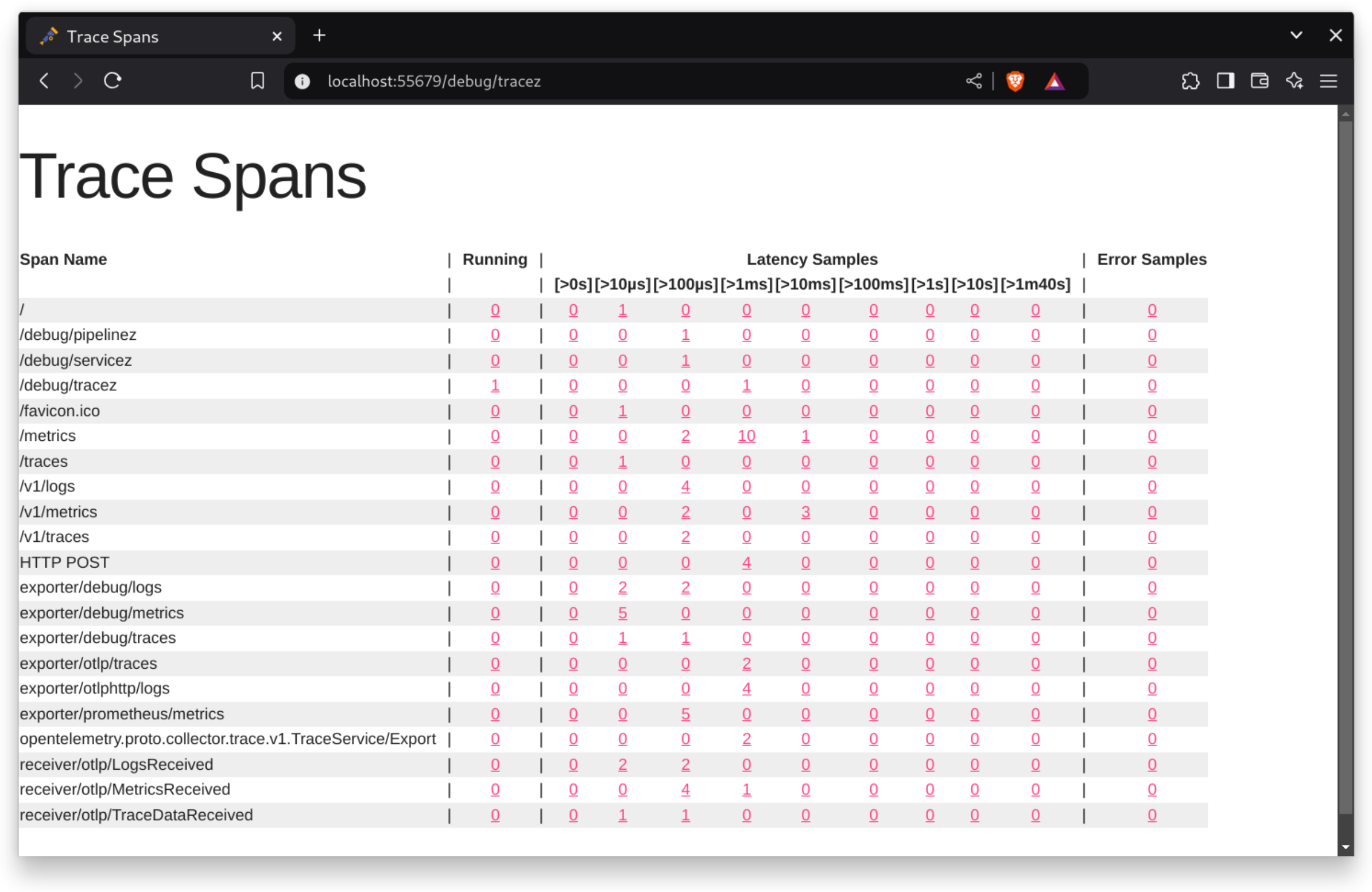

- Exposes a rudimentary dashboard from the collector, called zpages. Very useful for debugging.

otel-config.yaml

receivers:

otlp:

protocols:

grpc:

endpoint: 0.0.0.0:4317

http:

endpoint: 0.0.0.0:4318

processors:

attributes:

actions:

- key: team

action: insert

value: vorozco

exporters:

debug:

prometheus:

endpoint: "0.0.0.0:8889"

otlphttp:

endpoint: http://loki:3100/otlp

otlp:

endpoint: tempo:4317

tls:

insecure: true

service:

extensions: [zpages]

pipelines:

metrics:

receivers: [otlp]

processors: [attributes]

exporters: [debug,prometheus]

traces:

receivers: [otlp]

exporters: [debug, otlp]

logs:

receivers: [otlp]

exporters: [debug, otlphttp]

extensions:

zpages:

endpoint: "0.0.0.0:55679"

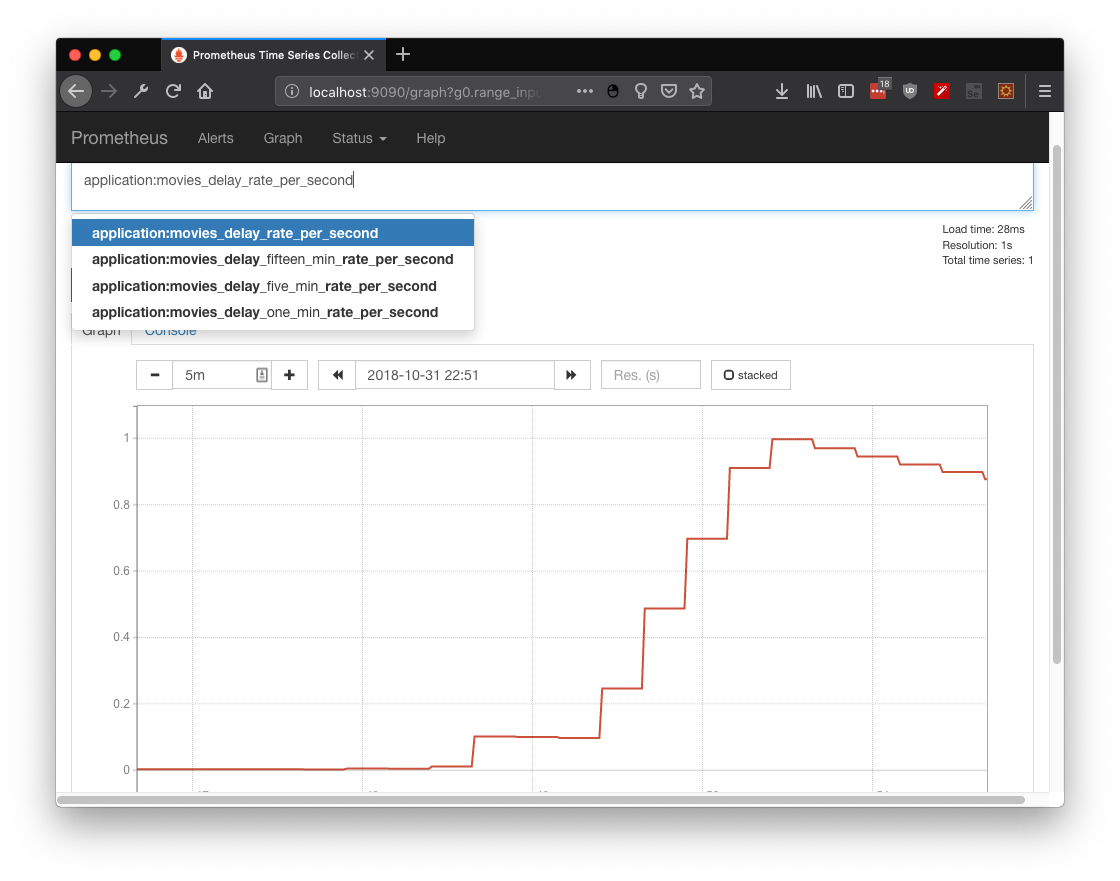

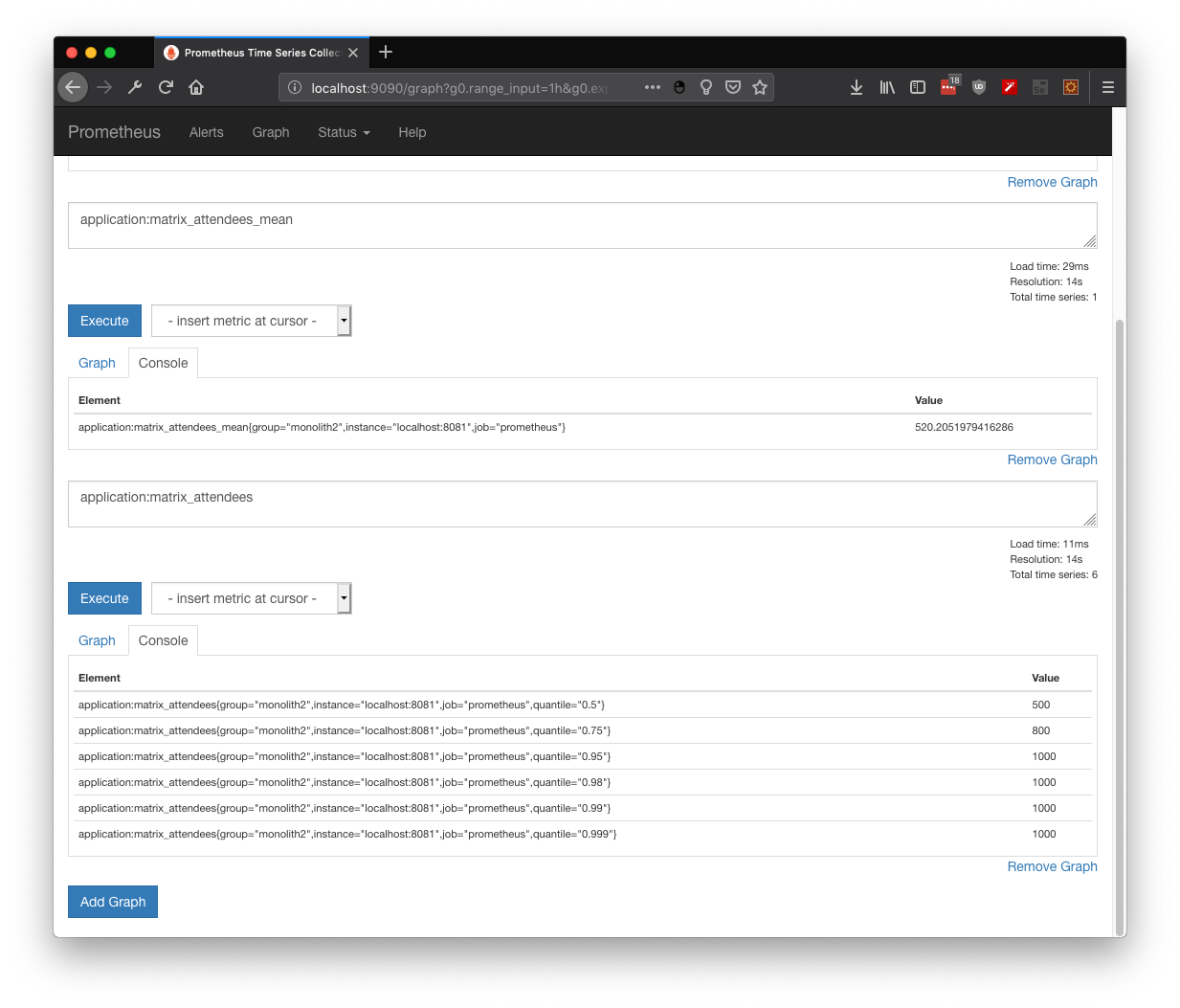

Prometheus

Prometheus is a well known analysis platform, that among other things offers dimensional data and a performant time-series storage.

By default it works as a metrics scrapper, then, workloads provide a http endpoint offering data using the Prometheus format. For our example we configured Otel to offer metrics to the prometheus host via port 8889.

prometheus:

endpoint: "prometheus:8889"

Then, whe need to configure Prometheus to scrape the metrics from the Otel host. You would notice two ports, the one that we defined for the active workload data (8889) and another for metrics data for the collector itself (8888).

prometheus.yml

scrape_configs:

- job_name: "otel"

scrape_interval: 10s

static_configs:

- targets: ["otel:8889"]

- targets: ["otel:8888"]

It is worth highlighting that Prometheus also offers a way to ingest information instead of scrapping it, and, the official support for OpenTelemetry ingestion is coming on the new versions.

Loki

As described in the website, Loki is a specific solution for log aggregation heavily inspired by Prometheus, with the particular design decision to NOT format in any way the log contents, leaving that responsibility to the query system.

To configure the project for local environments, the project offers a configuration that is usable for most of the development purposes. The following configuration is an adaptation to preserve the bare minimum to work with temporal files and memory.

loki.yaml

auth_enabled: false

server:

http_listen_port: 3100

grpc_listen_port: 9096

common:

instance_addr: 127.0.0.1

path_prefix: /tmp/loki

storage:

filesystem:

chunks_directory: /tmp/loki/chunks

rules_directory: /tmp/loki/rules

replication_factor: 1

ring:

kvstore:

store: inmemory

query_range:

results_cache:

cache:

embedded_cache:

enabled: true

max_size_mb: 100

schema_config:

configs:

- from: 2020-10-24

store: tsdb

object_store: filesystem

schema: v13

index:

prefix: index_

period: 24h

ruler:

alertmanager_url: http://localhost:9093

limits_config:

allow_structured_metadata: true

Then, we configure an exporter to deliver the data to the loki host using oltphttp format.

otlphttp:

endpoint: http://loki:3100/otlp

Tempo

In similar fashion than Loki, Tempo is an Open Source project created by grafana that aims to provide a distributed tracing backend. On a personal note, for me besides performance it shines for being compatible not only with OpenTelemetry, it can also ingest data in Zipkin and Jaeger formats.

To configure the project for local environments, the project offers a configuration that is usable for most of the development purposes. The following configuration is an adaptation to remove the metrics generation and simplify the configuration, however with this we loose the service graph feature.

tempo.yaml

stream_over_http_enabled: true

server:

http_listen_port: 3200

log_level: info

query_frontend:

search:

duration_slo: 5s

throughput_bytes_slo: 1.073741824e+09

metadata_slo:

duration_slo: 5s

throughput_bytes_slo: 1.073741824e+09

trace_by_id:

duration_slo: 5s

distributor:

receivers:

otlp:

protocols:

http:

grpc:

ingester:

max_block_duration: 5m # cut the headblock when this much time passes. this is being set for demo purposes and should probably be left alone normally

compactor:

compaction:

block_retention: 1h # overall Tempo trace retention. set for demo purposes

storage:

trace:

backend: local # backend configuration to use

wal:

path: /var/tempo/wal # where to store the wal locally

local:

path: /var/tempo/blocks

Then, we configure an exporter to deliver the data to Tempo host using oltp/grpc format.

otlp:

endpoint: tempo:4317

tls:

insecure: true

Grafana

Loki, Tempo and (to some extent) Prometheus are data storages, but we still need to show this data to the user. Here, Grafana enters the scene.

Grafana offers a good selection of analysis tools, plugins, dashboards, alarms, connectors and a great community that empowers observability. Besides having a great compatibility with Prometheus, it offers of course a perfect compatibility with their other offerings.

To configure Grafana you just need to plug compatible datasources and the rest of work will be on the web ui.

grafana.yaml

apiVersion: 1

datasources:

- name: Otel-Grafana-Example

type: prometheus

url: http://prometheus:9090

editable: true

- name: Loki

type: loki

access: proxy

orgId: 1

url: http://loki:3100

basicAuth: false

isDefault: true

version: 1

editable: false

- name: Tempo

type: tempo

access: proxy

orgId: 1

url: http://tempo:3200

basicAuth: false

version: 1

editable: false

apiVersion: 1

uid: tempo

Podman (or Docker)

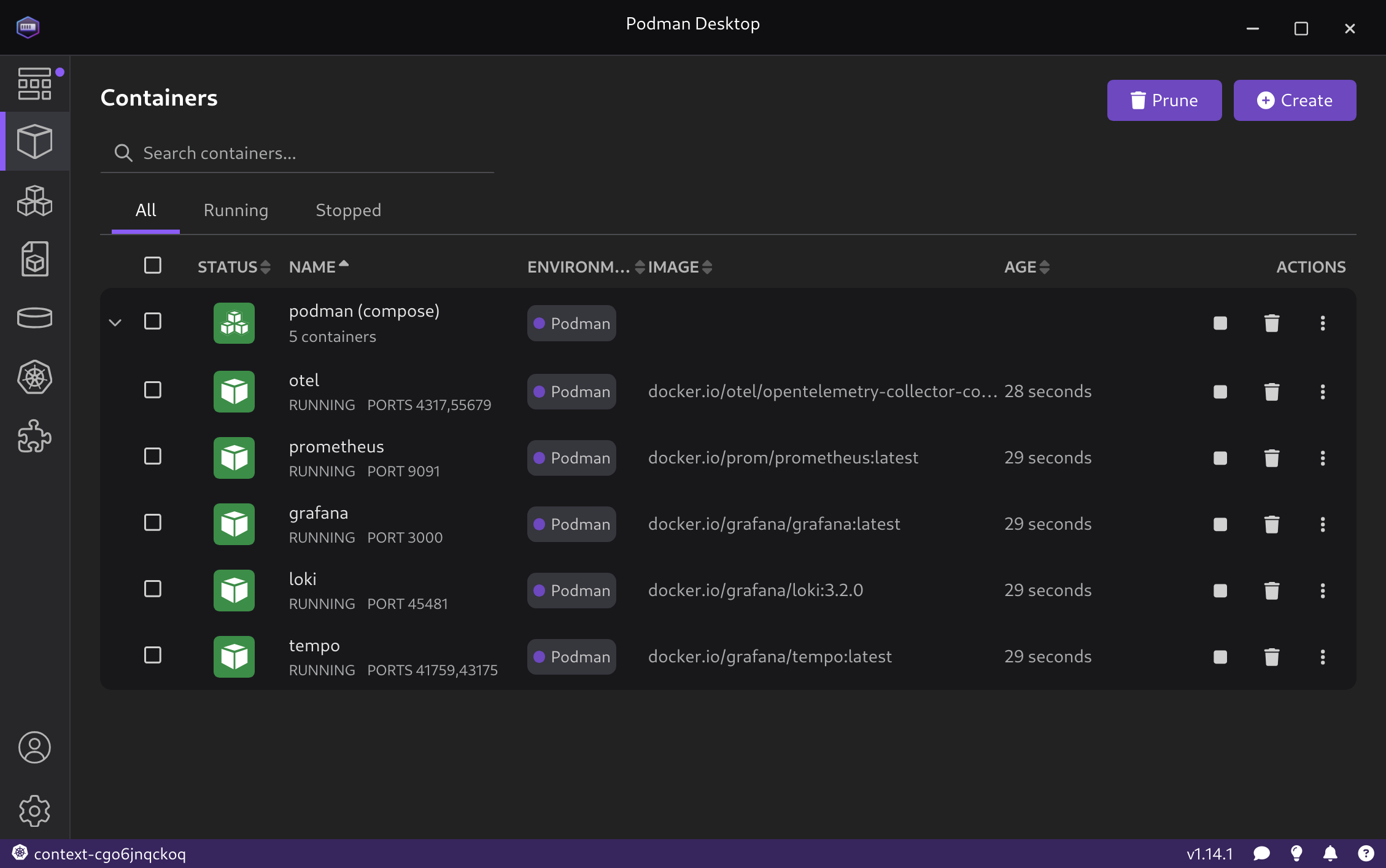

At this point you may have noticed that I've referred to the backends using single names, this is because I intend to set these names using a Podman Compose deployment.

otel-compose.yml

version: '3'

services:

otel:

container_name: otel

image: otel/opentelemetry-collector-contrib:latest

command: [--config=/etc/otel-config.yml]

volumes:

- ./otel-config.yml:/etc/otel-config.yml

ports:

- "4318:4318"

- "4317:4317"

- "55679:55679"

prometheus:

container_name: prometheus

image: prom/prometheus

command: [--config.file=/etc/prometheus/prometheus.yml]

volumes:

- ./prometheus.yml:/etc/prometheus/prometheus.yml

ports:

- "9091:9090"

grafana:

container_name: grafana

environment:

- GF_AUTH_ANONYMOUS_ENABLED=true

- GF_AUTH_ANONYMOUS_ORG_ROLE=Admin

image: grafana/grafana

volumes:

- ./grafana.yml:/etc/grafana/provisioning/datasources/default.yml

ports:

- "3000:3000"

loki:

container_name: loki

image: grafana/loki:3.2.0

command: -config.file=/etc/loki/local-config.yaml

volumes:

- ./loki.yaml:/etc/loki/local-config.yaml

ports:

- "3100"

tempo:

container_name: tempo

image: grafana/tempo:latest

command: [ "-config.file=/etc/tempo.yaml" ]

volumes:

- ./tempo.yaml:/etc/tempo.yaml

ports:

- "4317" # otlp grpc

- "4318"

At this point the compose description is pretty self-descriptive, but I would like to highlight some things:

- Some ports are open to the host -e.g. 4318:4318 - while others are closed to the default network that compose will be created among containers -e.g. 3100-

- This stack is designed to avoid any permanent data. Again, this is my personal way to boot quickly an observability stack to allow tests during deployment. To make it ready for production you probably would want to preserve the data in some volumes

Once the configuration is ready, you can launch it using the compose file

cd podman

podman compose -f otel-compose.yml up

If the configuration is ok, you should have five containers running without errors.

Instrumenting Spring Boot applications for OpenTelemetry

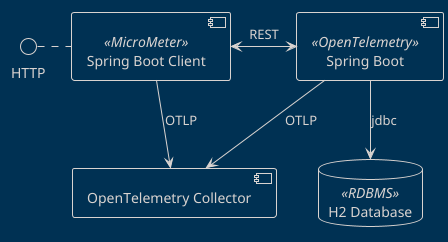

As part of my daily activities I was in charge of a major implementation of all these concepts. Hence it was natural for me to create a proof of concept that you could find at my GitHub.

For demonstration purposes we have two services with different HTTP endpoints:

springboot-demo:8080- Useful to demonstrate local and database tracing, performance, logs and OpenTelemetry instrumentation/books- A books CRUD using Spring Data/fibo- A Naive Fibonacci implementation that generates CPU load and delays/log- Which generate log messages using the different SLF4J levels

springboot-client-demo:8081- Useful to demonstrate tracing capabilities, Micrometer instrumentation and Micrometer Tracing instrumentation/trace-demo- A quick OpenFeing client that invokes books GetAll Books demo

Instrumentation options

Given the popularity of OpenTelemetry, developers can expect also multiple instrumentation options.

First of all, the OpenTelemetry project offers a framework-agnostic instrumentation that uses bytecode manipulation, for this instrumentation to work you need to include a Java Agent via Java Classpath. In my experience this instrumentation is preferred if you don't control the workload or if your platform does not offer OpenTelemetry support at all.

However, instrumentation of workloads can become really specific -e.g. instrumentation of a Database pool given a particular IoC mechanism-. For this, the Java world provides a good ecosystem, for example:

And of course Spring Boot.

Spring Boot is a special case with TWO major instrumentation options

Both options use Spring concepts like decorators and interceptors to capture and send information to the destinations. The only rule is to create the clients/services/objects in the Spring way (hence via Spring IoC).

I've used both successfully and my heavily opinionated conclusion is the following:

- Micrometer collects more information about spring metrics. Besides OpenTelemetry backend, it supports a plethora of backends directly without any collector intervention. If you cannot afford a collector, this is the way. From Micrometer perspective OpenTelemetry is just another backend

- Micrometer Tracing is the evolution of Spring Cloud Sleuth, hence if you have workloads with Spring Boot 2 and 3, you have to support both tools (or maybe migrate everything to Spring boot 3?)

- The Micrometer family does not offer a way to collect logs and send these to a backend, hence devs have to solve this by using an appender specific to your logging library. On the other hand OpenTelemetry Spring Boot starter offers this out of the box if you use Spring Boot default (SLF4J over Logback)

As these libraries are mutually exclusive, if the decision is mine, I would pick OpenTelemetry's Spring Boot starter. It offers logs support OOB and also a bridge for micrometer Metrics.

Instrumenting springboot-demo with OpenTelemetry SpringBoot starter

As always, it is also good to consider the official documentation.

Otel instrumentation with the Spring started is activated in three steps:

- You need to include both OpenTelemetry Bom and OpenTelemetry dependency. If you are planning to also use micrometer metrics, it is also a good idea to include Spring Actuator

<dependencyManagement>

<dependencies>

<dependency>

<groupId>io.opentelemetry.instrumentation</groupId>

<artifactId>opentelemetry-instrumentation-bom</artifactId>

<version>2.10.0</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

...

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>io.opentelemetry.instrumentation</groupId>

<artifactId>opentelemetry-spring-boot-starter</artifactId>

</dependency>

-

There is a set of optional libraries and adapters that you can configure if your workloads already diverged from the "Spring Way"

-

You need to activate (or not) the dimensions of observability (metrics, traces and logs). Also, you can finetune the exporting parameter like ports, urls or exporting periods. Either by using Spring Properties or env variables

#Configure exporters

otel.logs.exporter=otlp

otel.metrics.exporter=otlp

otel.traces.exporter=otlp

#Configure metrics generation

otel.metric.export.interval=5000 #Export metrics each five seconds

otel.instrumentation.micrometer.enabled=true #Enabe Micrometer metrics bridge

Instrumenting springboot-client-demo with Micrometer and Micrometer Tracing

Again, this instrumentation does not support logs exporting. Also, it is a good idea to check the latest documentation for Micrometer and Micrometer Tracing.

- As in the previous example, you need to enable Spring Actuator (which includes Micrometer). As OpenTelemetry is just a backend from Micrometer perspective, you just need to ehable the corresponding OTLP registry which will export metrics to localhost by default.

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-otlp</artifactId>

</dependency>

- In a similar way you need to Metrics, once actuator is enabled you just need to add support for the tracing backend

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-tracing-bridge-otel</artifactId>

</dependency>

- Finally, you can finetune the configuration using Spring properties. For example, you can decide if 100% of traces are reproted or how often the metrics are reported to the backend.

management.otlp.tracing.endpoint=http://localhost:4318/v1/traces

management.otlp.tracing.timeout=10s

management.tracing.sampling.probability=1

management.otlp.metrics.export.url=http://localhost:4318/v1/metrics

management.otlp.metrics.export.step=5s

management.opentelemetry.resource-attributes."service-name"=${spring.application.name}

Testing and E2E sample

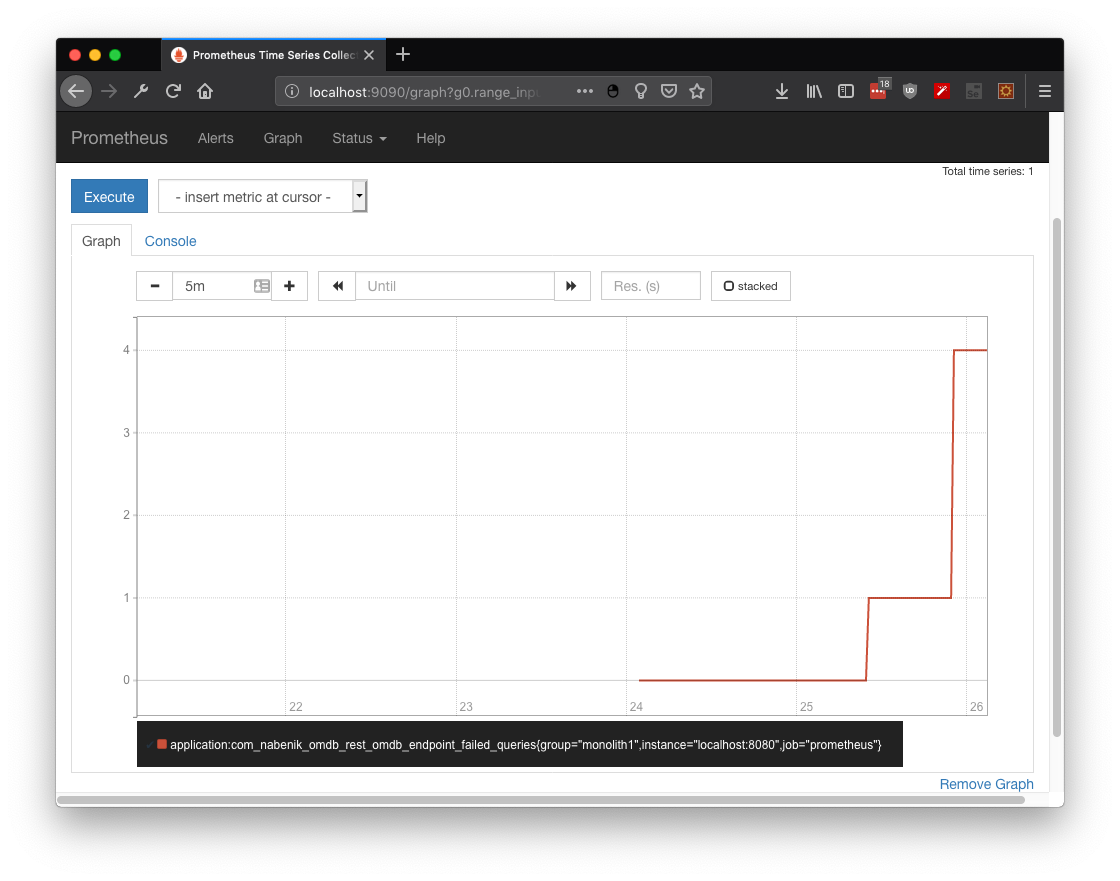

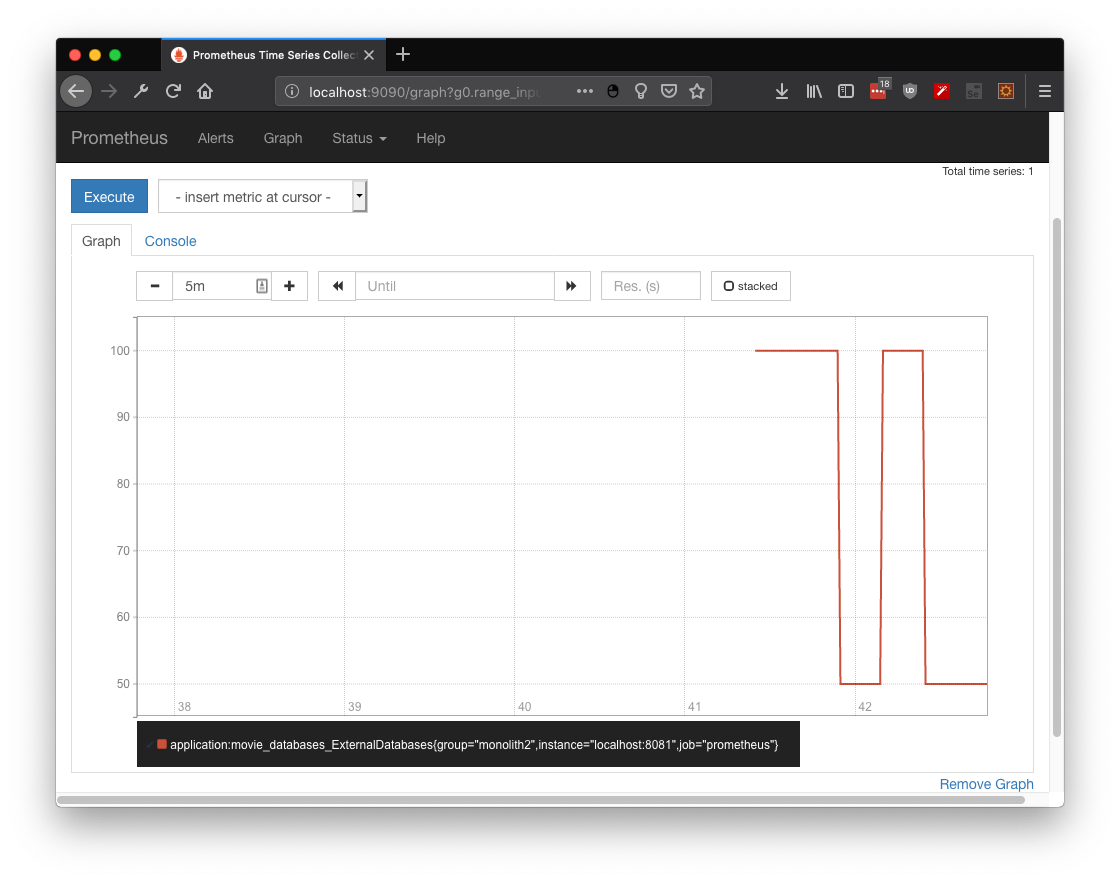

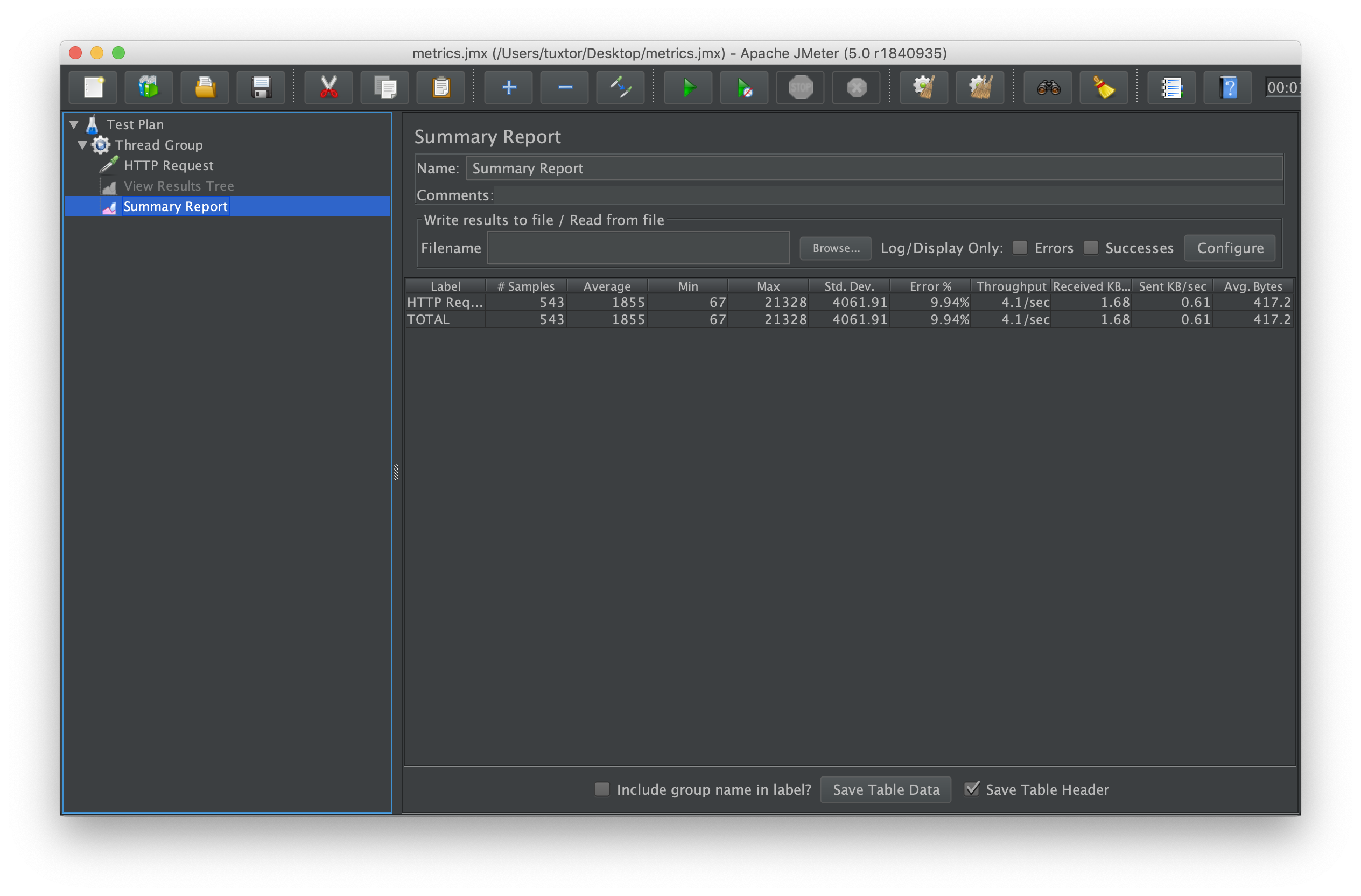

Generating workload data

The POC provides the following structure

├── podman # Podman compose config files

├── springboot-client-demo #Spring Boot Client instrumented with Actuator, Micrometer and MicroMeter tracing

└── springboot-demo #Spring Boot service instrumented with OpenTelemetry Spring Boot Starter

- The first step is to boot the observability stack we created previously.

cd podman

podman compose -f otel-compose.yml up

This will provide you an instance of Grafana on port 3000

Then, it is time to boot the first service!. You only need Java 21 on the active shell:

cd springboot-demo

mvn spring-boot:run

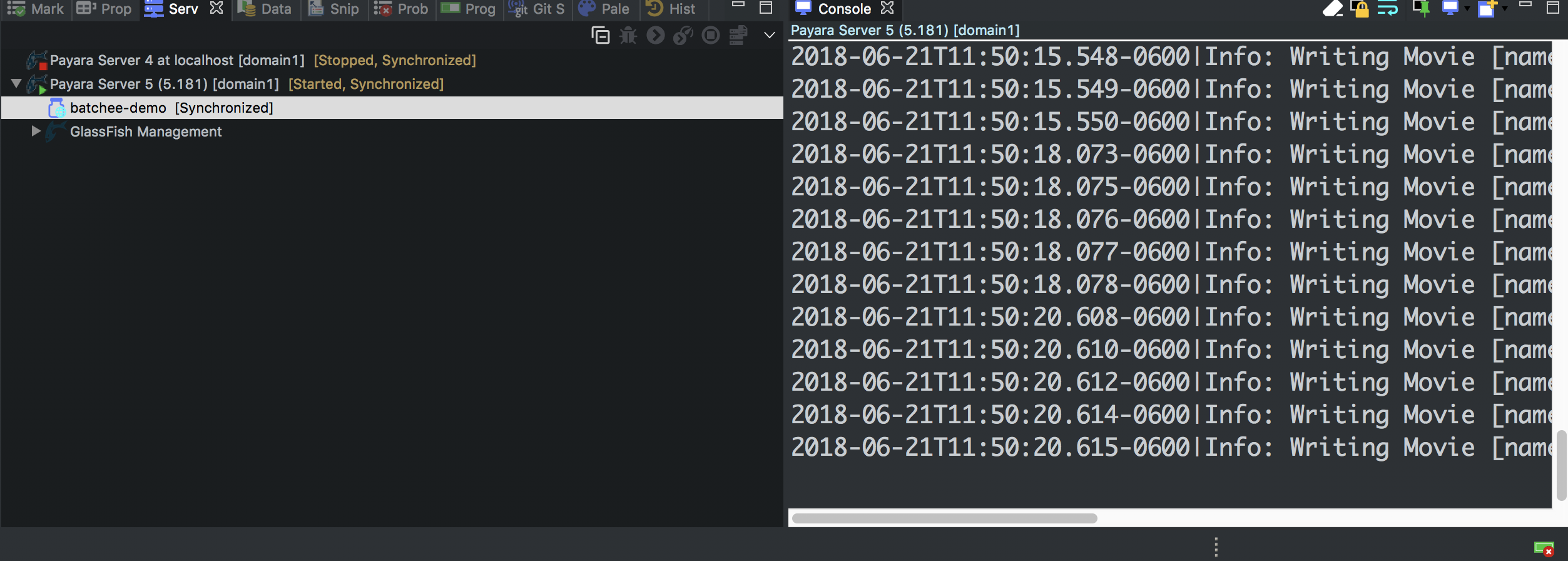

If the workload is properly configured, you will see the following information on the OpenTelemetry container standard output. Which basically says you are successfully reporting data.

[otel] | 2024-12-01T22:10:07.730Z info Logs {"kind": "exporter", "data_type": "logs", "name": "debug", "resource logs": 1, "log records": 24}

[otel] | 2024-12-01T22:10:10.671Z info Metrics {"kind": "exporter", "data_type": "metrics", "name": "debug", "resource metrics": 1, "metrics": 64, "data points": 90}

[otel] | 2024-12-01T22:10:10.672Z info Traces {"kind": "exporter", "data_type": "traces", "name": "debug", "resource spans": 1, "spans": 5}

[otel] | 2024-12-01T22:10:15.691Z info Metrics {"kind": "exporter", "data_type": "metrics", "name": "debug", "resource metrics": 1, "metrics": 65, "data points": 93}

[otel] | 2024-12-01T22:10:15.833Z info Metrics {"kind": "exporter", "data_type": "metrics", "name": "debug", "resource metrics": 1, "metrics": 65, "data points": 93}

[otel] | 2024-12-01T22:10:15.835Z info Logs {"kind": "exporter", "data_type": "logs", "name": "debug", "resource logs": 1, "log records": 5}

The data is being reported over the OpenTelemetry ports (4317 and 4318) which are open from Podman to the host. By default all telemetry libraries report to localhost, but this can be configured for other cases like FaaS or Kubernetes.

Also, you could verify the reporting status in ZPages

Finally let's do the same with Spring Boot client:

cd springboot-client-demo

mvn spring-boot:run

As described in the previous section, I created a set of interactions to:

Generate CPU workload using Naive fibonacci

curl http://localhost:8080/fibo\?n\=45

Generate logs in different levels

curl http://localhost:8080/fibo\?n\=45

Persist data using a CRUD

curl -X POST --location "http://localhost:8080/books" \

-H "Content-Type: application/json" \

-d '{

"author": "Miguel Angel Asturias",

"title": "El señor presidente",

"isbn": "978-84-376-0494-7",

"publisher": "Editorial planeta"

}'

And then retrieve the data using a secondary service

curl http://localhost:8081/trace-demo

This asciicast shows the interaction:

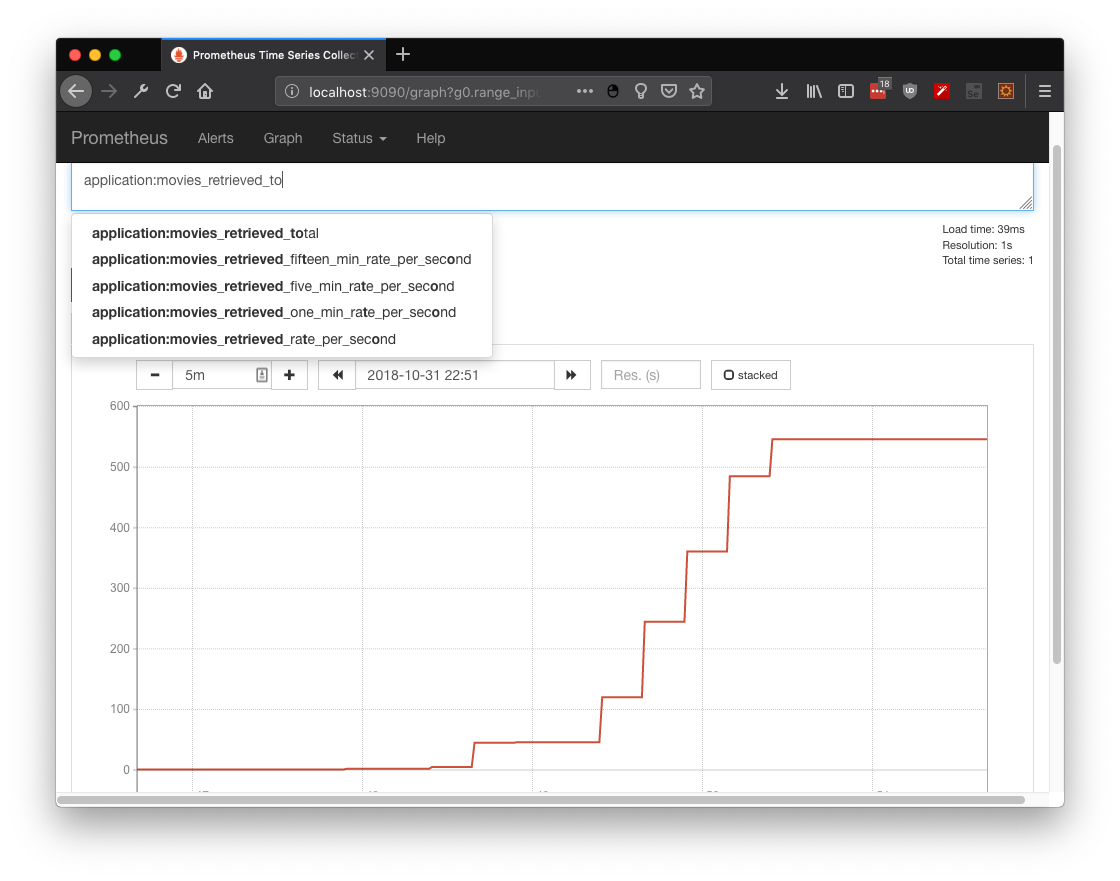

Grafana results

Once the data is accesible by Grafana, the what to do with data is up to you, again, you could:

- Create dashboards

- Configure alarms

- Configure notifications from alarms

The quickest way to verify if the data is reported correctly is to verify directly in Grafana explore.

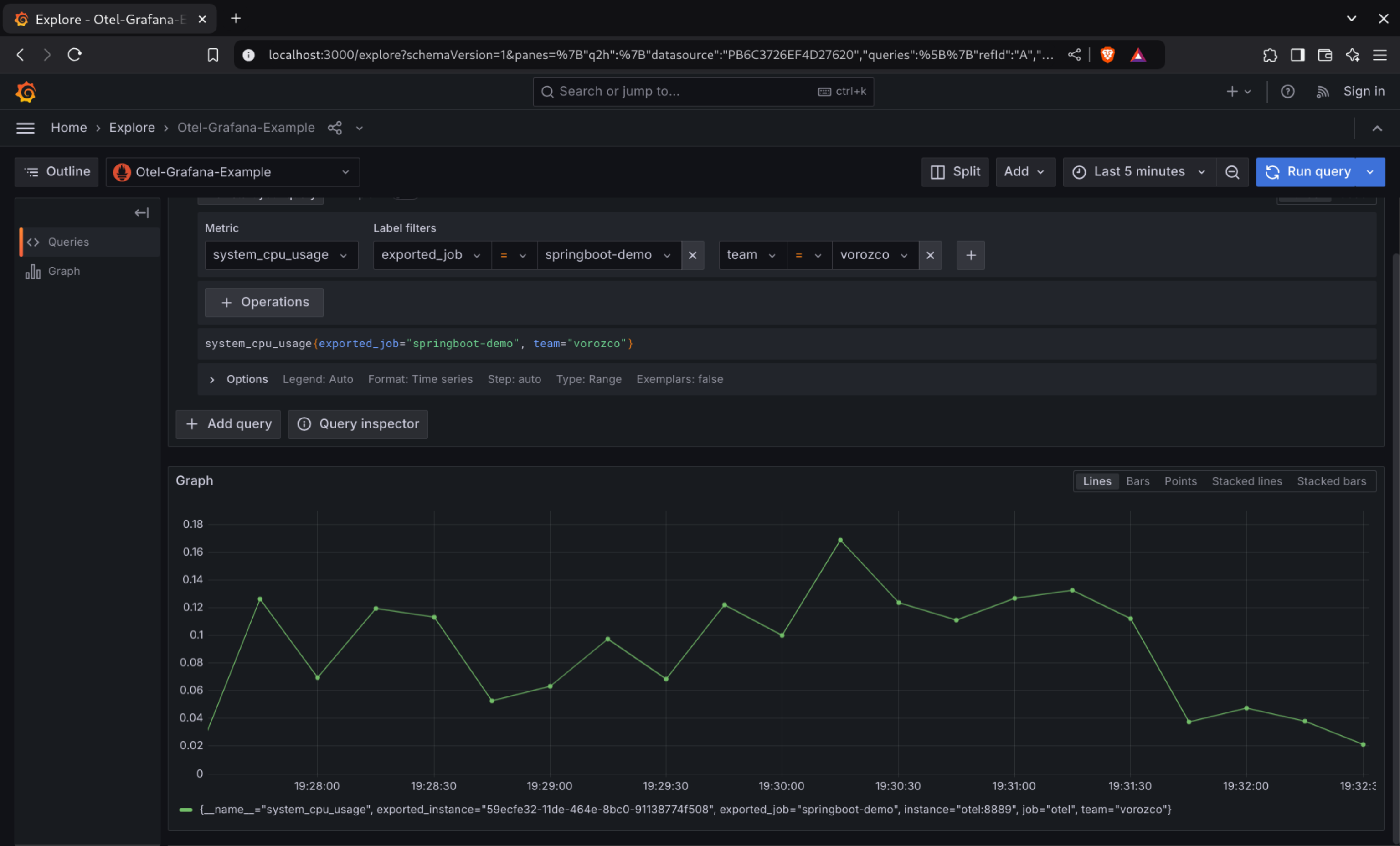

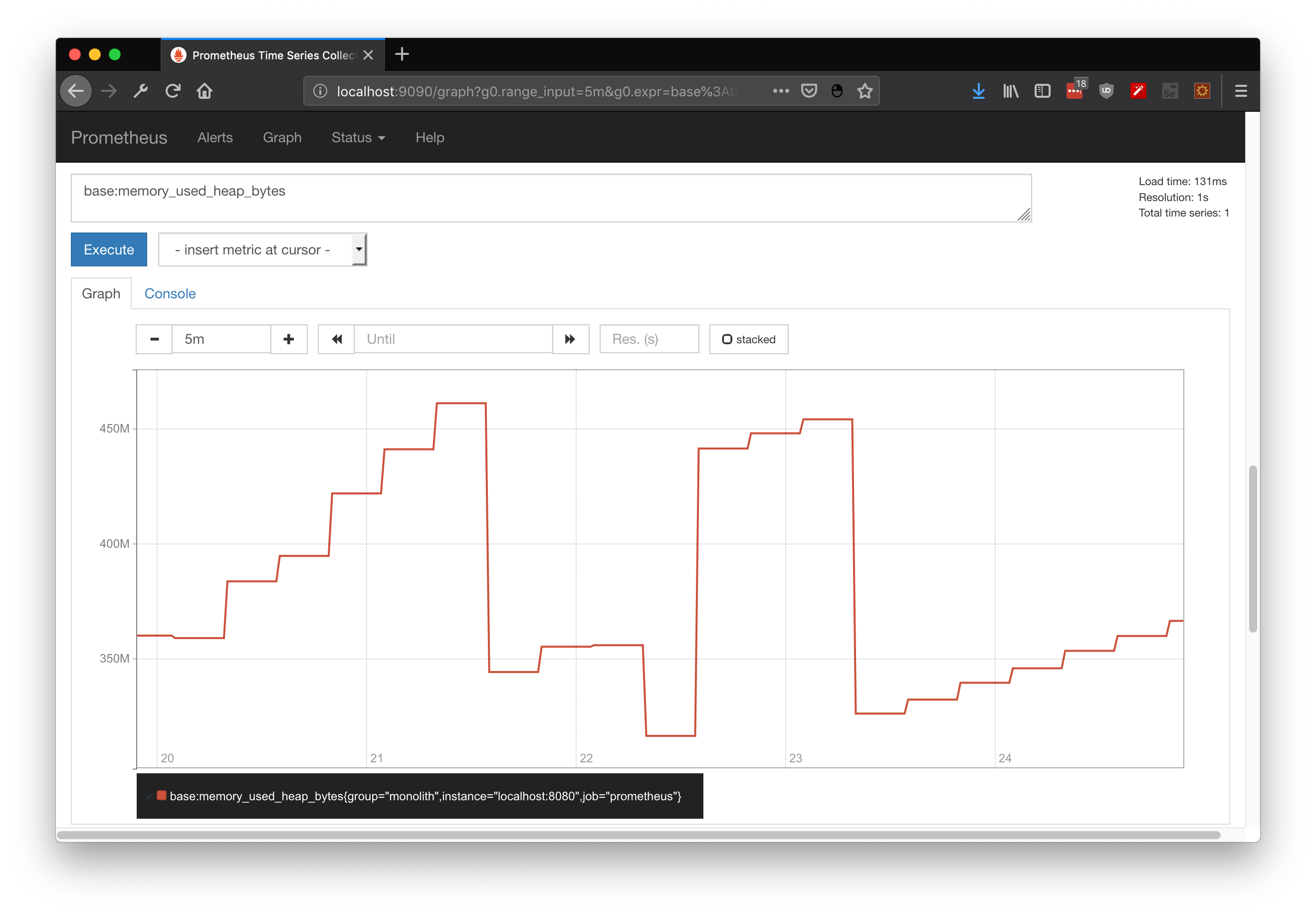

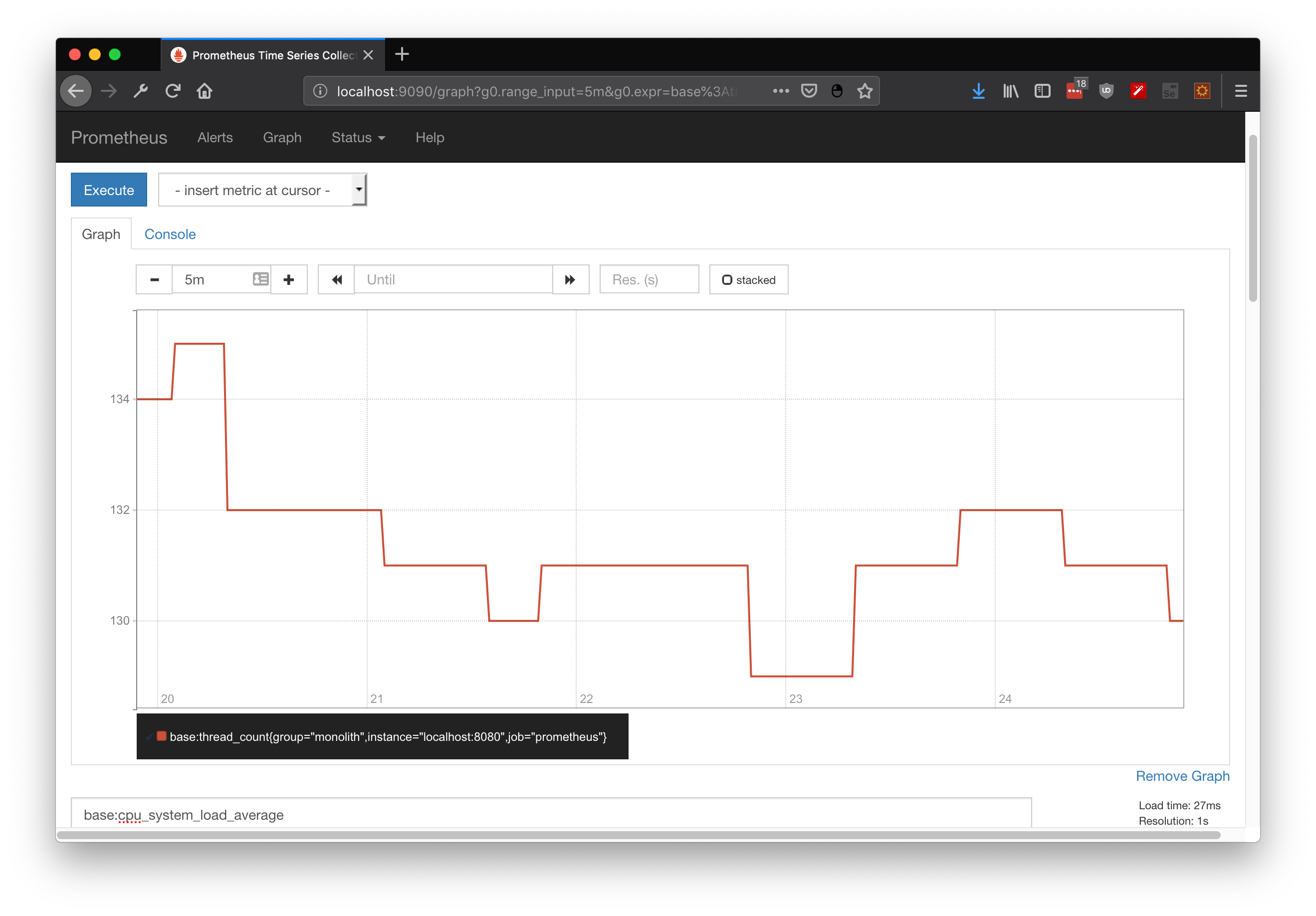

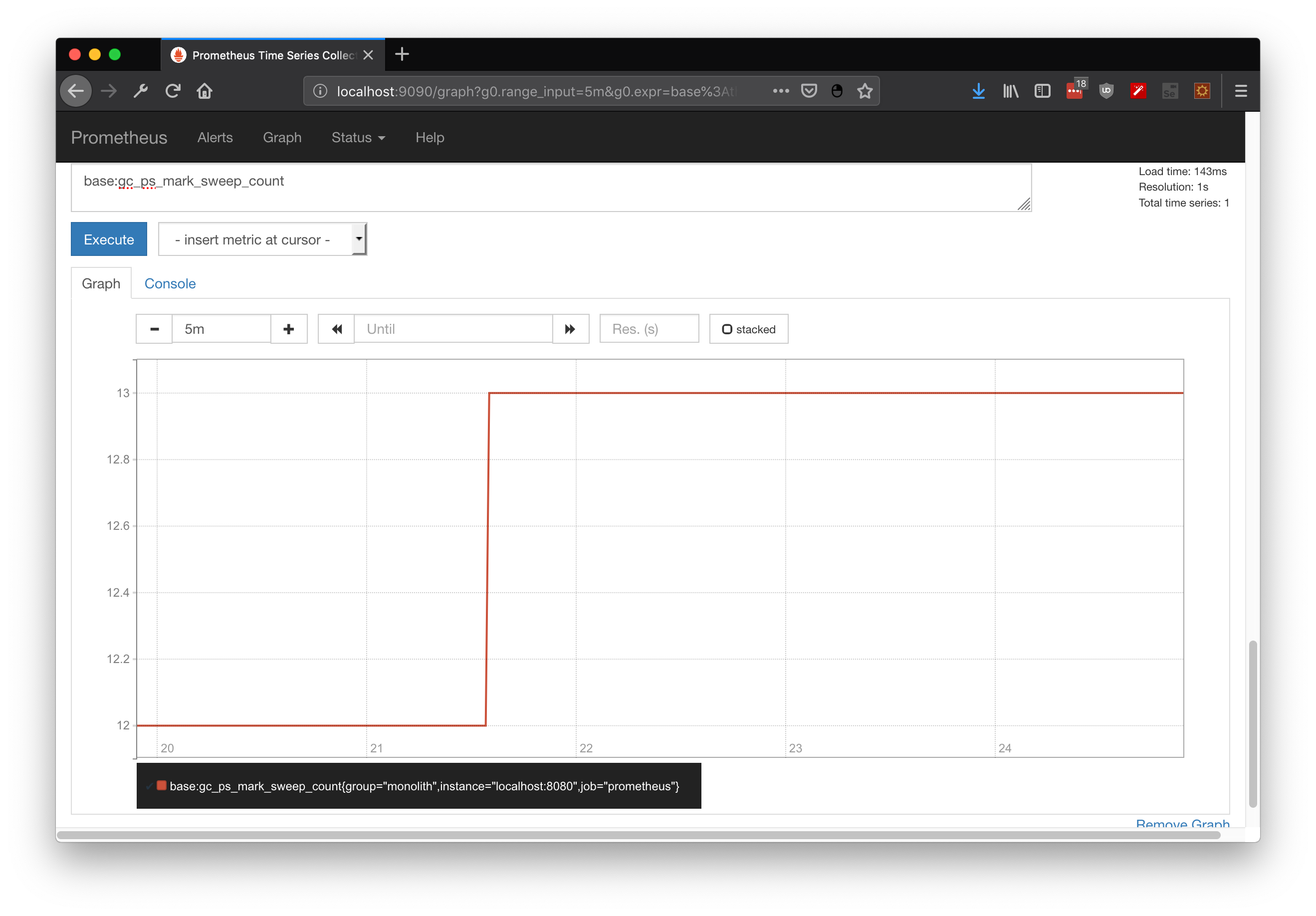

First, we can check some metrics like system_cpu_usage and filter by service name. In this case I used springboot-demo which has the CPU demo using naive fibonacci, I can even filter by my own tag (which was added by Otel processor):

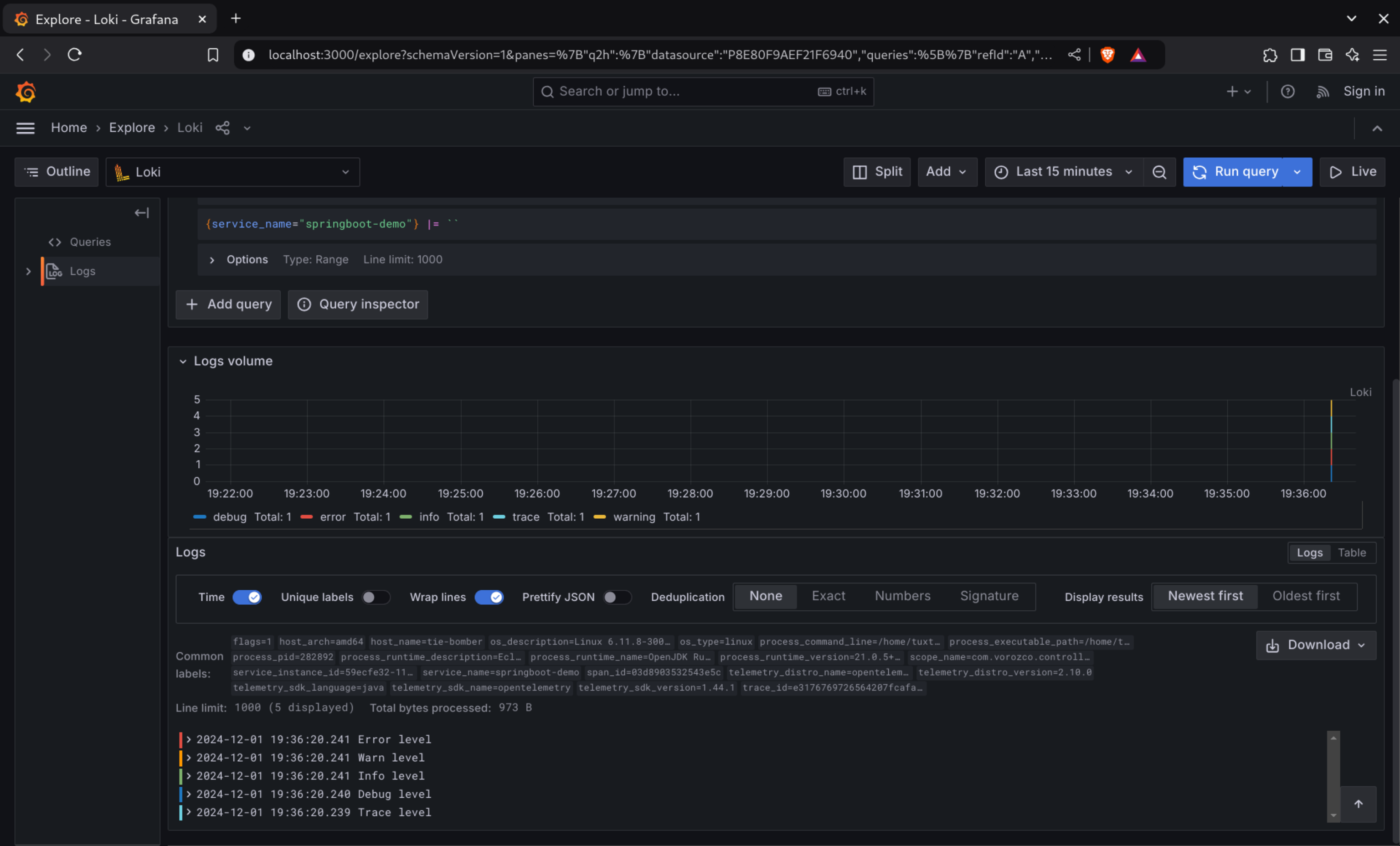

In the same way, logs are already stored in Loki:

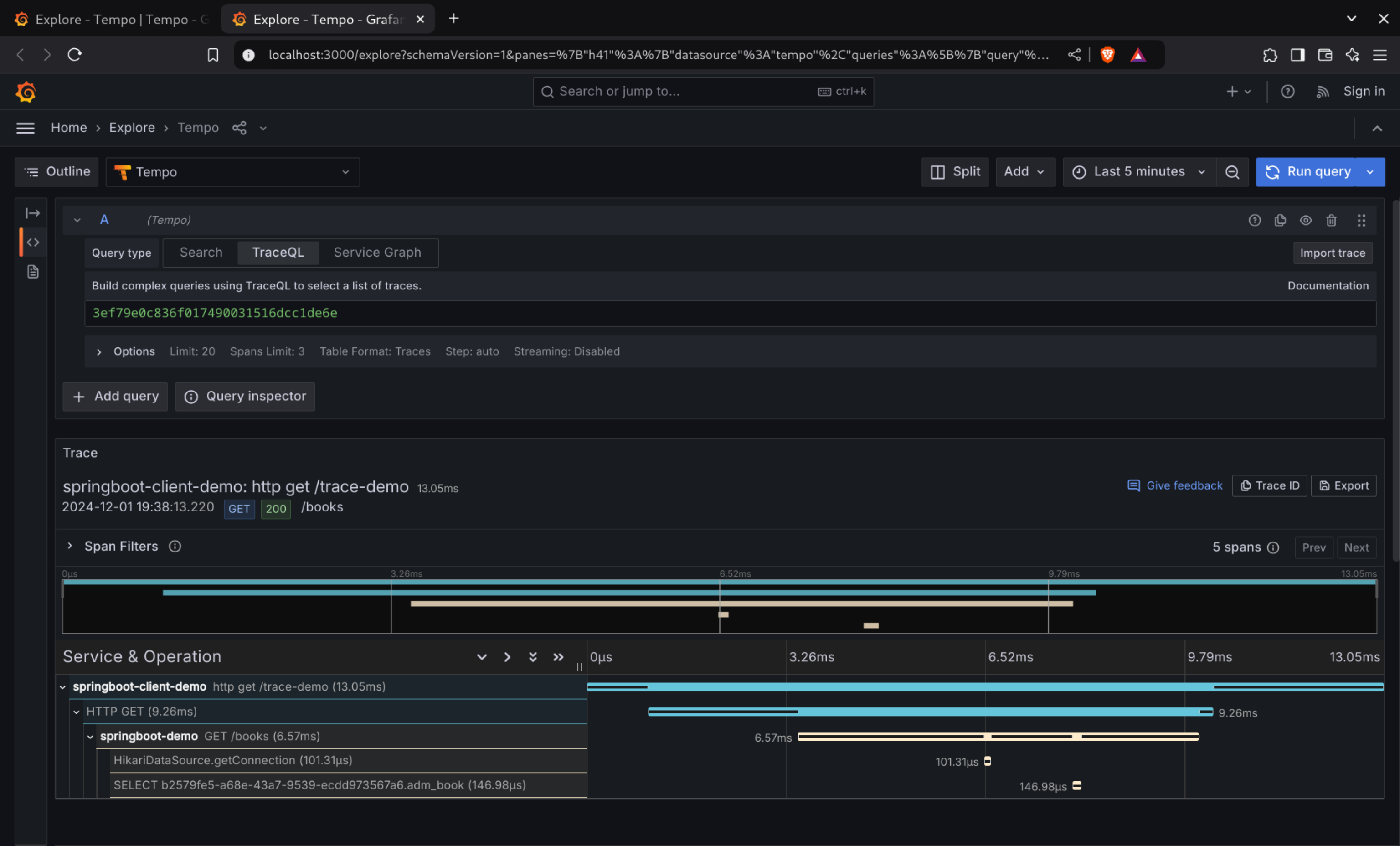

Finally, we could check the whole trace, including both services and interaction with H2 RDBMS:

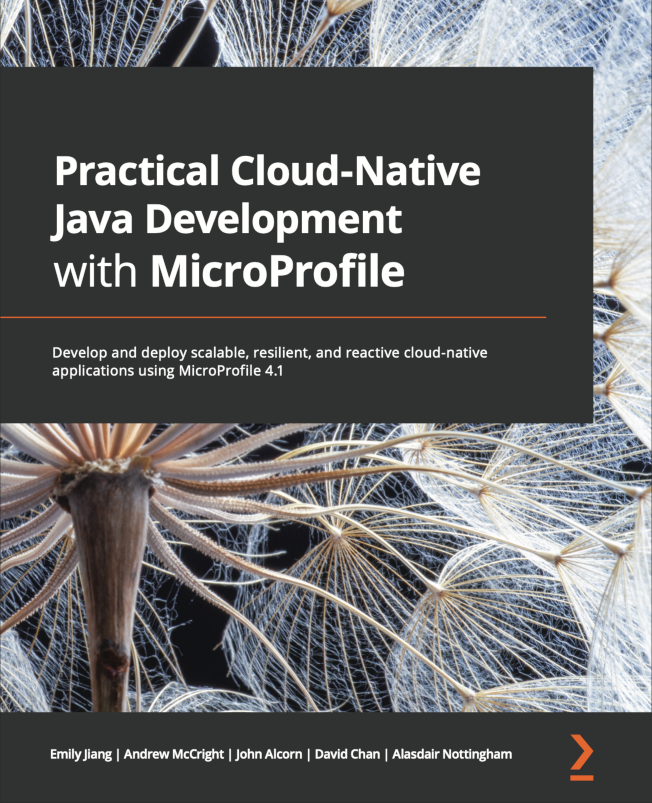

Book Review: Practical Cloud-Native Java Development with MicroProfile

24 September 2021

General information

- Pages: 403

- Published by: Packt

- Release date: Aug 2021

Disclaimer: I received this book as a collaboration with Packt and one of the authors (Thanks Emily!)

A book about Microservices for the Java Enterprise-shops

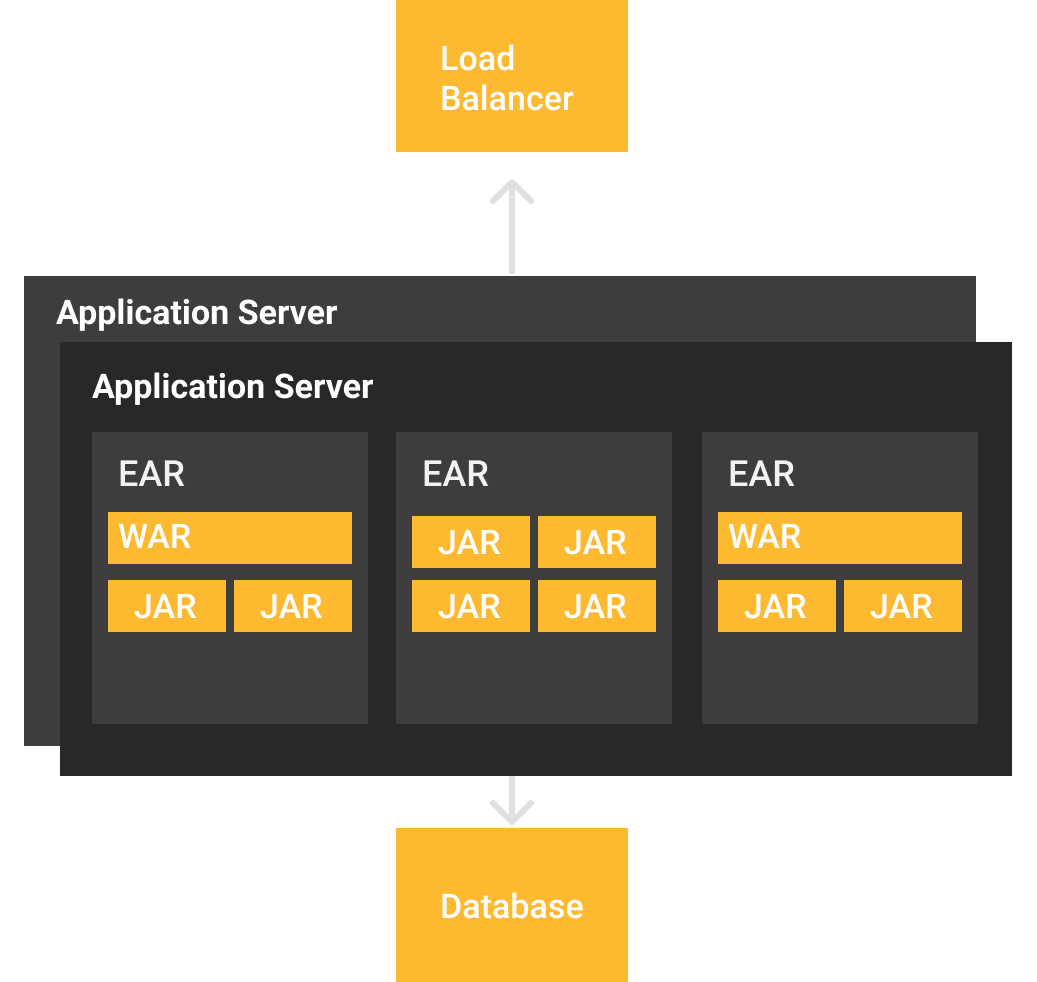

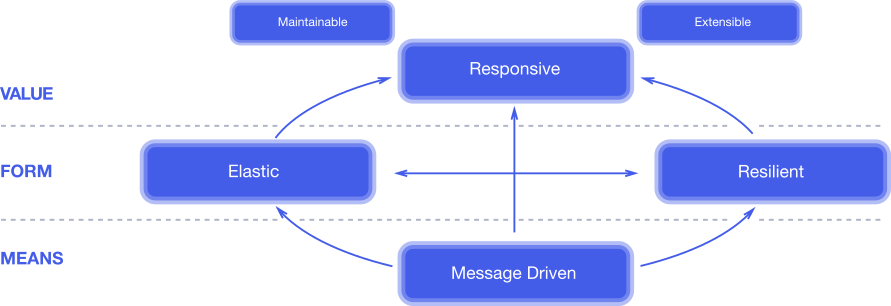

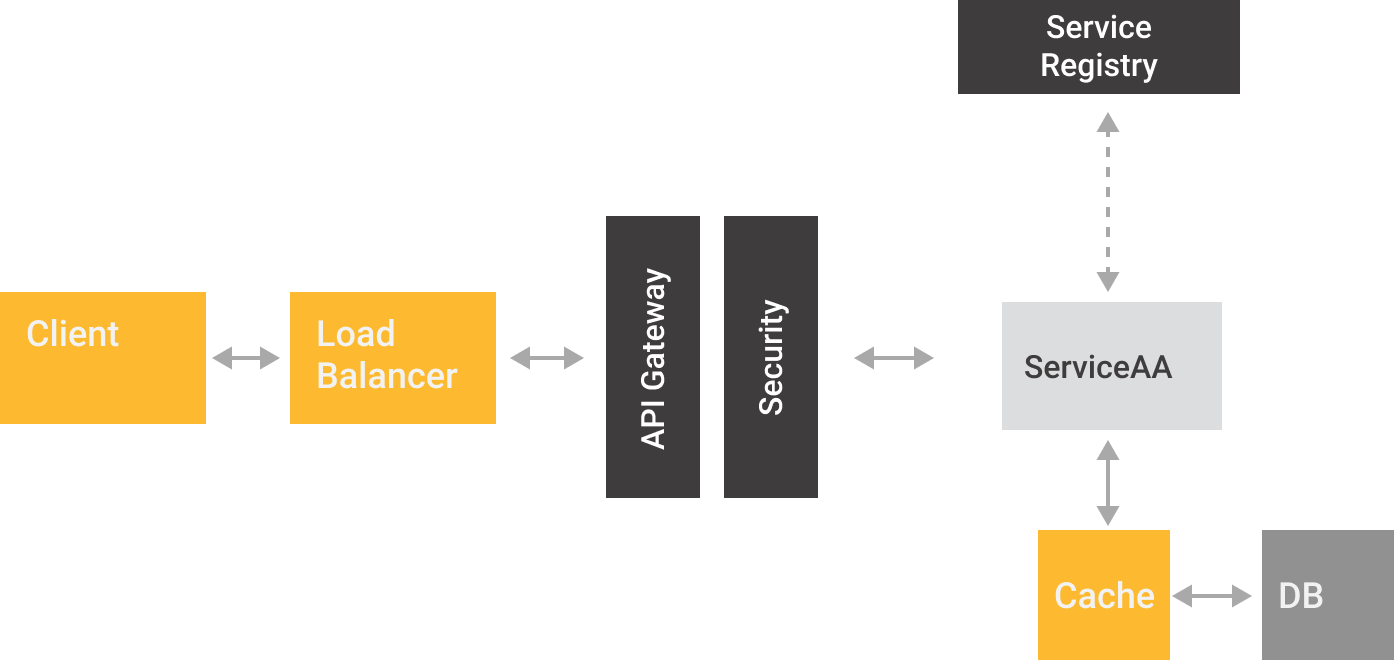

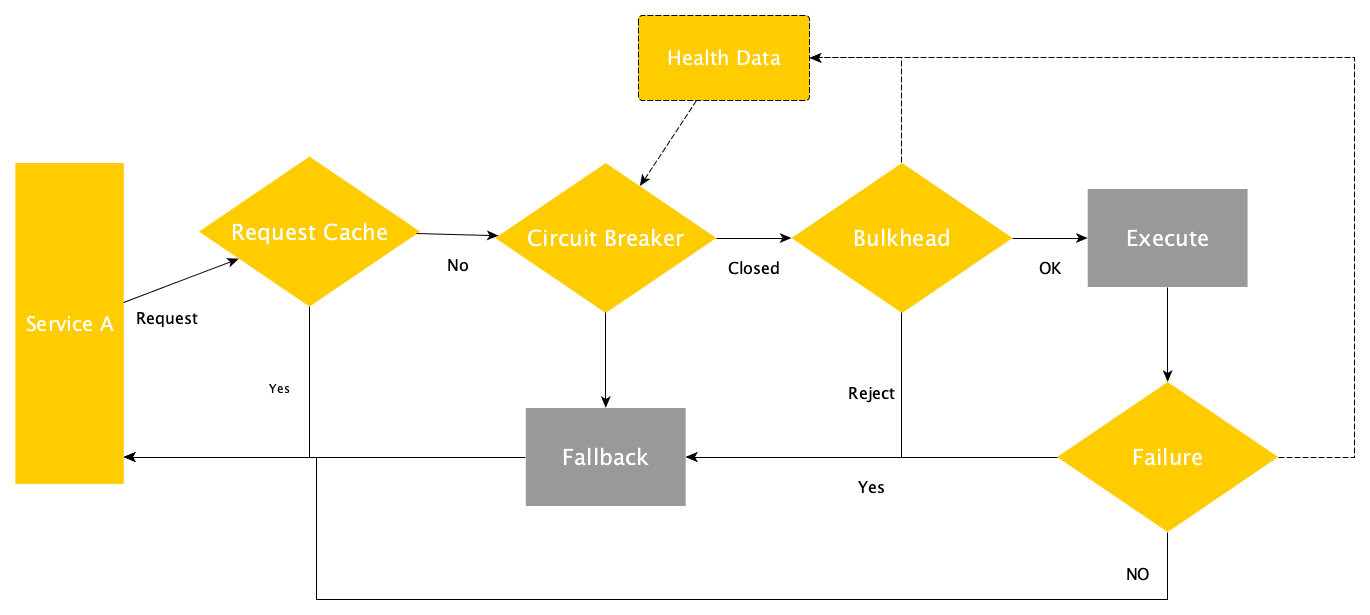

Year after year many enterprise companies are struggling to embrace Cloud Native practices that we tend to denominate as Microservices, however Microservices is a metapattern that needs to follow a well defined approach, like:

- (We aim for) reactive systems

- (Hence we need a methodology like) 12 Cloud Native factors

- (Implementing) well-known design patterns

- (Dividing the system by using) Domain Driven Design

- (Implementing microservices via) Microservices chassis and/or service mesh

- (Achieving deployments by) Containers orchestration

Many of these concepts require a considerable amount of context, but some books, tutorials, conferences and YouTube videos tend to focus on specific niche information, making difficult to have a "cold start" in the microservices space if you have been developing regular/monolithic software. For me, that's the best thing about this book, it provides a holistic view to understand microservices with Java and MicroProfile for "cold starter developers".

About the book

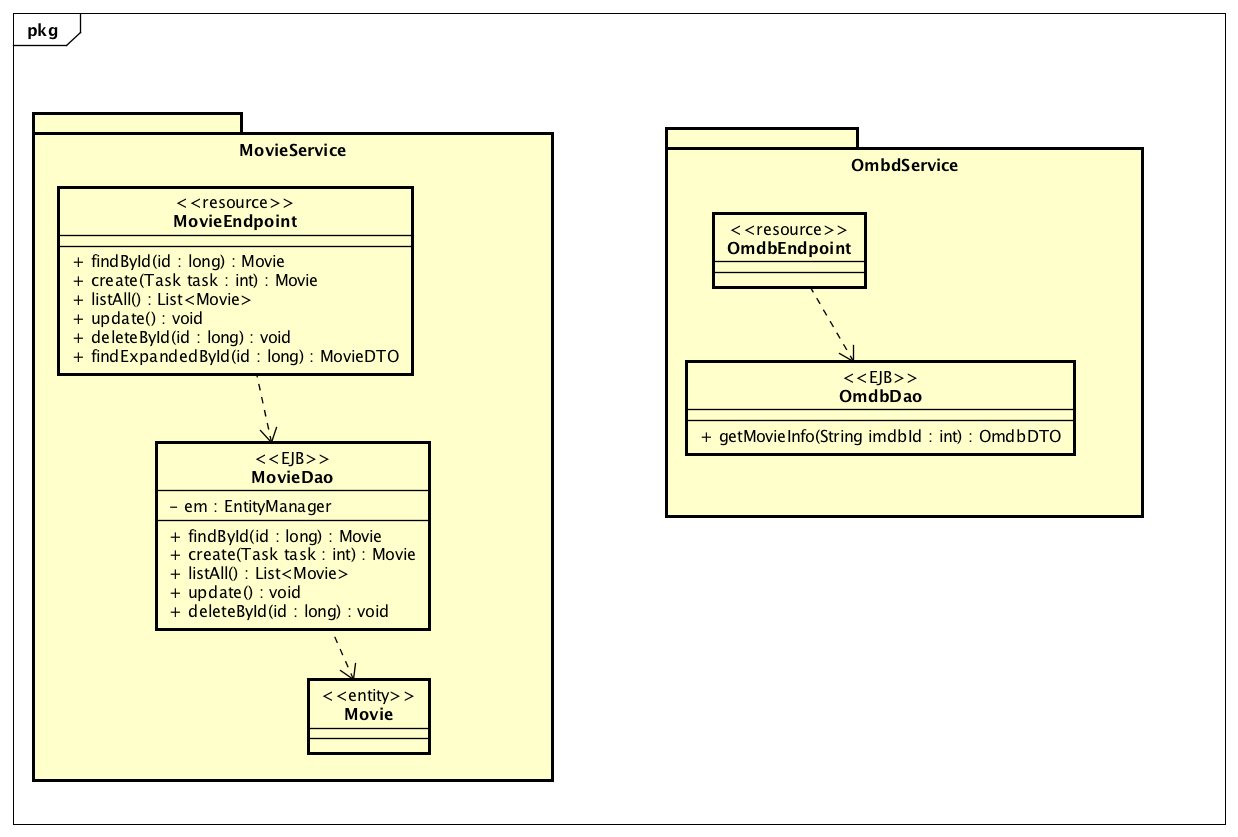

Using a software architect perspective, MicroProfile could be defined as a set of specifications (APIs) that many microservices chassis implement in order to solve common microservices problems through patterns, lessons learned from well known Java libraries, and proposals for collaboration between Java Enterprise vendors.

Subsequently if you think that it sounds a lot like Java EE, that's right, it's the same spirit but on the microservices space with participation for many vendors, including vendors from the Java EE space -e.g. Red Hat, IBM, Apache, Payara-.

The main value of this book is the willingness to go beyond the APIs, providing four structured sections that have different writing styles, for instance:

- Section 1: Cloud Native Applications - Written as a didactical resource to learn fundamentals of distributed systems with Cloud Native approach

- Section 2: MicroProfile Deep Dive - Written as a reference book with code snippets to understand the motivation, functionality and specific details in MicroProfile APIs and the relation between these APIs and common Microservices patterns -e.g. Remote procedure invocation, Health Check APIs, Externalized configuration-

- Section 3: End-to-End Project Using MicroProfile - Written as a narrative workshop with source code already available, to understand the development and deployment process of Cloud Native applications with MicroProfile

- Section 4: The standalone specifications - Written as a reference book with code snippets, it describes the development of newer specs that could be included in the future under MicroProfile's umbrella

First section

This was by far my favorite section. This section presents a well-balanced overview about Cloud Native practices like:

- Cloud Native definition

- The role of microservices and the differences with monoliths and FaaS

- Data consistency with event sourcing

- Best practices

- The role of MicroProfile

I enjoyed this section because my current role is to coach or act as a software architect at different companies, hence this is good material to explain the whole panorama to my coworkers and/or use this book as a quick reference.

My only concern with this section is about the final chapter, this chapter presents an application called IBM Stock Trader that (as you probably guess) IBM uses to demonstrate these concepts using MicroProfile with OpenLiberty. The chapter by itself presents an application that combines data sources, front/ends, Kubernetes; however the application would be useful only on Section 3 (at least that was my perception). Hence you will be going back to this section once you're executing the workshop.

Second section

This section divides the MicroProfile APIs in three levels, the division actually makes a lot of sense but was evident to me only during this review:

- The base APIs to create microservices (JAX-RS, CDI, JSON-P, JSON-B, Rest Client)

- Enhancing microservices (Config, Fault Tolerance, OpenAPI, JWT)

- Observing microservices (Health, Metrics, Tracing)

Additionally, section also describes the need for Docker and Kubernetes and how other common approaches -e.g. Service mesh- overlap with Microservice Chassis functionality.

Currently I'm a MicroProfile user, hence I knew most of the APIs, however I liked the actual description of the pattern/need that motivated the inclusion of the APIs, and the description could be useful for newcomers, along with the code snippets also available on GitHub.

If you're a Java/Jakarta EE developer you will find the CDI section a little bit superficial, indeed CDI by itself deserves a whole book/fascicle but this chapter gives the basics to start the development process.

Third section

This section switches the writing style to a workshop style. The first chapter is entirely focused on how to compile the sample microservices, how to fulfill the technical requirements and which MicroProfile APIs are used on every microservice.

You must notice that this is not a Java programming workshop, it's a Cloud Native workshop with ready to deploy microservices, hence the step by step guide is about compilation with Maven, Docker containers, scaling with Kubernetes, operators in Openshift, etc.

You could explore and change the source code if you wish, but the section is written in a "descriptive" way assuming the samples existence.

Fourth section

This section is pretty similar to the second section in the reference book style, hence it also describes the pattern/need that motivated the discussion of the API and code snippets. The main focus of this section is GraphQL, Reactive Approaches and distributed transactions with LRA.

This section will probably change in future editions of the book because at the time of publishing the Cloud Native Container Foundation revealed that some initiatives about observability will be integrated in the OpenTelemetry project and MicroProfile it's discussing their future approach.

Things that could be improved

As any review this is the most difficult section to write, but I think that a second edition should:

- Extend the CDI section due its foundational status

- Switch the order of the Stock Tracer presentation

- Extend the data consistency discussión -e.g. CQRS, Event Sourcing-, hopefully with advances from LRA

The last item is mostly a wish since I'm always in the need for better ways to integrate this common practices with buses like Kafka or Camel using MicroProfile. I know that some implementations -e.g. Helidon, Quarkus- already have extensions for Kafka or Camel, but the data consistency is an entire discussion about patterns, tools and best practices.

Who should read this book?

- Java developers with strong SE foundations and familiarity with the enterprise space (Spring/Java EE)

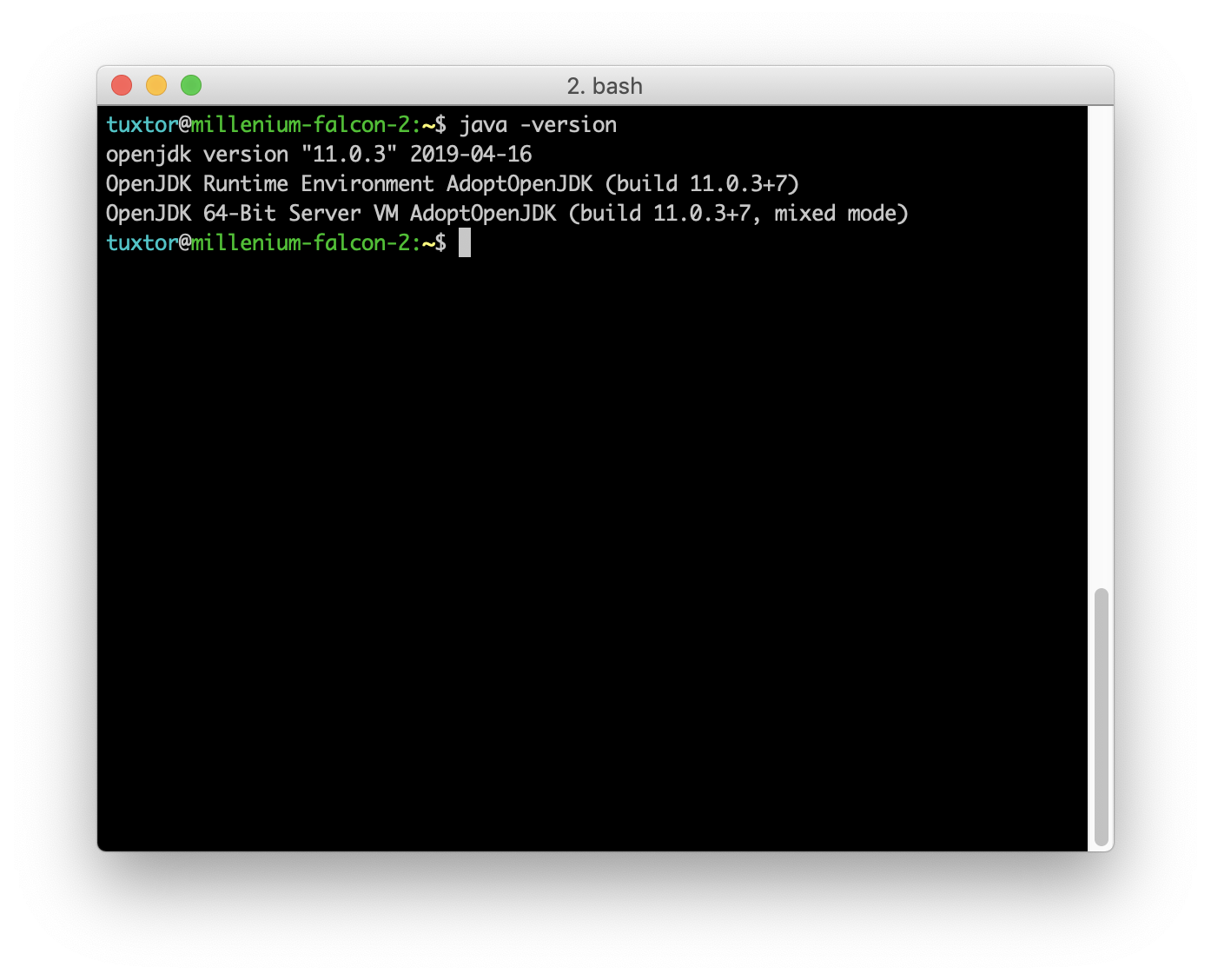

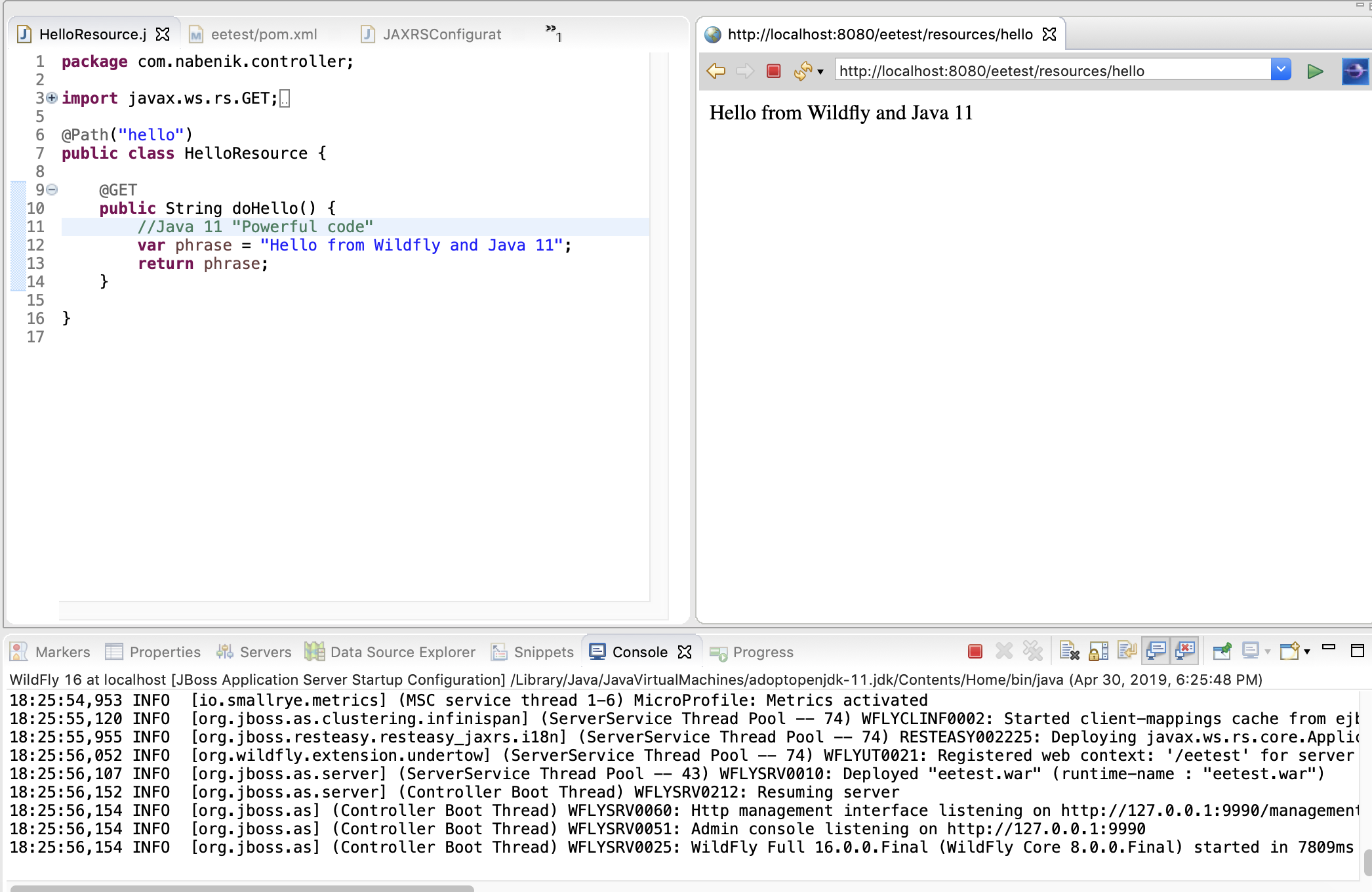

General considerations on updating Enterprise Java projects from Java 8 to Java 11

23 September 2020

The purpose of this article is to consolidate all difficulties and solutions that I've encountered while updating Java EE projects from Java 8 to Java 11 (and beyond). It's a known fact that Java 11 has a lot of new characteristics that are revolutionizing how Java is used to create applications, despite being problematic under certain conditions.

This article is focused on Java/Jakarta EE but it could be used as basis for other enterprise Java frameworks and libraries migrations.

Is it possible to update Java EE/MicroProfile projects from Java 8 to Java 11?

Yes, absolutely. My team has been able to bump at least two mature enterprise applications with more than three years in development, being:

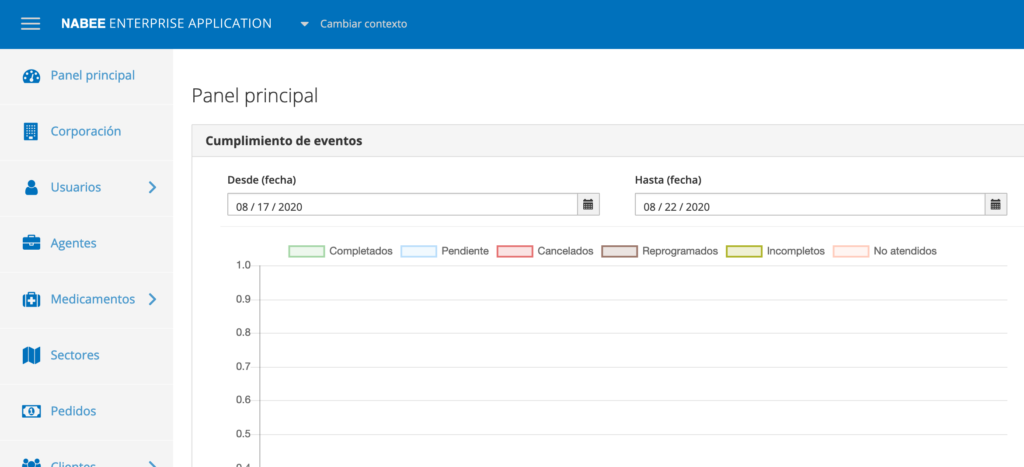

A Management Information System (MIS)

- Time for migration: 1 week

- Modules: 9 EJB, 1 WAR, 1 EAR

- Classes: 671 and counting

- Code lines: 39480

- Project's beginning: 2014

- Original platform: Java 7, Wildfly 8, Java EE 7

- Current platform: Java 11, Wildfly 17, Jakarta EE 8, MicroProfile 3.0

- Web client: Angular

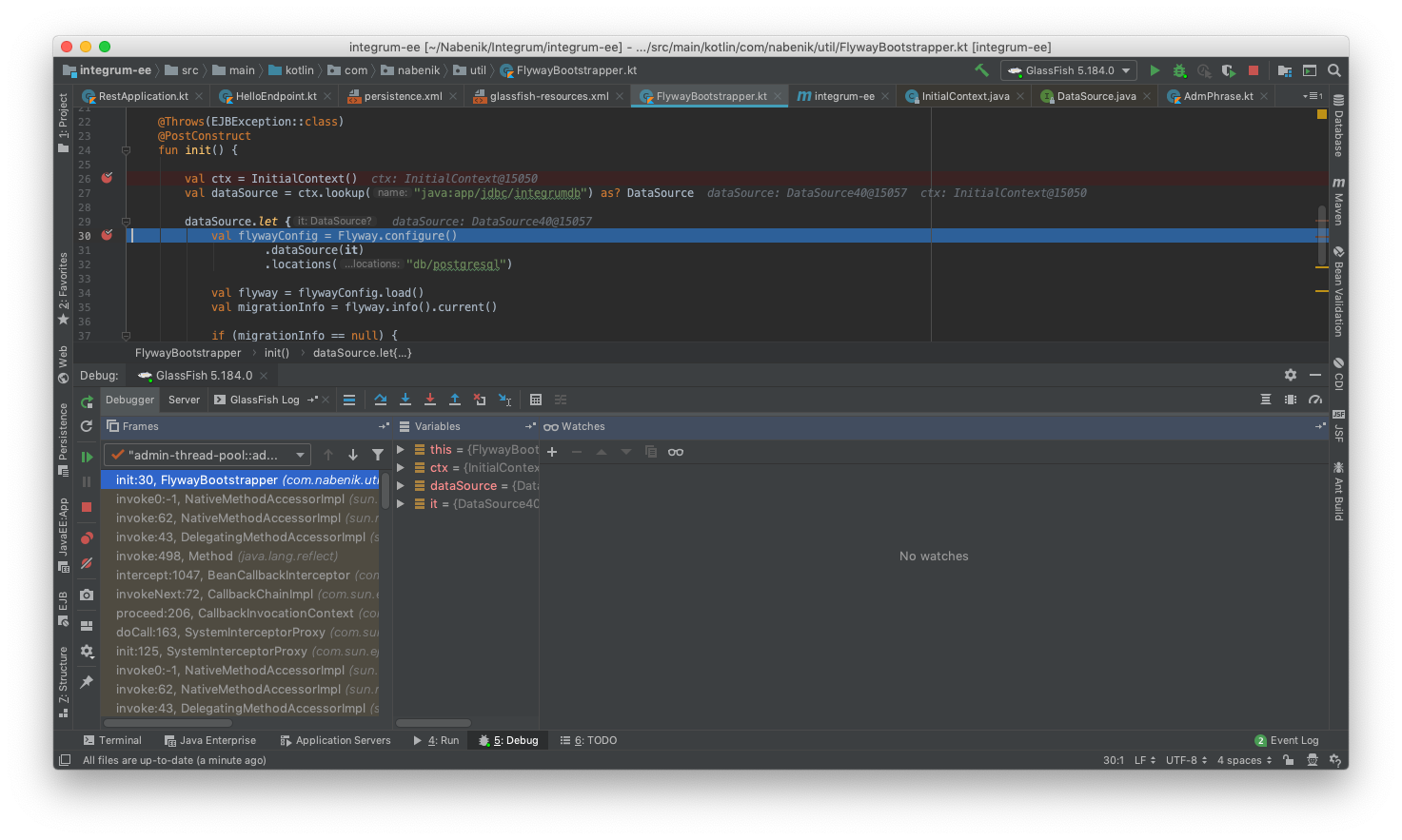

Mobile POS and Geo-fence

- Time for migration: 3 week

- Modules: 5 WAR/MicroServices

- Classes: 348 and counting

- Code lines: 17160

- Project's beginning: 2017

- Original platform: Java 8, Glassfish 4, Java EE 7

- Current platform: Java 11, Payara (Micro) 5, Jakarta EE 8, MicroProfile 3.2

- Web client: Angular

Why should I ever consider migrating to Java 11?

As everything in IT the answer is "It depends . . .". However there are a couple of good reasons to do it:

- Reduce attack surface by updating project dependencies proactively

- Reduce technical debt and most importantly, prepare your project for the new and dynamic Java world

- Take advantage of performance improvements on new JVM versions

- Take advantage from improvements of Java as programming language

- Sleep better by having a more secure, efficient and quality product

Why Java updates from Java 8 to Java 11 are considered difficult?

From my experience with many teams, because of this:

Changes in Java release cadence

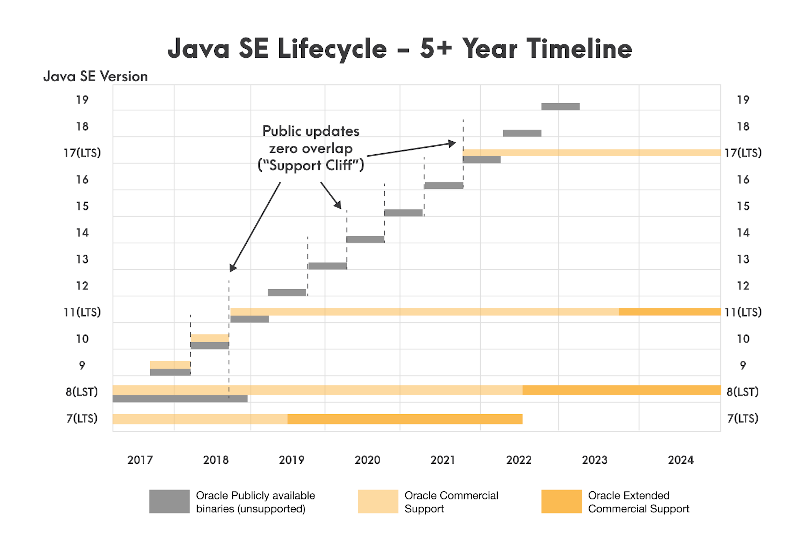

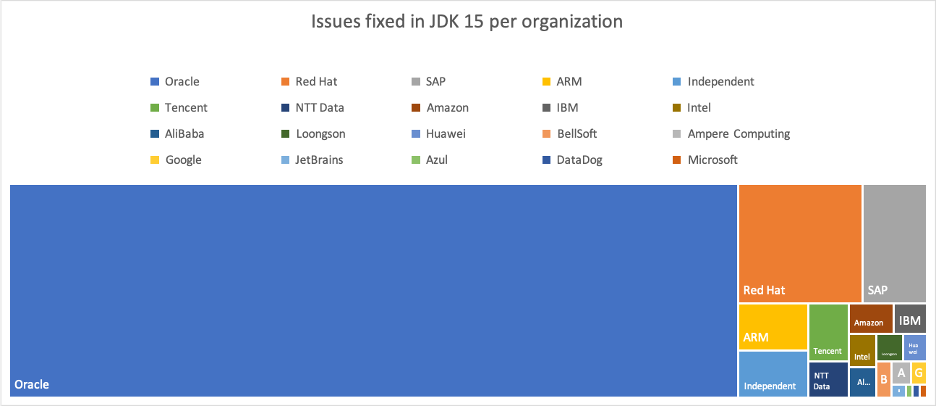

Currently, there are two big branches in JVMs release model:

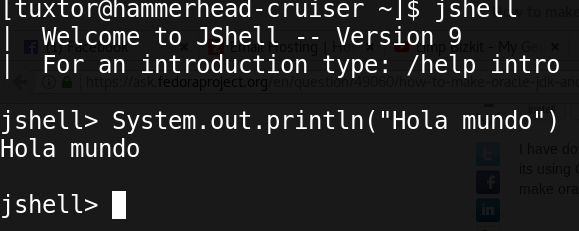

- Java LTS: With a fixed lifetime (3 years) for long term support, being Java 11 the latest one

- Java current: A fast-paced Java version that is available every 6 months over a predictable calendar, being Java 15 the latest (at least at the time of publishing for this article)

The rationale behind this decision is that Java needed dynamism in providing new characteristics to the language, API and JVM, which I really agree.

Nevertheless, it is a know fact that most enterprise frameworks seek and use Java for stability. Consequently, most of these frameworks target Java 11 as "certified" Java Virtual Machine for deployments.

Usage of internal APIs

Errata: I fixed and simplified this section following an interesting discussion on reddit :)

Java 9 introduced changes in internal classes that weren't meant for usage outside JVM, preventing/breaking the functionality of popular libraries that made use of these internals -e.g. Hibernate, ASM, Hazelcast- to gain performance.

Hence to avoid it, internal APIs in JDK 9 are inaccessible at compile time (but accesible with --add-exports), remaining accessible if they were in JDK 8 but in a future release they will become inaccessible, in the long run this change will reduce the costs borne by the maintainers of the JDK itself and by the maintainers of libraries and applications that, knowingly or not, make use of these internal APIs.

Finally, during the introduction of JEP-260 internal APIs were classified as critical and non-critical, consequently critical internal APIs for which replacements are introduced in JDK 9 are deprecated in JDK 9 and will be either encapsulated or removed in a future release.

However, you are inside the danger zone if:

- Your project compiles against dependencies pre-Java 9 depending on critical internals

- You bundle dependencies pre-Java 9 depending on critical internals

- You run your applications over a runtime -e.g. Application Servers- that include pre Java 9 transitive dependencies

Any of these situations means that your application has a probability of not being compatible with JVMs above Java 8. At least not without updating your dependencies, which also could uncover breaking changes in library APIs creating mandatory refactors.

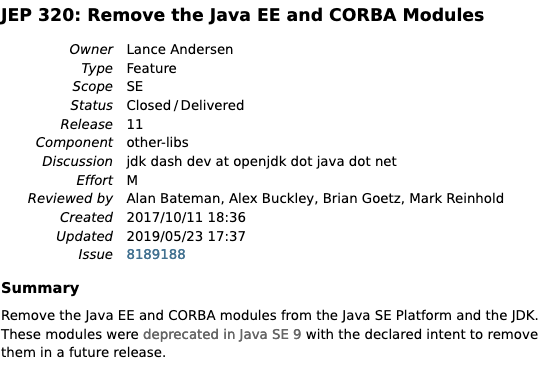

Removal of CORBA and Java EE modules from OpenJDK

Also during Java 9 release, many Java EE and CORBA modules were marked as deprecated, being effectively removed at Java 11, specifically:

- java.xml.ws (JAX-WS, plus the related technologies SAAJ and Web Services Metadata)

- java.xml.bind (JAXB)

- java.activation (JAF)

- java.xml.ws.annotation (Common Annotations)

- java.corba (CORBA)

- java.transaction (JTA)

- java.se.ee (Aggregator module for the six modules above)

- jdk.xml.ws (Tools for JAX-WS)

- jdk.xml.bind (Tools for JAXB)

As JEP-320 states, many of these modules were included in Java 6 as a convenience to generate/support SOAP Web Services. But these modules eventually took off as independent projects already available at Maven Central. Therefore it is necessary to include these as dependencies if our project implements services with JAX-WS and/or depends on any library/utility that was included previously.

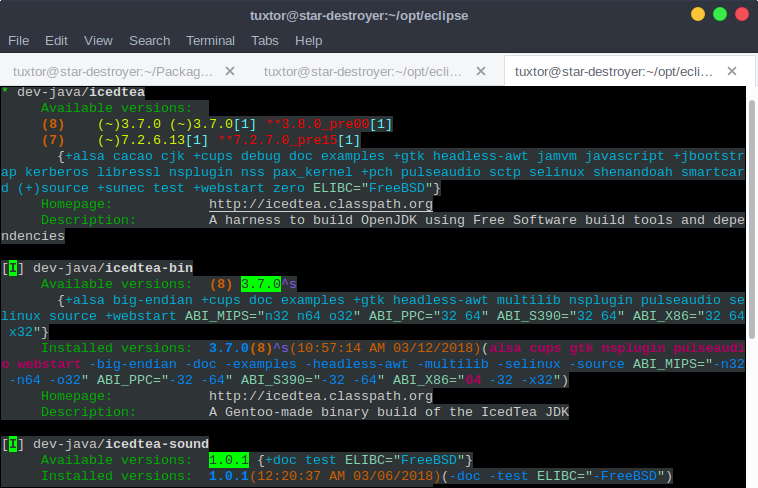

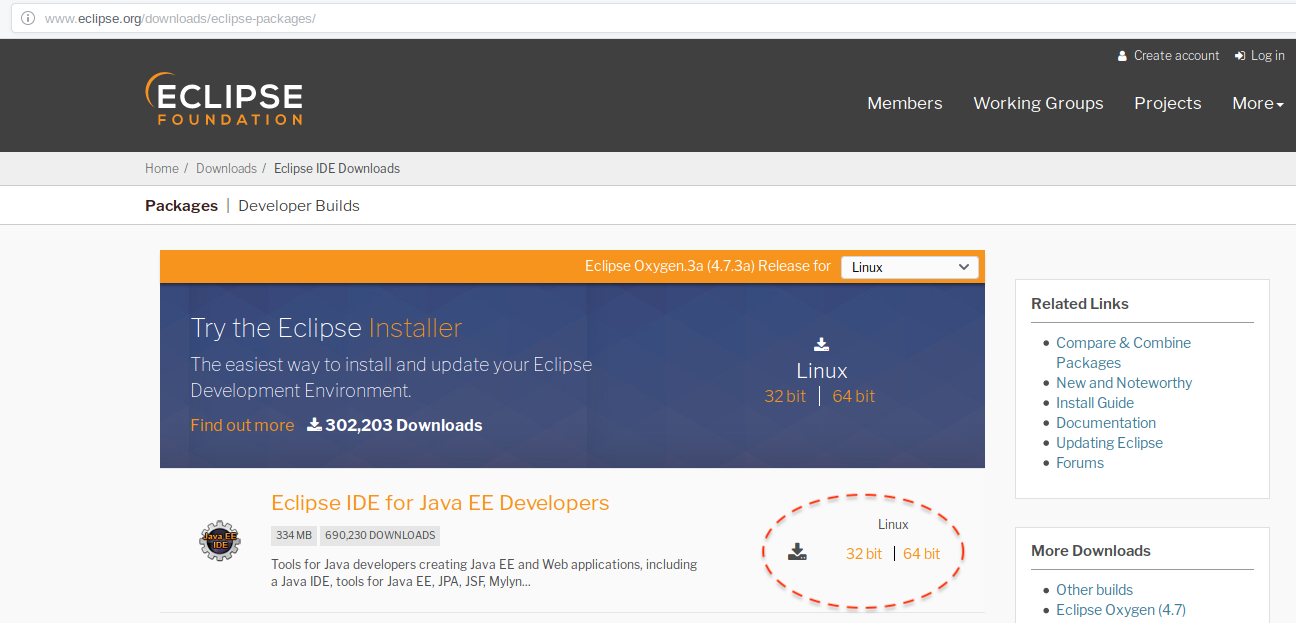

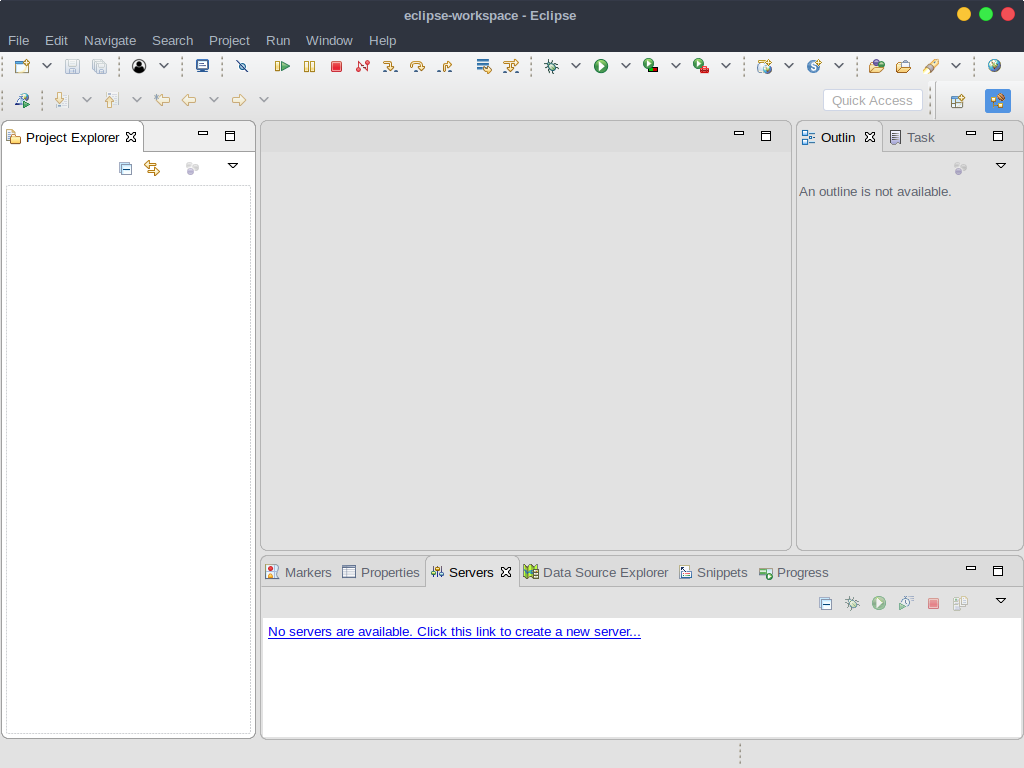

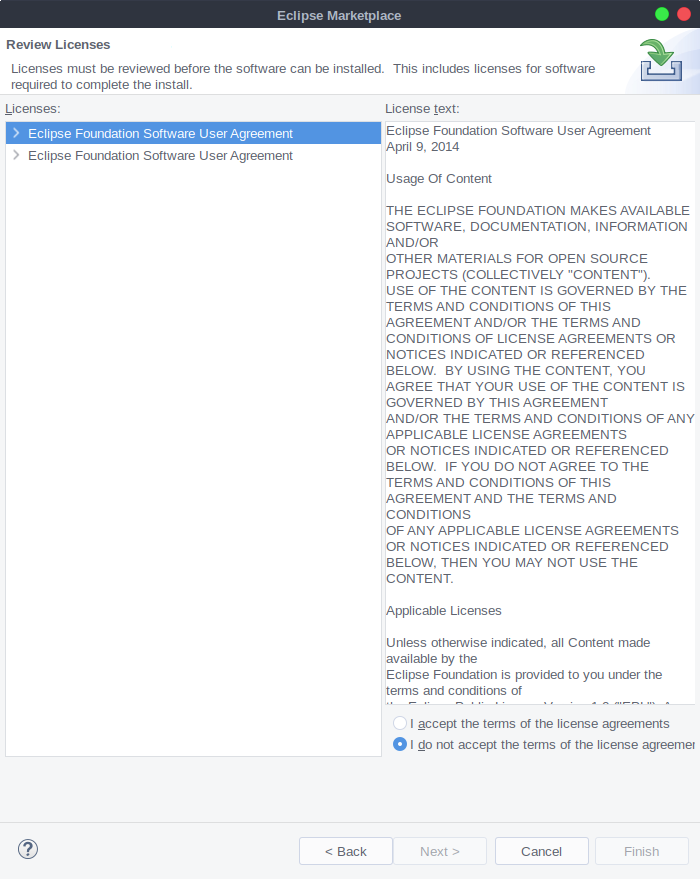

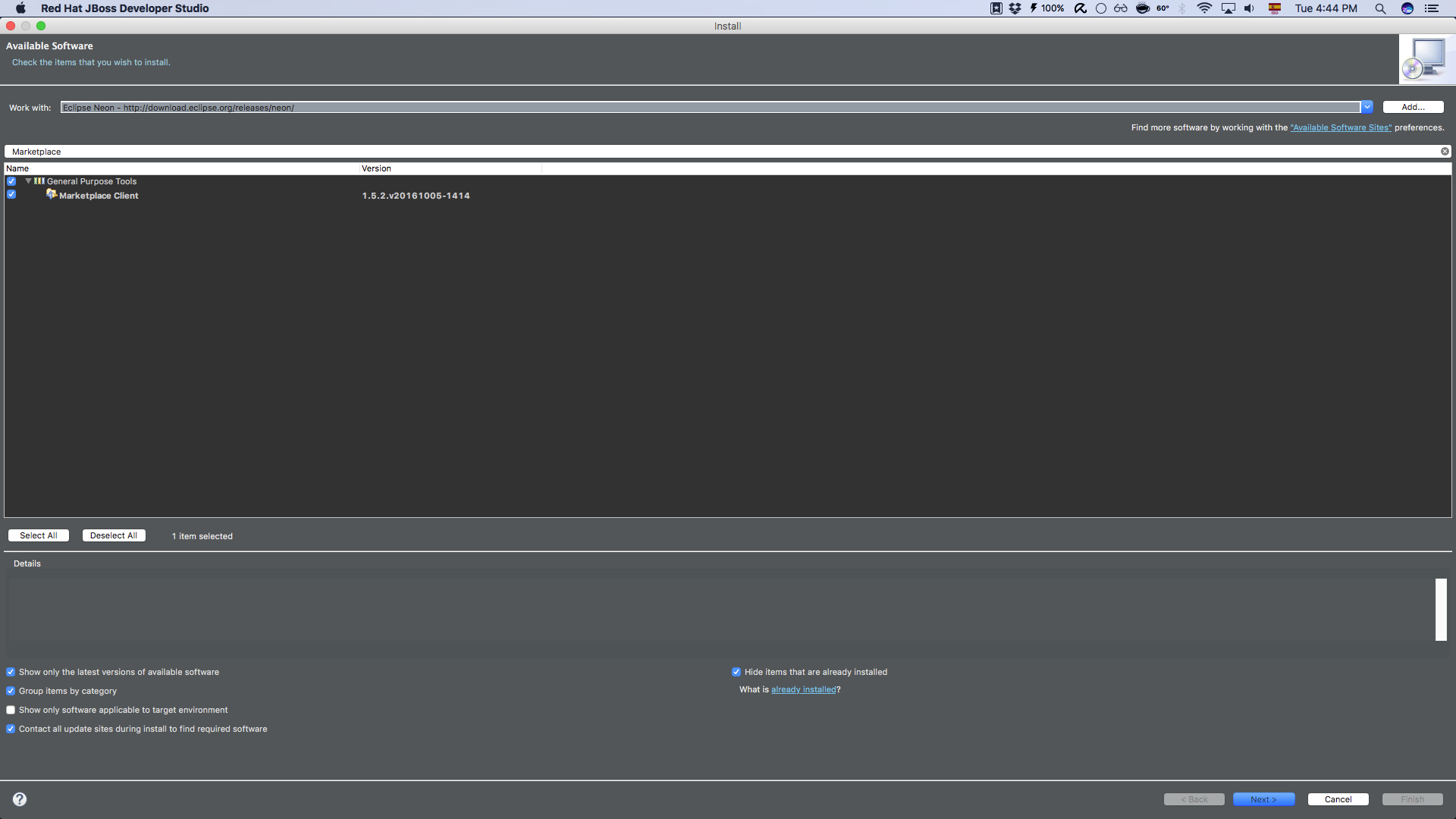

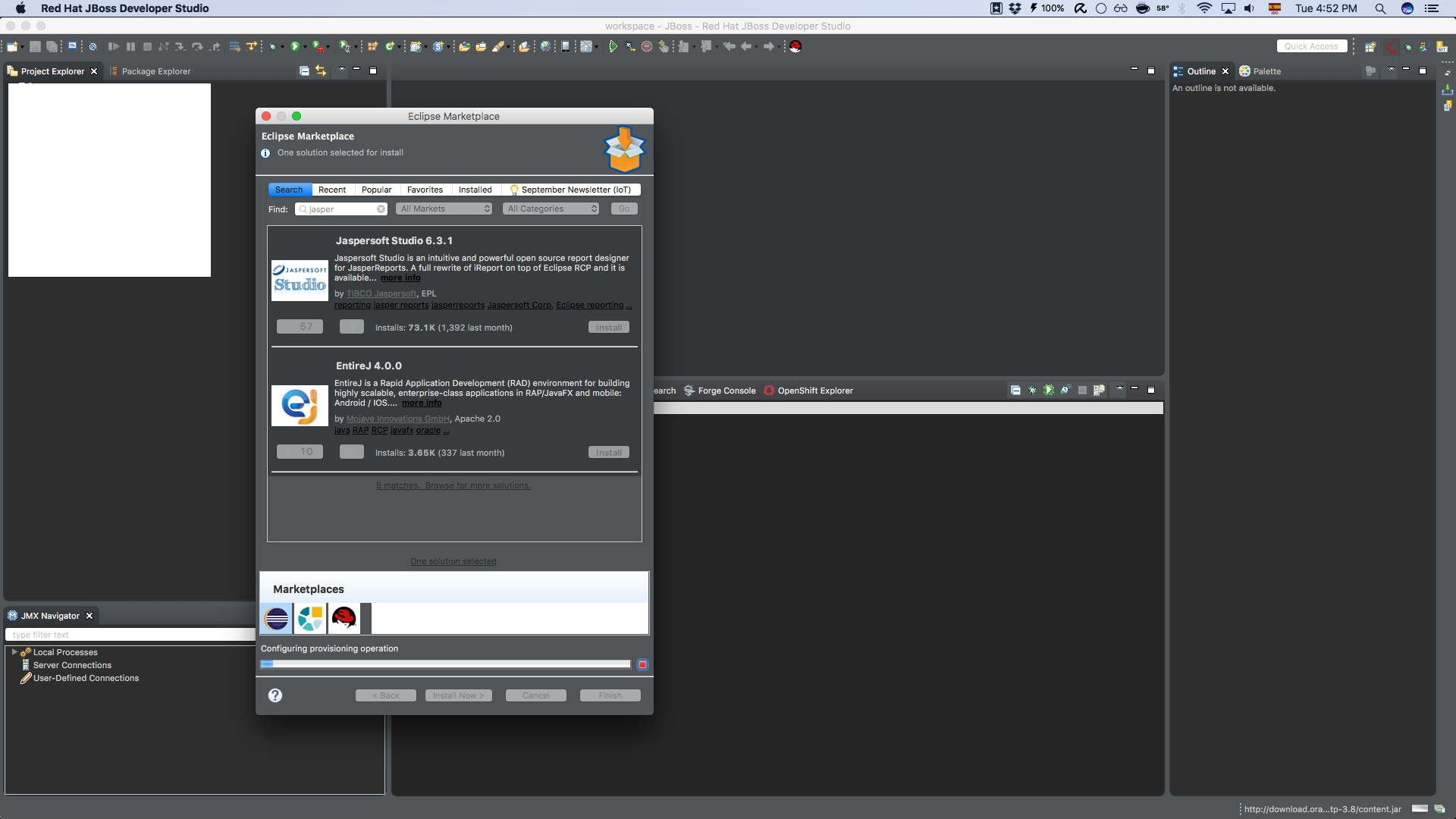

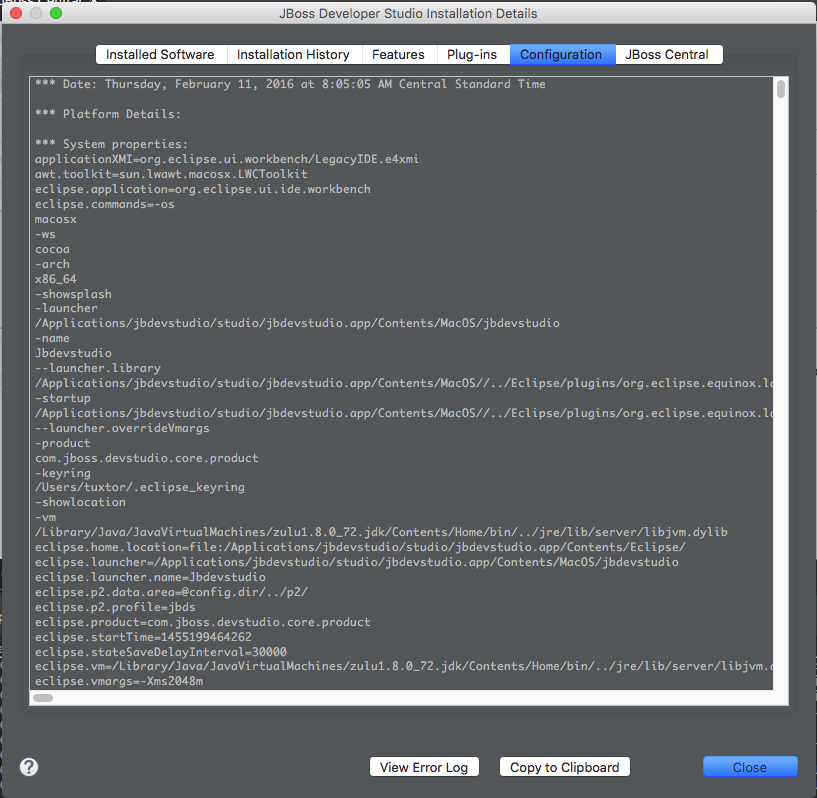

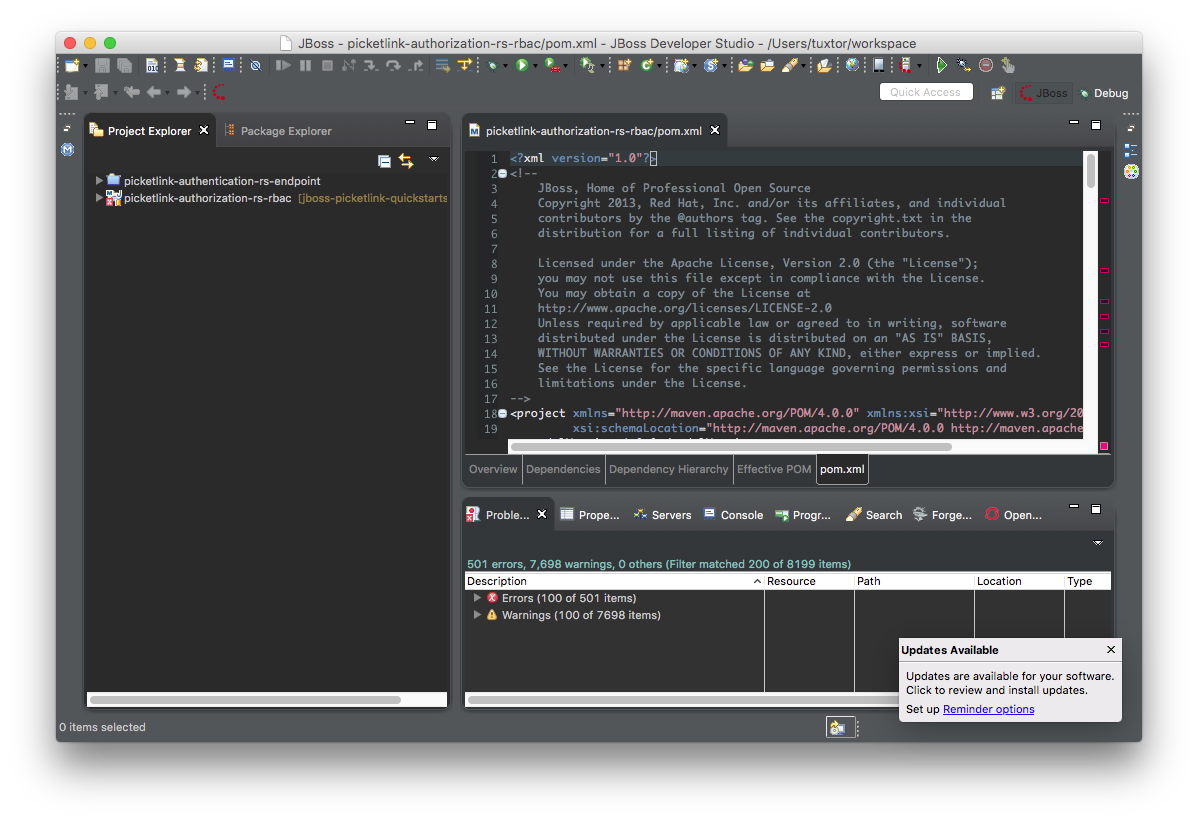

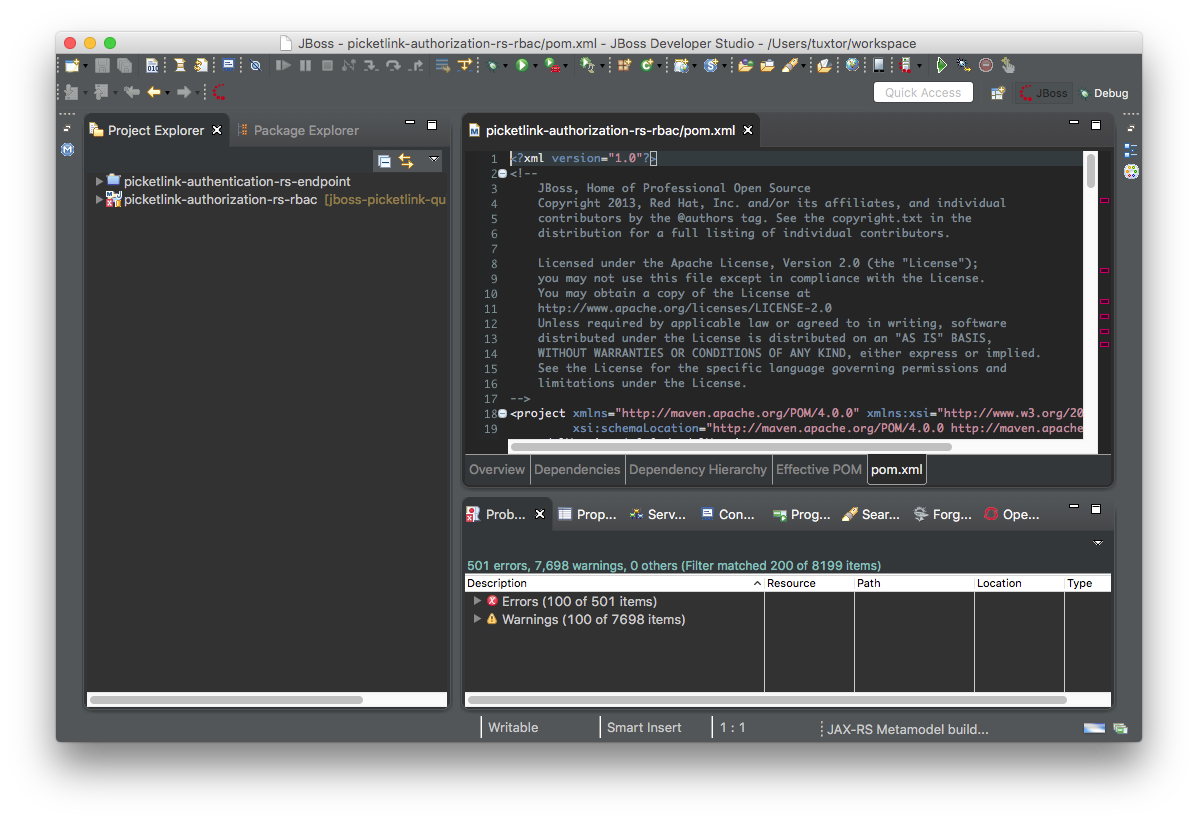

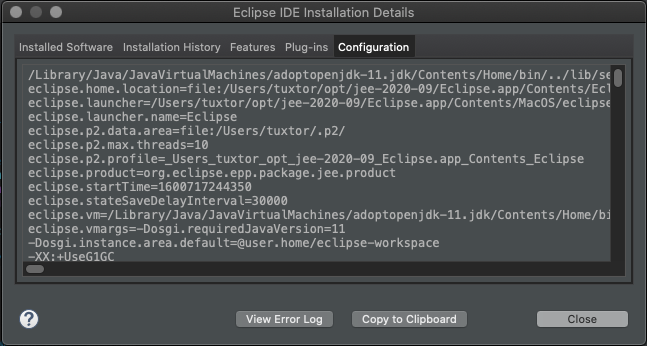

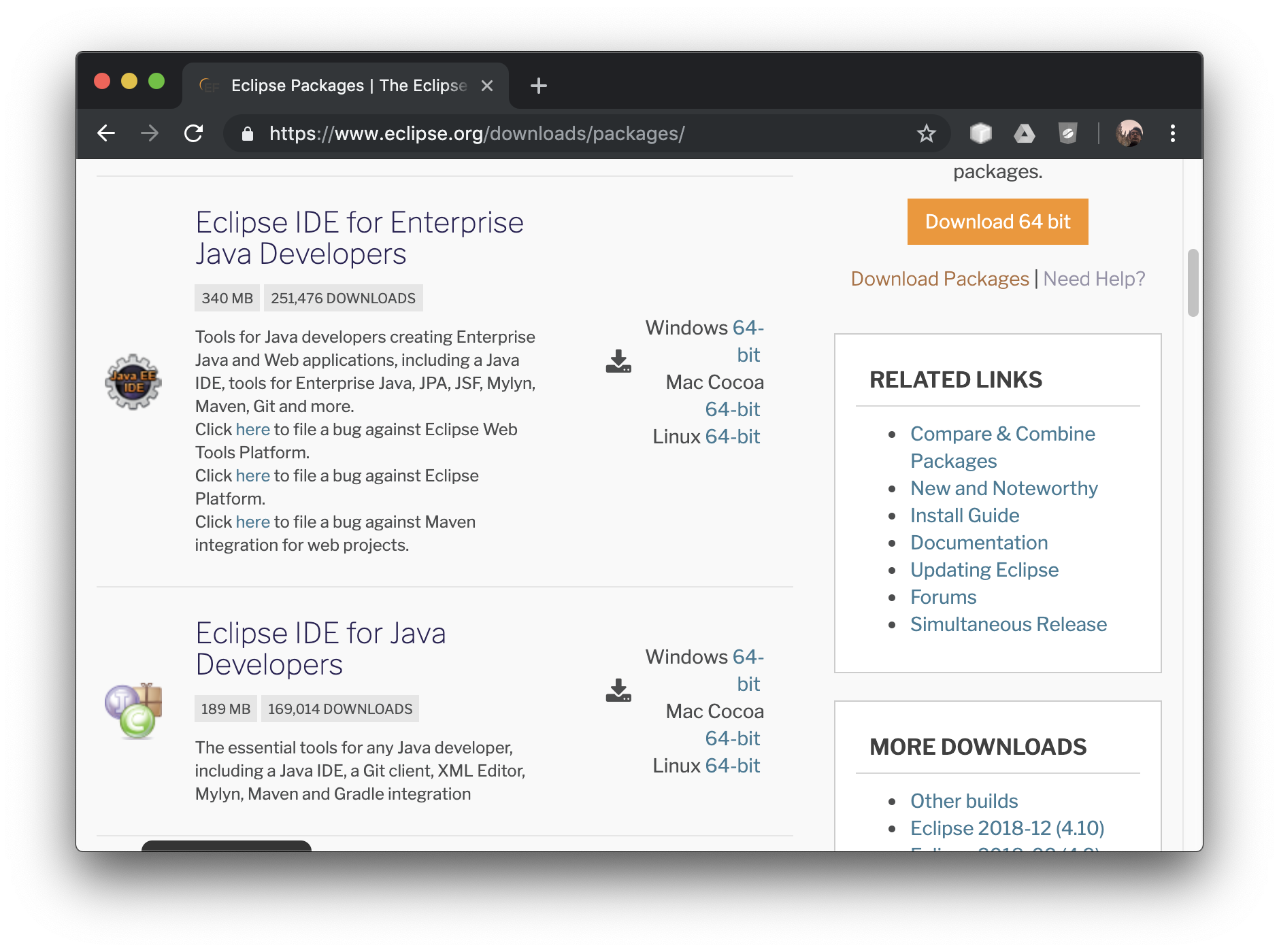

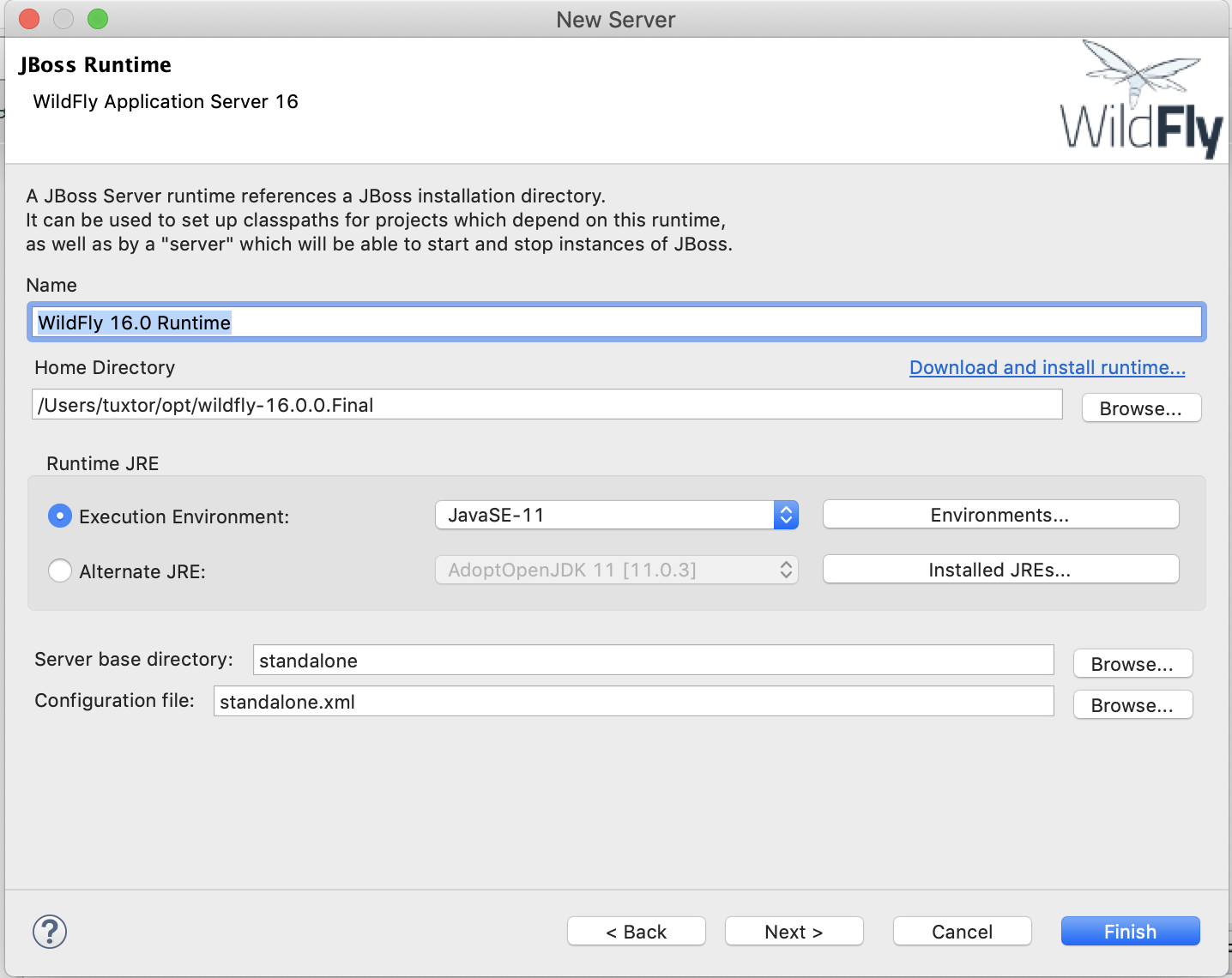

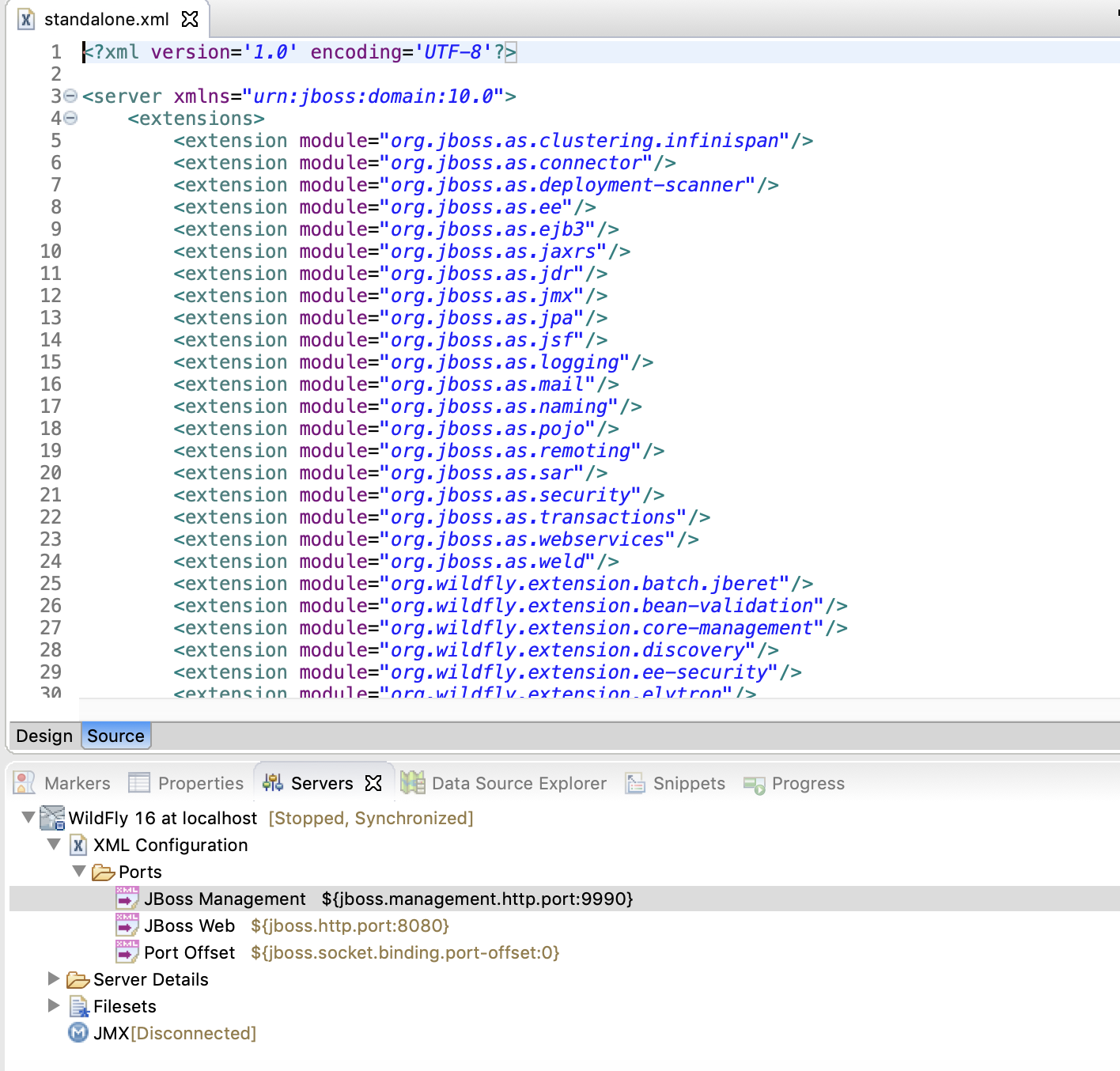

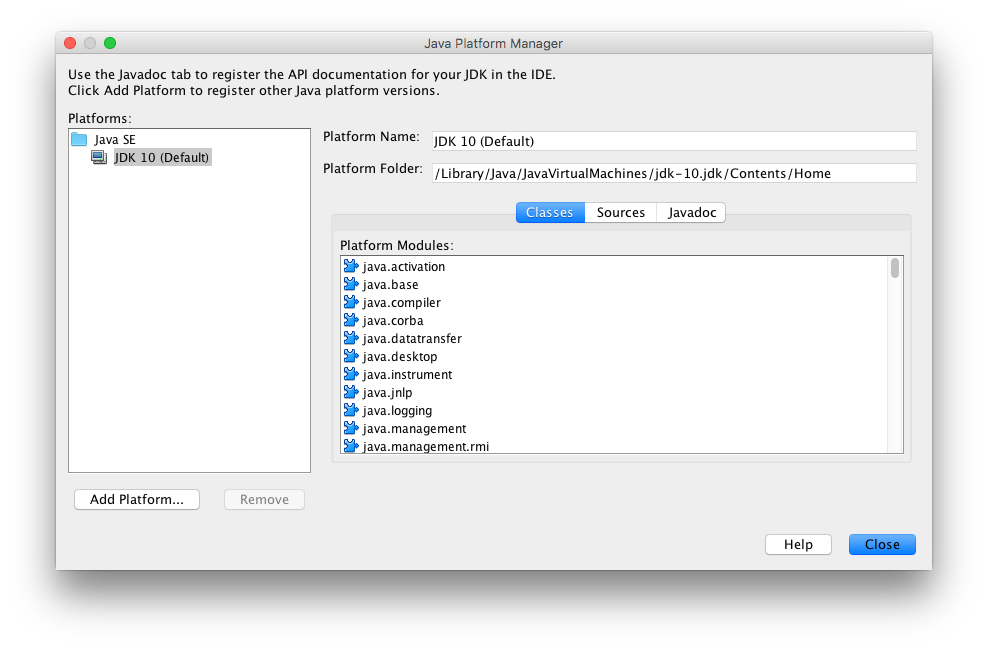

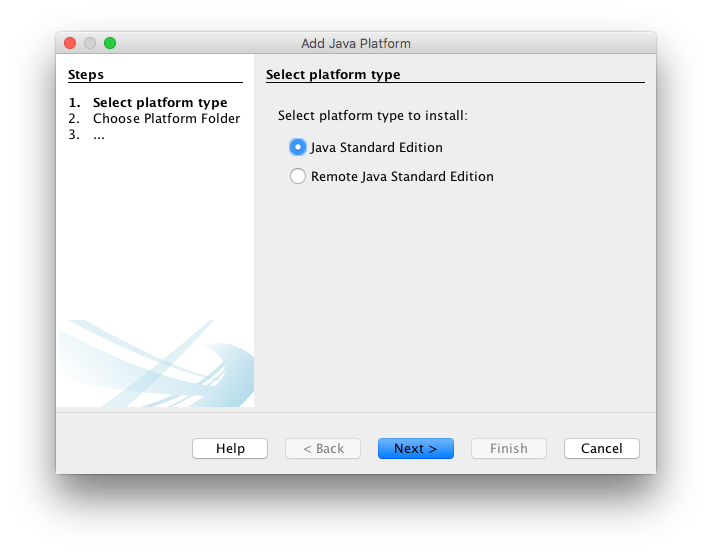

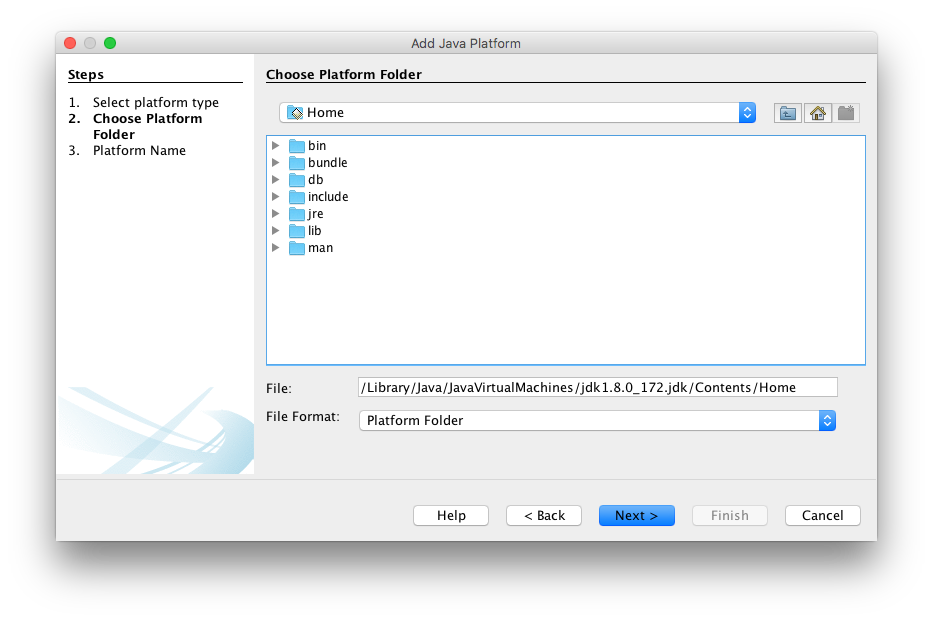

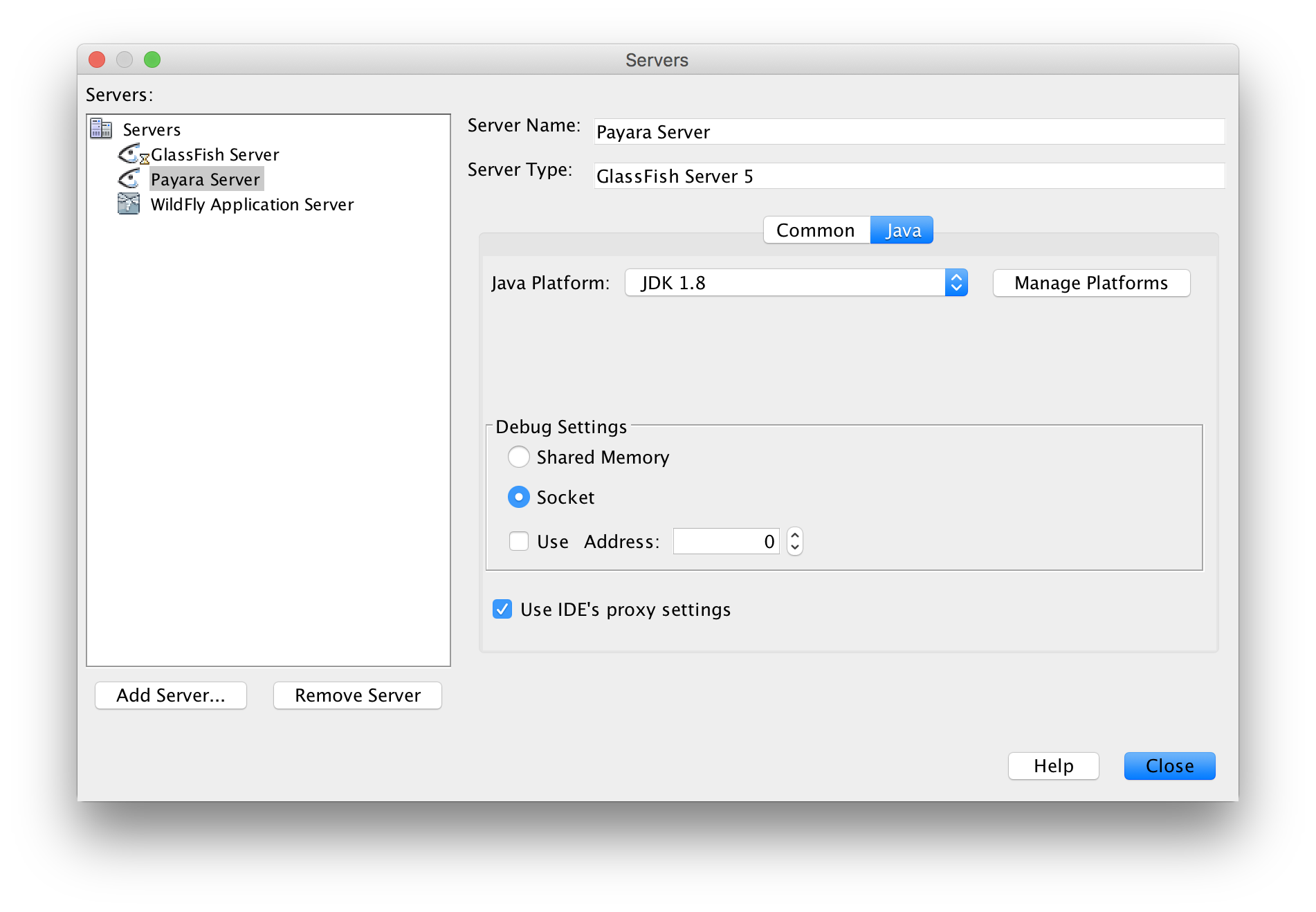

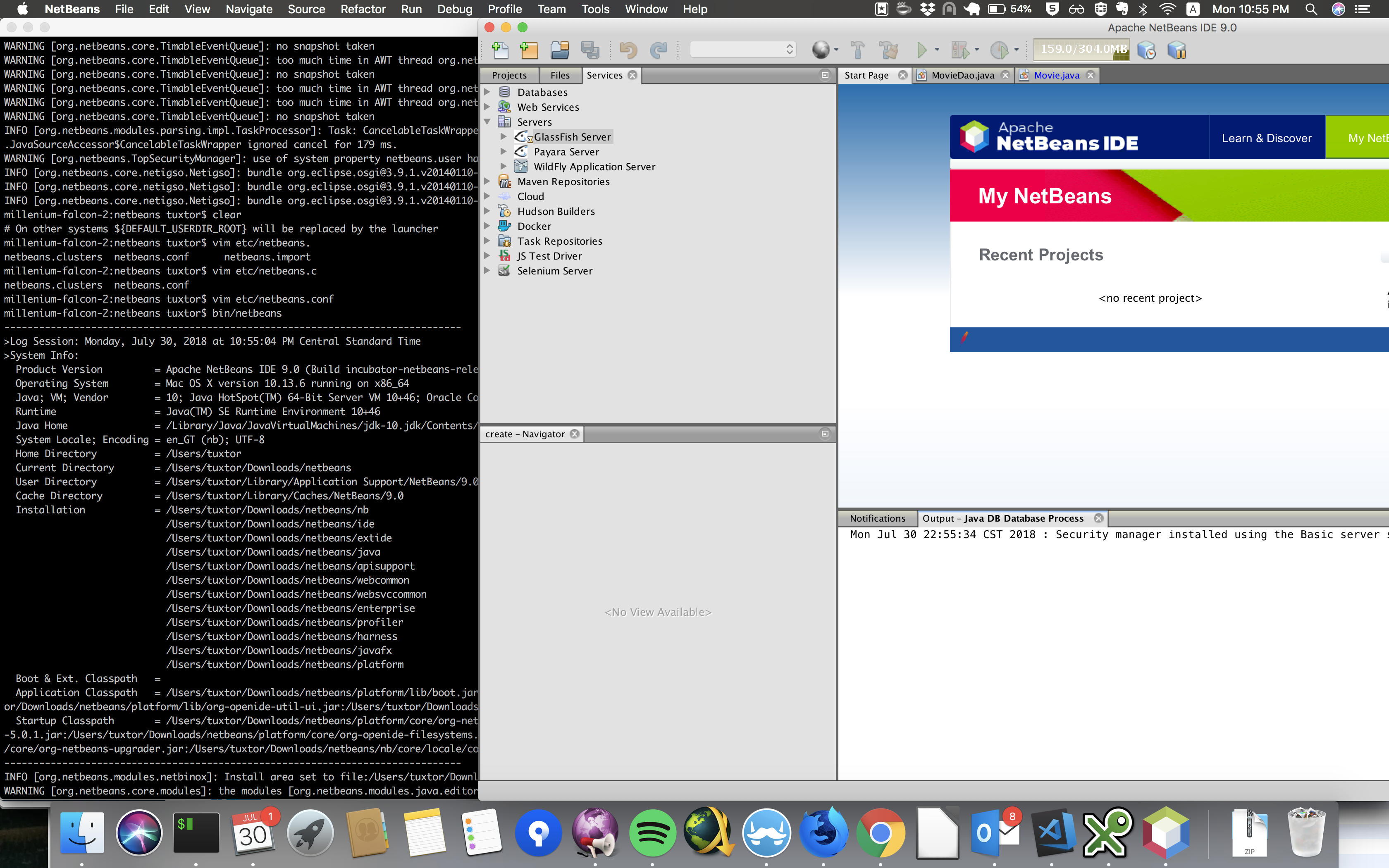

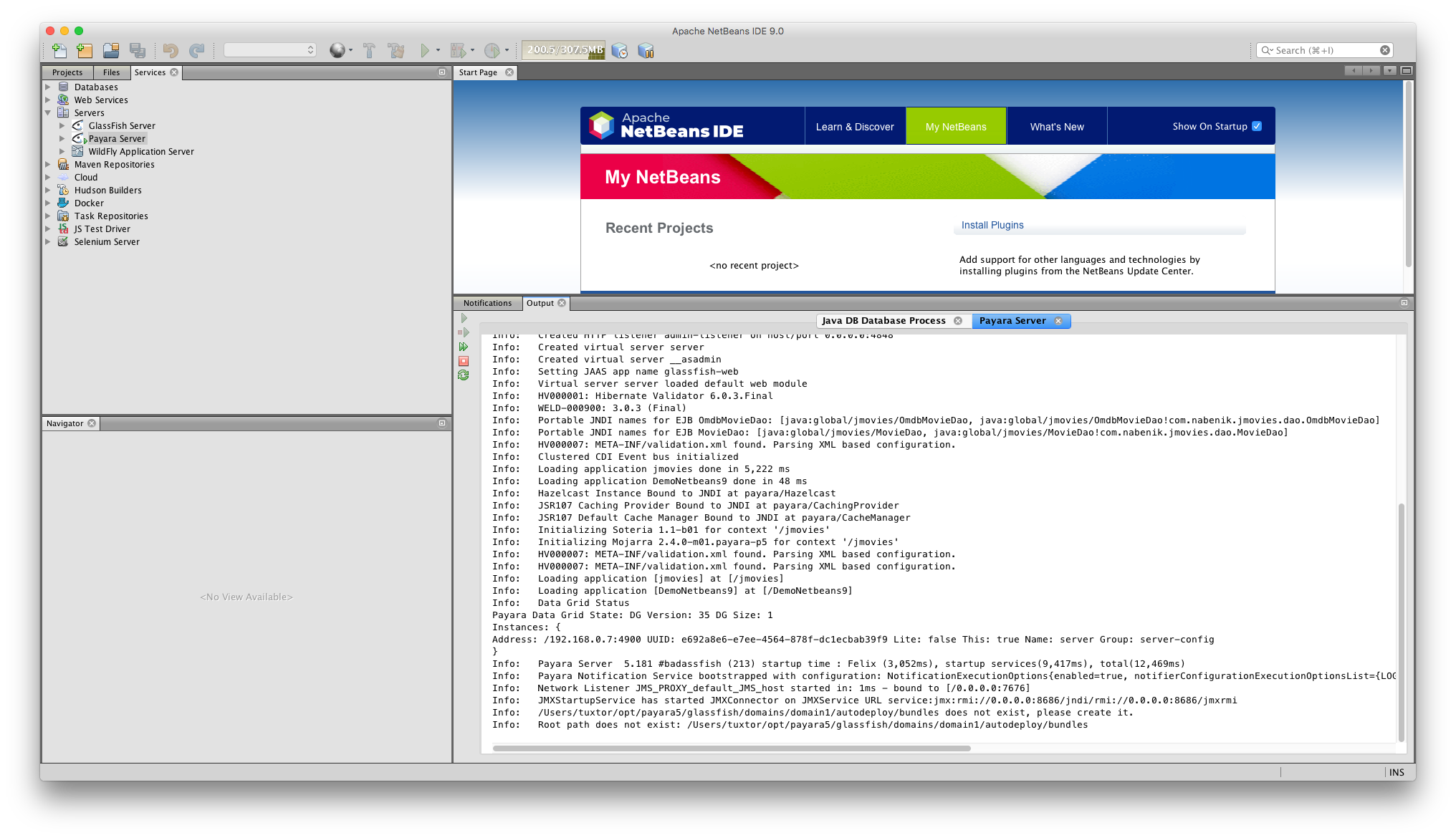

IDEs and application servers

In the same way as libraries, Java IDEs had to catch-up with the introduction of Java 9 at least in three levels:

- IDEs as Java programs should be compatible with Java Modules

- IDEs should support new Java versions as programming language -i.e. Incremental compilation, linting, text analysis, modules-

- IDEs are also basis for an ecosystem of plugins that are developed independently. Hence if plugins have any transitive dependency with issues over JPMS, these also have to be updated

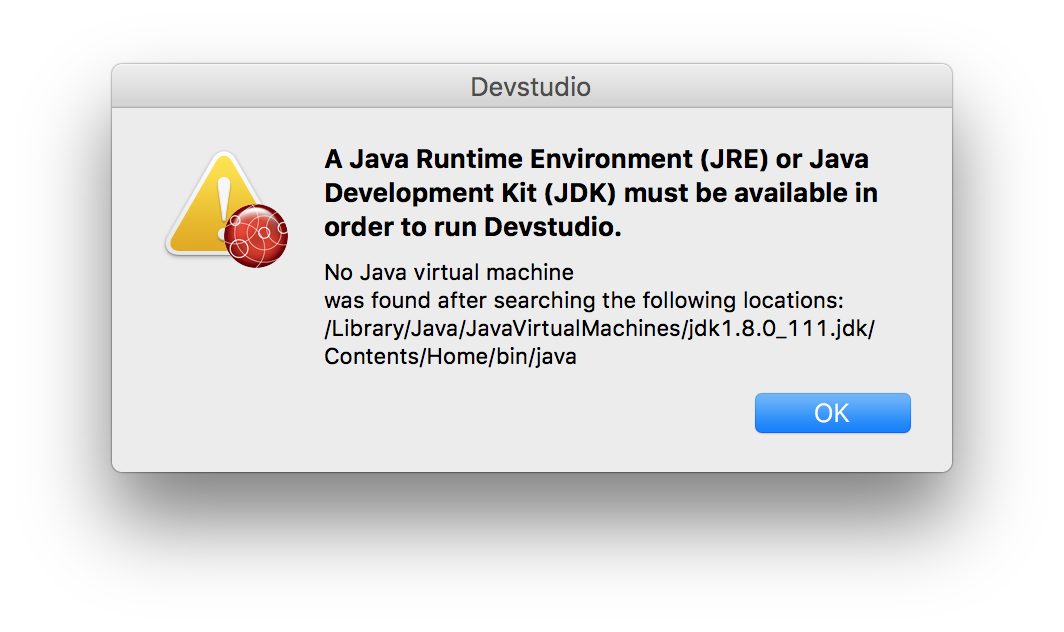

Overall, none of the Java IDEs guaranteed that plugins will work in JVMs above Java 8. Therefore you could possibly run your IDE over Java 11 but a legacy/deprecated plugin could prevent you to run your application.

How do I update?

You must notice that Java 9 launched three years ago, hence the situations previously described are mostly covered. However you should do the following verifications and actions to prevent failures in the process:

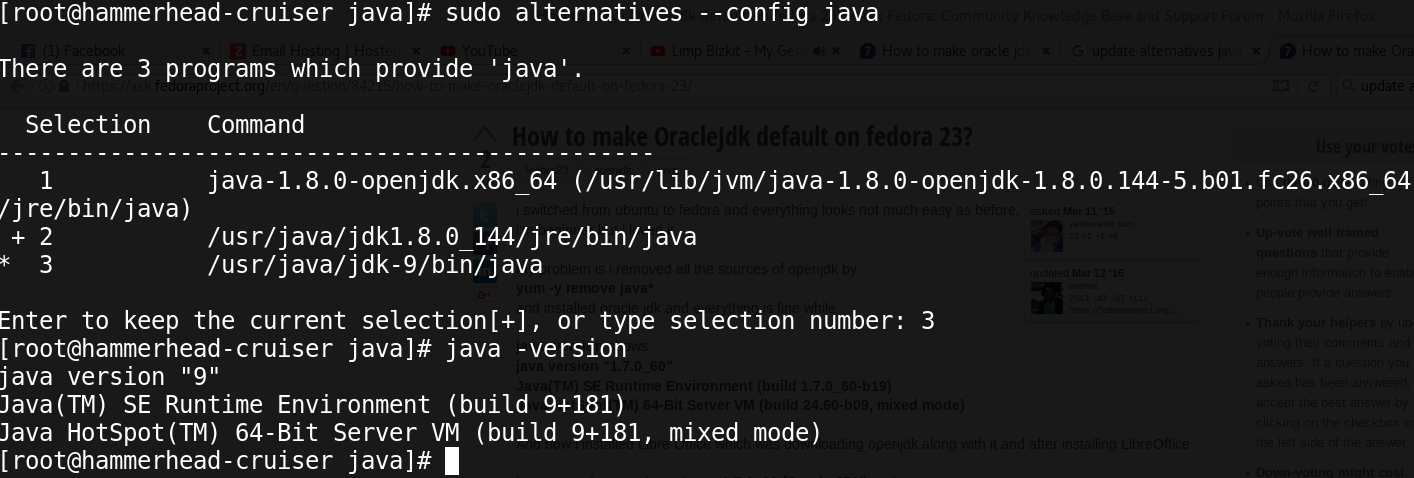

- Verify server compatibility

- Verify if you need a specific JVM due support contracts and conditions

- Configure your development environment to support multiple JVMs during the migration process

- Verify your IDE compatibility and update

- Update Maven and Maven projects

- Update dependencies

- Include Java/Jakarta EE dependencies

- Execute multiple JVMs in production

Verify server compatibility

Mike Luikides from O'Reilly affirms that there are two types of programmers. In one hand we have the low level programmers that create tools as libraries or frameworks, and on the other hand we have developers that use these tools to create experience, products and services.

Java Enterprise is mostly on the second hand, the "productive world" resting in giant's shoulders. That's why you should check first if your runtime or framework already has a version compatible with Java 11, and also if you have the time/decision power to proceed with an update. If not, any other action from this point is useless.

The good news is that most of the popular servers in enterprise Java world are already compatible, like:

- Apache Tomcat

- Apache Maven

- Spring

- Oracle WebLogic

- Payara

- Apache TomEE

... among others

If you happen to depend on non compatible runtimes, this is where the road ends unless you support the maintainer to update it.

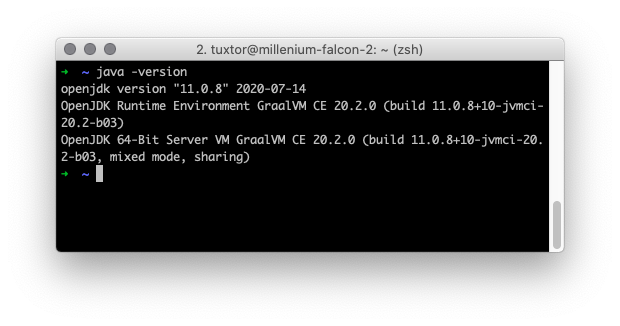

Verify if you need an specific JVM

On a non-technical side, under support contract conditions you could be obligated to use an specific JVM version.

OpenJDK by itself is an open source project receiving contributions from many companies (being Oracle the most active contributor), but nothing prevents any other company to compile, pack and TCK other JVM distribution as demonstrated by Amazon Correto, Azul Zulu, Liberica JDK, etc.

In short, there is software that technically could run over any JVM distribution and version, but the support contract will ask you for a particular version. For instance:

- WebLogic is only certified for Oracle HotSpot and GraalVM

- SAP Netweaver includes by itself SAP JVM

Configure your development environment to support multiple JDKs

Since the jump from Java 8 to Java 11 is mostly an experimentation process, it is a good idea to install multiple JVMs on the development computer, being SDKMan and jEnv the common options:

SDKMan

SDKMan is available for Unix-Like environments (Linux, Mac OS, Cygwin, BSD) and as the name suggests, acts as a Java tools package manager.

It helps to install and manage JVM ecosystem tools -e.g. Maven, Gradle, Leiningen- and also multiple JDK installations from different providers.

jEnv

Also available for Unix-Like environments (Linux, Mac OS, Cygwin, BSD), jEnv is basically a script to manage and switch multiple JVM installations per system, user and shell.

If you happen to install JDKs from different sources -e.g Homebrew, Linux Repo, Oracle Technology Network- it is a good choice.

Finally, if you use Windows the common alternative is to automate the switch using .bat files however I would appreciate any other suggestion since I don't use Windows so often.

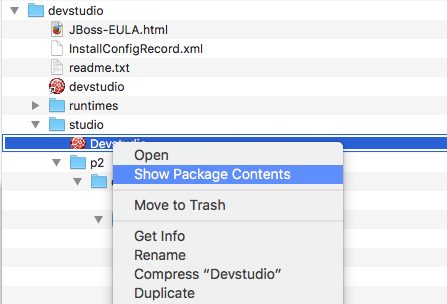

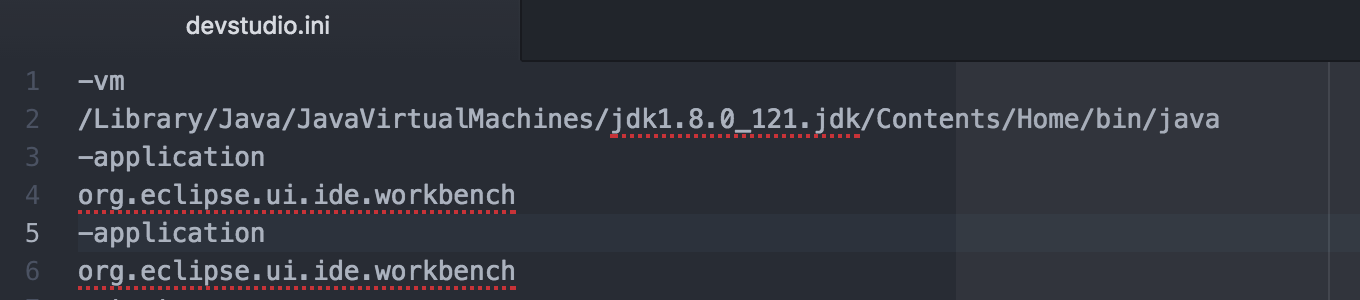

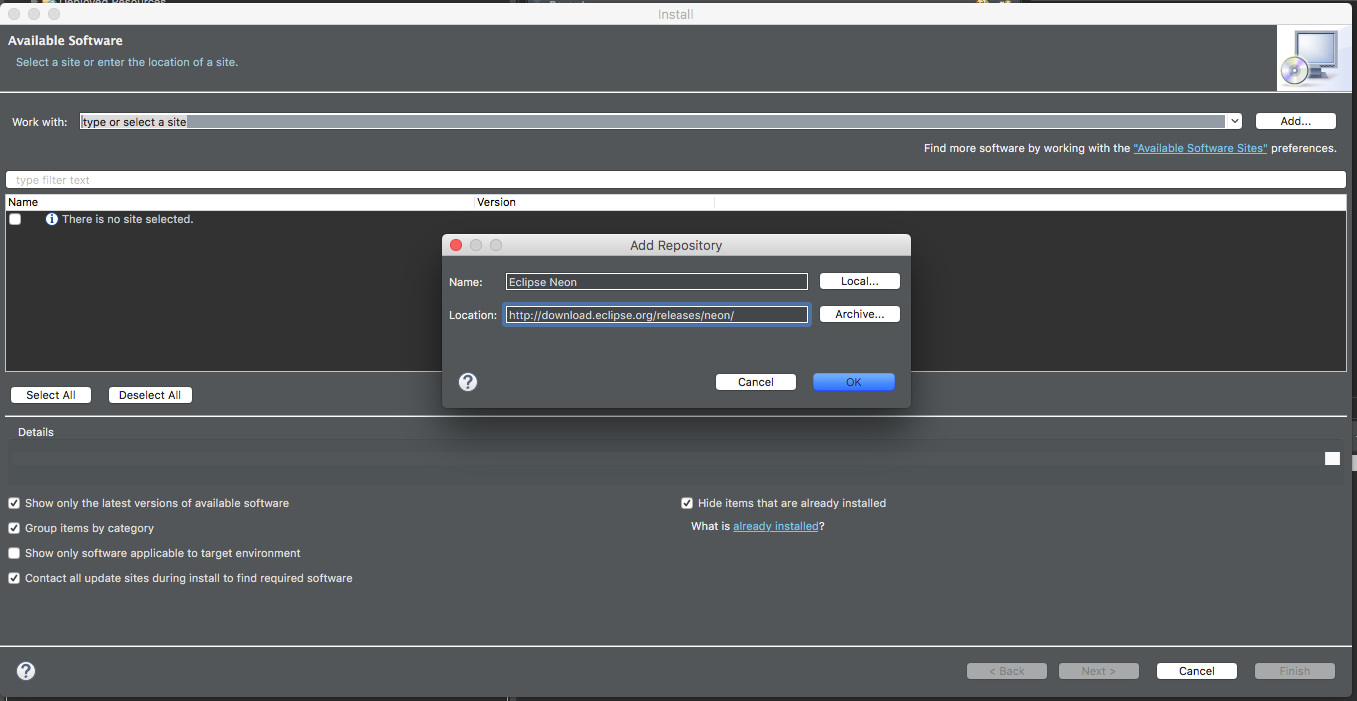

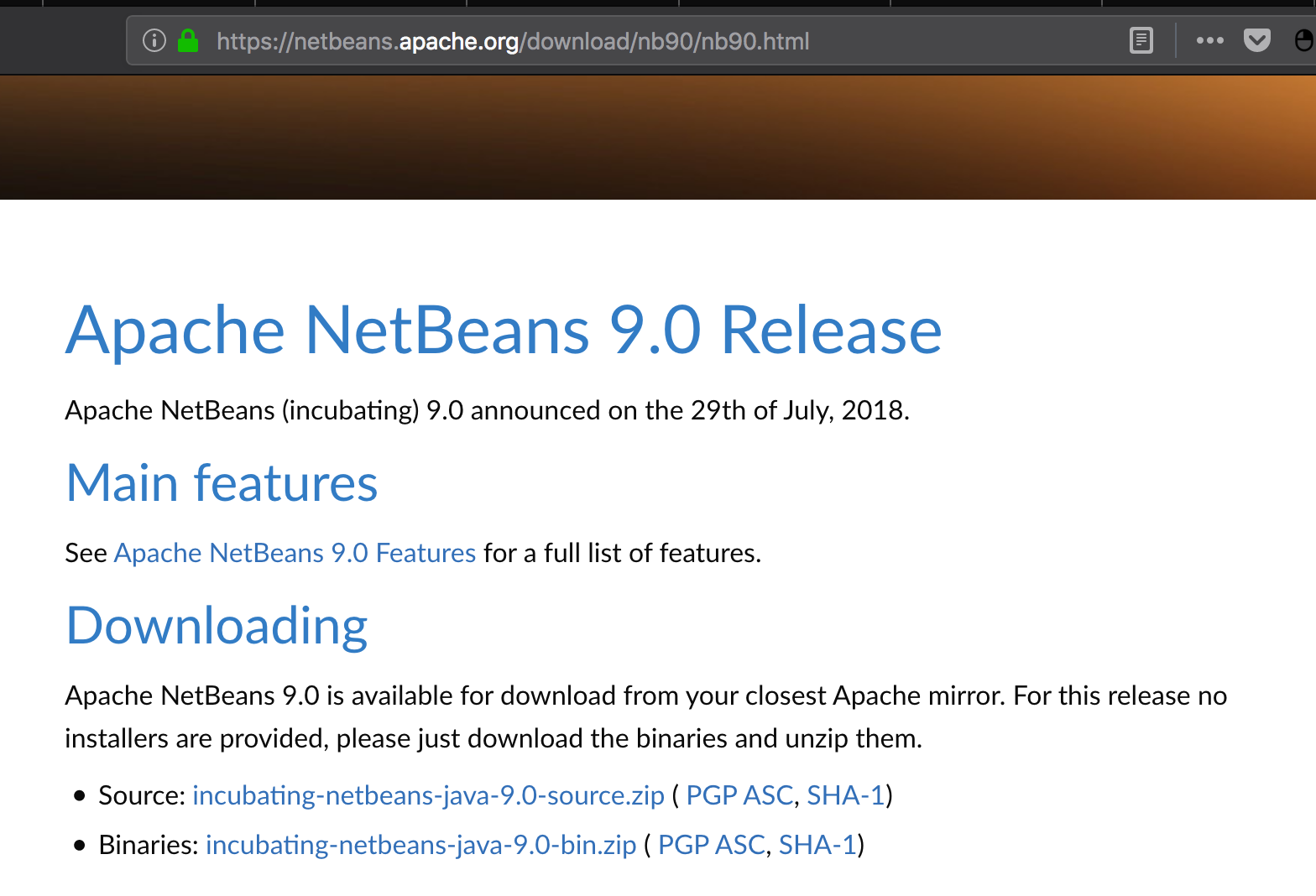

Verify your IDE compatibility and update

Please remember that any IDE ecosystem is composed by three levels:

- The IDE acting as platform

- Programming language support

- Plugins to support tools and libraries

After updating your IDE, you should also verify if all of the plugins that make part of your development cycle work fine under Java 11.

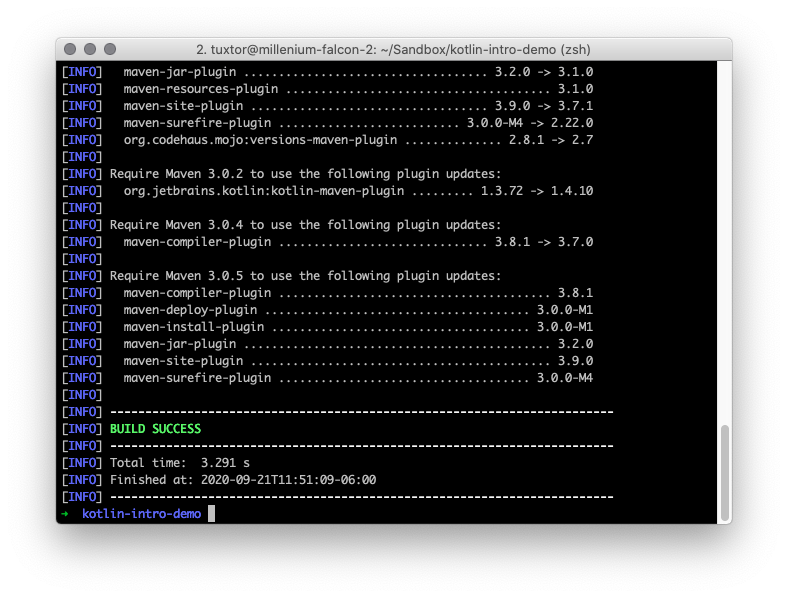

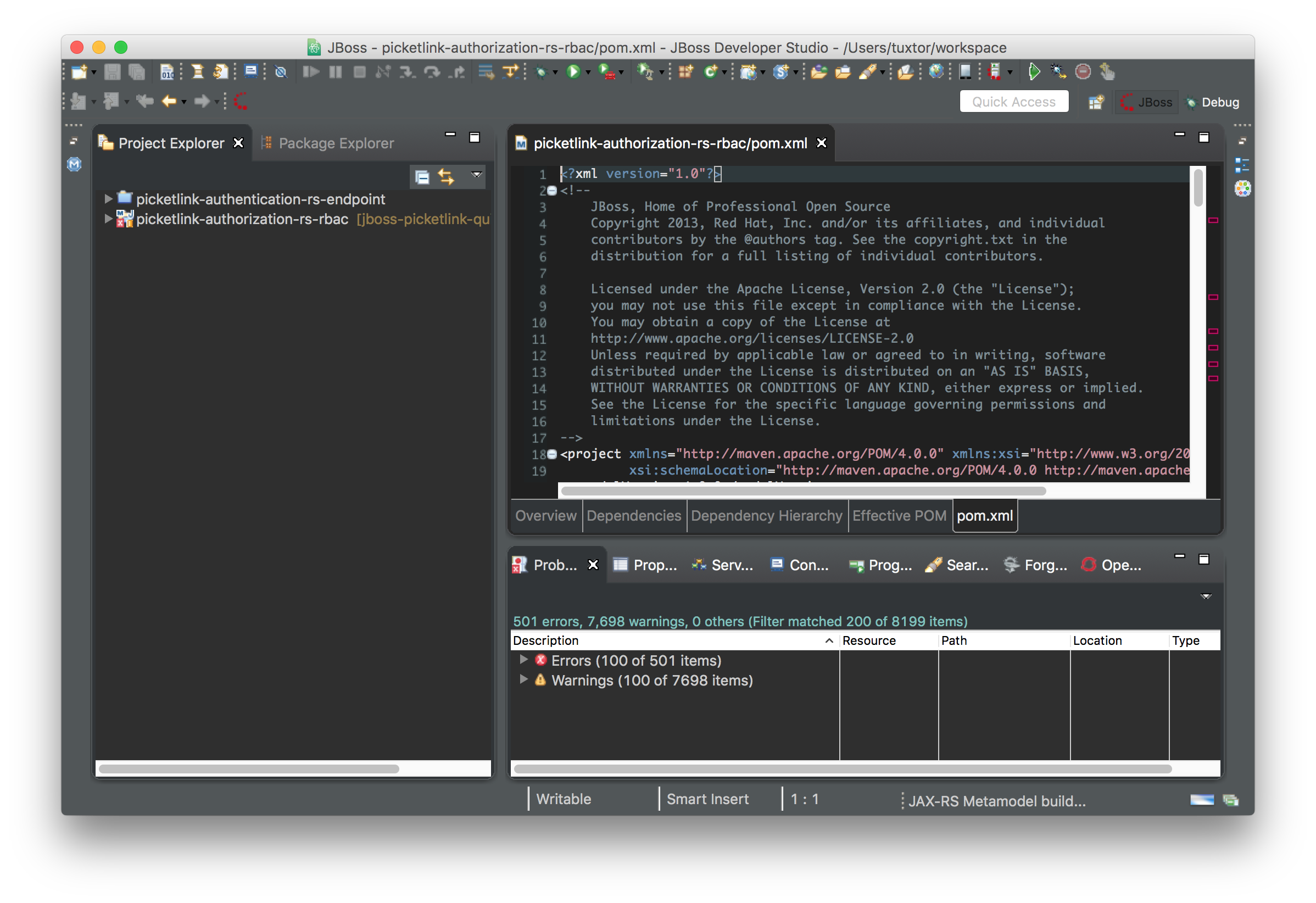

Update Maven and Maven projects

Probably the most common choice in Enterprise Java is Maven, and many IDEs use it under the hood or explicitly. Hence, you should update it.

Besides installation, please remember that Maven has a modular architecture and Maven modules version could be forced on any project definition. So, as rule of thumb you should also update these modules in your projects to the latest stable version.

To verify this quickly, you could use versions-maven-plugin:

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>versions-maven-plugin</artifactId>

<version>2.8.1</version>

</plugin>

Which includes a specific goal to verify Maven plugins versions:

mvn versions:display-plugin-updates

After that, you also need to configure Java source and target compatibility, generally this is achieved in two points.

As properties:

<properties>

...

<maven.compiler.source>11</maven.compiler.source>

<maven.compiler.target>11</maven.compiler.target>

</properties>

As configuration on Maven plugins, specially in maven-compiler-plugin:

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.0</version>

<configuration>

<release>11</release>

</configuration>

</plugin>

Finally, some plugins need to "break" the barriers imposed by Java Modules and Java Platform Teams knows about it. Hence JVM has an argument called illegal-access to allow this, at least during Java 11.

This could be a good idea in plugins like surefire and failsafe which also invoke runtimes that depend on this flag (like Arquillian tests):

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.22.0</version>

<configuration>

<argLine>

--illegal-access=permit

</argLine>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-failsafe-plugin</artifactId>

<version>2.22.0</version>

<configuration>

<argLine>

--illegal-access=permit

</argLine>

</configuration>

</plugin>

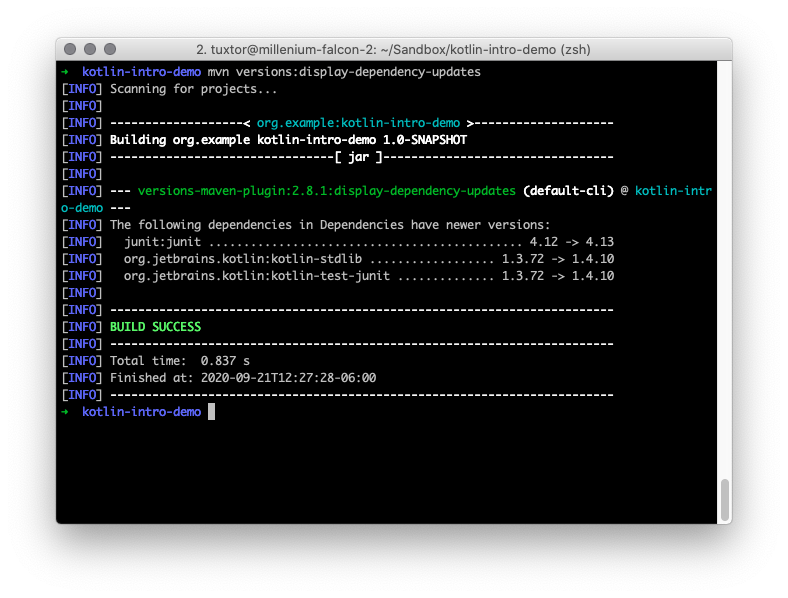

Update project dependencies

As mentioned before, you need to check for compatible versions on your Java dependencies. Sometimes these libraries could introduce breaking changes on each major version -e.g. Flyway- and you should consider a time to refactor this changes.

Again, if you use Maven versions-maven-plugin has a goal to verify dependencies version. The plugin will inform you about available updates.:

mvn versions:display-dependency-updates

In the particular case of Java EE, you already have an advantage. If you depend only on APIs -e.g. Java EE, MicroProfile- and not particular implementations, many of these issues are already solved for you.

Include Java/Jakarta EE dependencies

Probably modern REST based services won't need this, however in projects with heavy usage of SOAP and XML marshalling is mandatory to include the Java EE modules removed on Java 11. Otherwise your project won't compile and run.

You must include as dependency:

- API definition

- Reference Implementation (if needed)

At this point is also a good idea to evaluate if you could move to Jakarta EE, the evolution of Java EE under Eclipse Foundation.

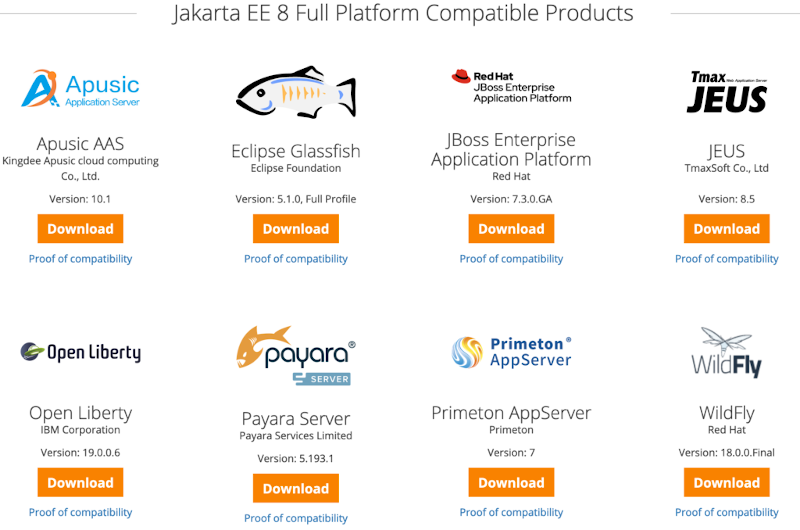

Jakarta EE 8 is practically Java EE 8 with another name, but it retains package and features compatibility, most of application servers are in the process or already have Jakarta EE certified implementations:

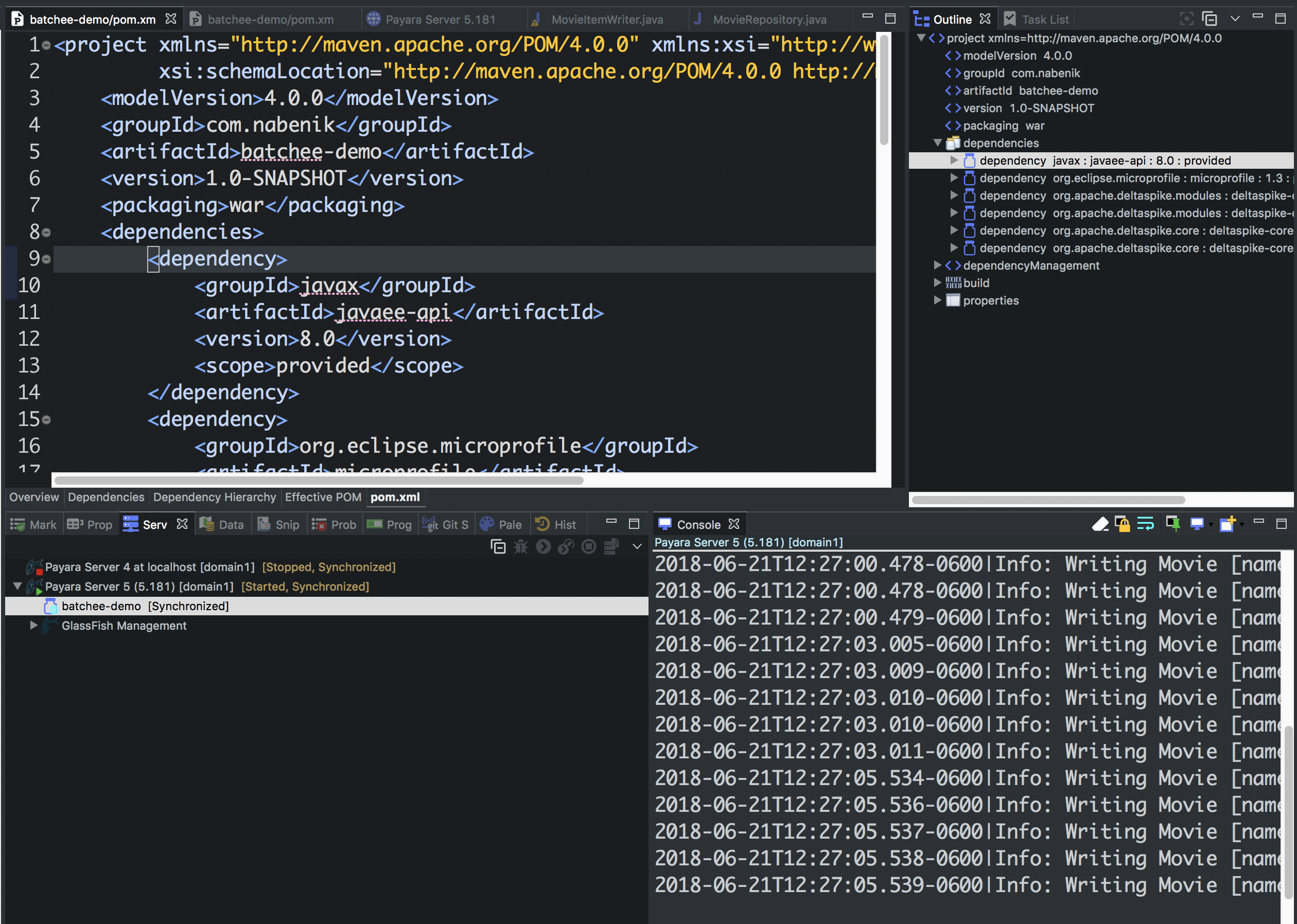

We could swap the Java EE API:

<dependency>

<groupId>javax</groupId>

<artifactId>javaee-api</artifactId>

<version>8.0.1</version>

<scope>provided</scope>

</dependency>

For Jakarta EE API:

<dependency>

<groupId>jakarta.platform</groupId>

<artifactId>jakarta.jakartaee-api</artifactId>

<version>8.0.0</version>

<scope>provided</scope>

</dependency>

After that, please include any of these dependencies (if needed):

Java Beans Activation

Java EE

<dependency>

<groupId>javax.activation</groupId>

<artifactId>javax.activation-api</artifactId>

<version>1.2.0</version>

</dependency>

Jakarta EE

<dependency>

<groupId>jakarta.activation</groupId>

<artifactId>jakarta.activation-api</artifactId>

<version>1.2.2</version>

</dependency>

JAXB (Java XML Binding)

Java EE

<dependency>

<groupId>javax.xml.bind</groupId>

<artifactId>jaxb-api</artifactId>

<version>2.3.1</version>

</dependency>

Jakarta EE

<dependency>

<groupId>jakarta.xml.bind</groupId>

<artifactId>jakarta.xml.bind-api</artifactId>

<version>2.3.3</version>

</dependency>

Implementation

<dependency>

<groupId>org.glassfish.jaxb</groupId>

<artifactId>jaxb-runtime</artifactId>

<version>2.3.3</version>

</dependency>

JAX-WS

Java EE

<dependency>

<groupId>javax.xml.ws</groupId>

<artifactId>jaxws-api</artifactId>

<version>2.3.1</version>

</dependency>

Jakarta EE

<dependency>

<groupId>jakarta.xml.ws</groupId>

<artifactId>jakarta.xml.ws-api</artifactId>

<version>2.3.3</version>

</dependency>

Implementation (runtime)

<dependency>

<groupId>com.sun.xml.ws</groupId>

<artifactId>jaxws-rt</artifactId>

<version>2.3.3</version>

</dependency>

Implementation (standalone)

<dependency>

<groupId>com.sun.xml.ws</groupId>

<artifactId>jaxws-ri</artifactId>

<version>2.3.2-1</version>

<type>pom</type>

</dependency>

Java Annotation

Java EE

<dependency>

<groupId>javax.annotation</groupId>

<artifactId>javax.annotation-api</artifactId>

<version>1.3.2</version>

</dependency>

Jakarta EE

<dependency>

<groupId>jakarta.annotation</groupId>

<artifactId>jakarta.annotation-api</artifactId>

<version>1.3.5</version>

</dependency>

Java Transaction

Java EE

<dependency>

<groupId>javax.transaction</groupId>

<artifactId>javax.transaction-api</artifactId>

<version>1.3</version>

</dependency>

Jakarta EE

<dependency>

<groupId>jakarta.transaction</groupId>

<artifactId>jakarta.transaction-api</artifactId>

<version>1.3.3</version>

</dependency>

CORBA

In the particular case of CORBA, I'm aware of its adoption. There is an independent project in eclipse to support CORBA, based on Glassfish CORBA, but this should be investigated further.

Multiple JVMs in production

If everything compiles, tests and executes. You did a successful migration.

Some deployments/environments run multiple application servers over the same Linux installation. If this is your case it is a good idea to install multiple JVMs to allow stepped migrations instead of big bang.

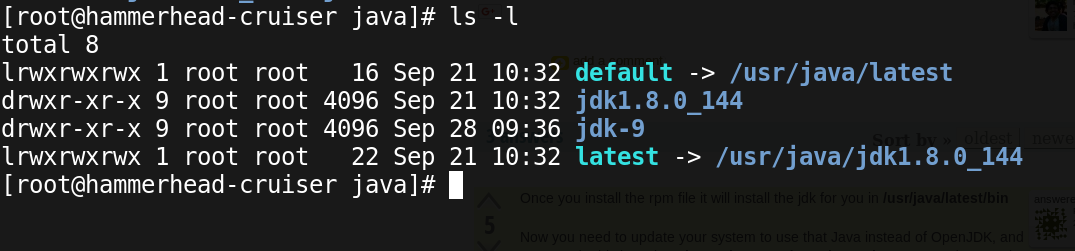

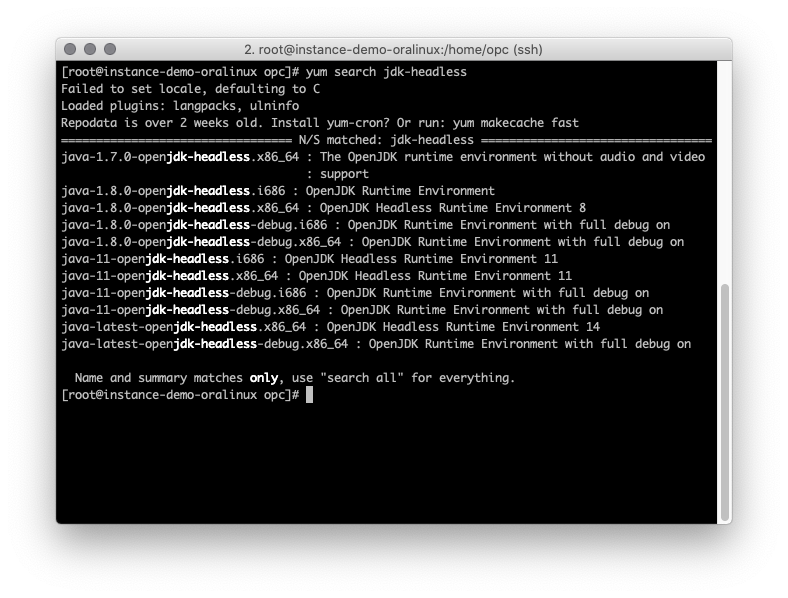

For instance, RHEL based distributions like CentOS, Oracle Linux or Fedora include various JVM versions:

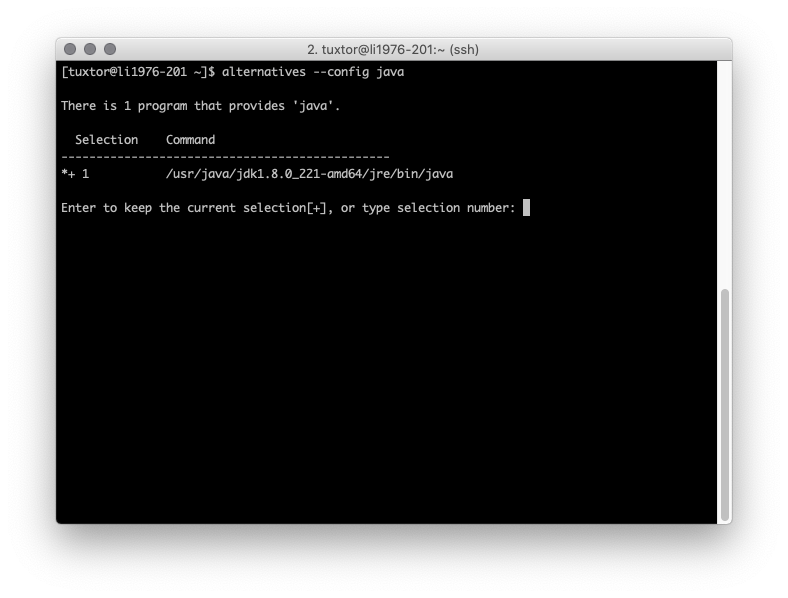

Most importantly, If you install JVMs outside directly from RPMs(like Oracle HotSpot), Java alternatives will give you support:

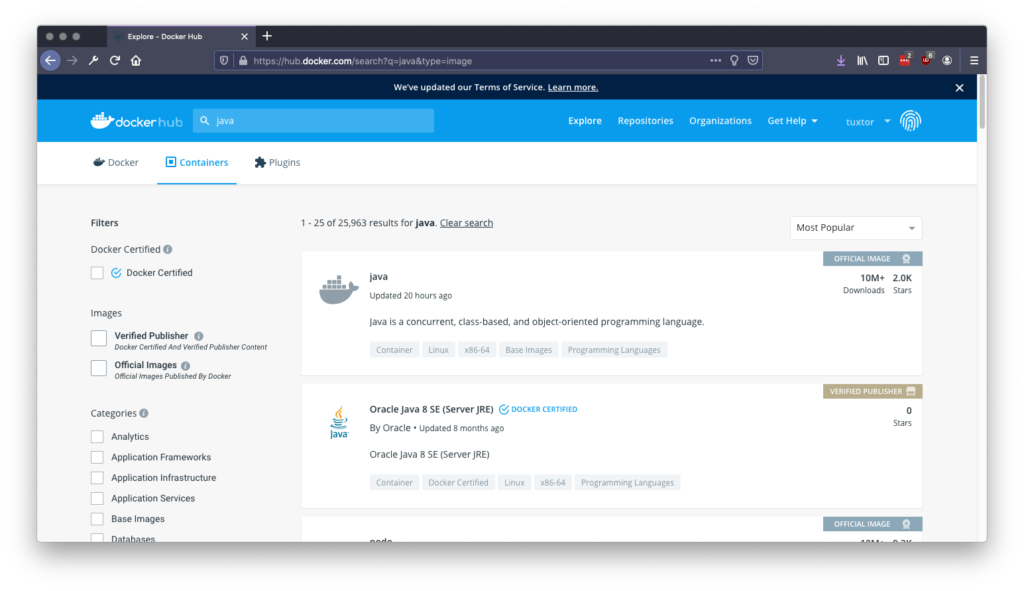

However on modern deployments probably would be better to use Docker, specially on Windows which also needs .bat script to automate this task. Most of the JVM distributions are also available on Docker Hub:

A simple MicroProfile JWT token provider with Payara realms and JAX-RS

02 October 2019

In this tutorial I will demonstrate how to create a "simple" (yet practical) token provider using Payara realms as users/groups store, with a couple of tweaks it's applicable to any MicroProfile implementation (since all implementations support JAX-RS).

In short this guide will:

- Create a public/private key in RSASSA-PKCS-v1_5 format to sign tokens

- Create user, password and fixed groups on Payara file realm (groups will be web and mobile)

- Create a vanilla JakartaEE + MicroProfile project

- Generate tokens that are compatible with MicroProfile JWT specification using Nimbus JOSE

Create a public/private pair

MicroProfile JWT establishes that tokens should be signed by using RSASSA-PKCS-v1_5 signature with SHA-256 hash algorithm.

The general idea behind this is to generate a private key that will be used on token provider, subsequently the clients only need the public key to verify the signature. One of the "simple" ways to do this is by generating an SSH keypair using OpenSSL.

First it is necessary to generate a base key to be signed:

openssl genrsa -out baseKey.pem

From the base key generate the PKCS#8 private key:

openssl pkcs8 -topk8 -inform PEM -in baseKey.pem -out privateKey.pem -nocrypt

Using the private key you could generate a public (and distributable) key

openssl rsa -in baseKey.pem -pubout -outform PEM -out publicKey.pem

Finally some crypto libraries like bouncy castle only accept traditional RSA keys, hence it is safe to convert it using also openssl:

openssl rsa -in privateKey.pem -out myprivateKey.pem

At the end myprivateKey.pem could be used to sign the tokens and publicKey.pem could be distributed to any potential consumer.

Create user, password and groups on Payara realm

According to Glassfish documentation, the general idea of realms is to provide a security policy for domains, being able to contain users and groups and consequently assign users to groups, these realms could be created using:

- File containers

- Certificates databases

- LDAP directories

- Plain old JDBC

- Solaris

- Custom realms

For tutorial purposes a file realm will be used but any properly configured Realm should work.

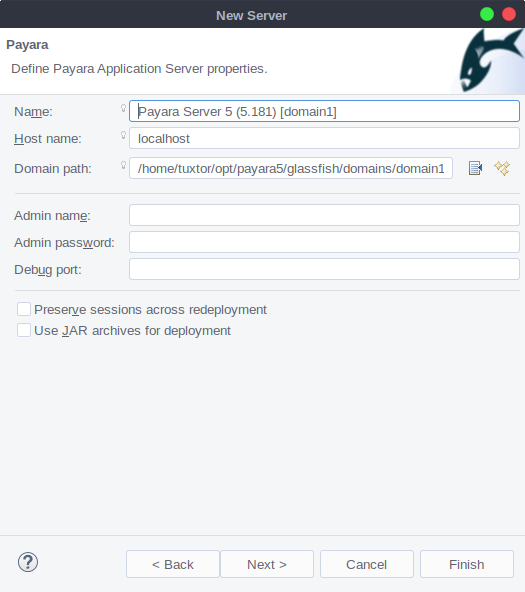

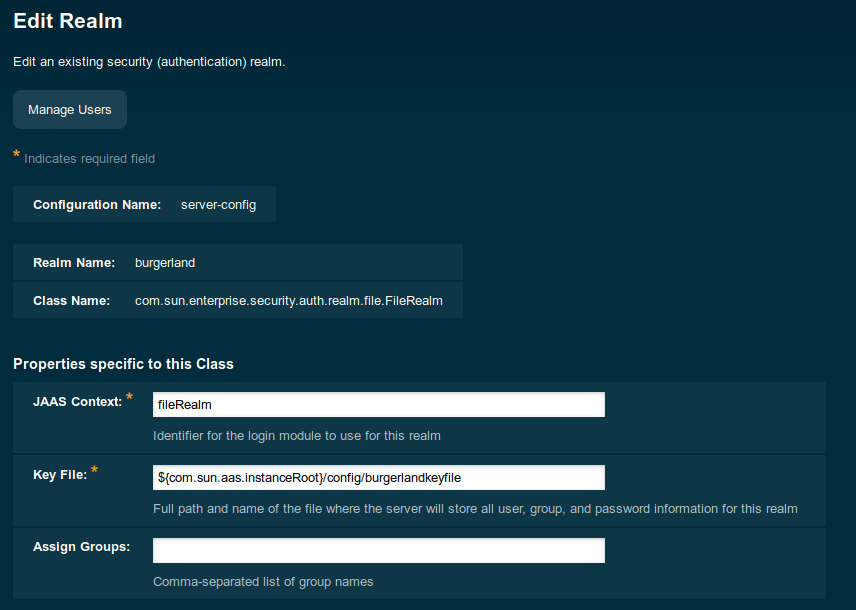

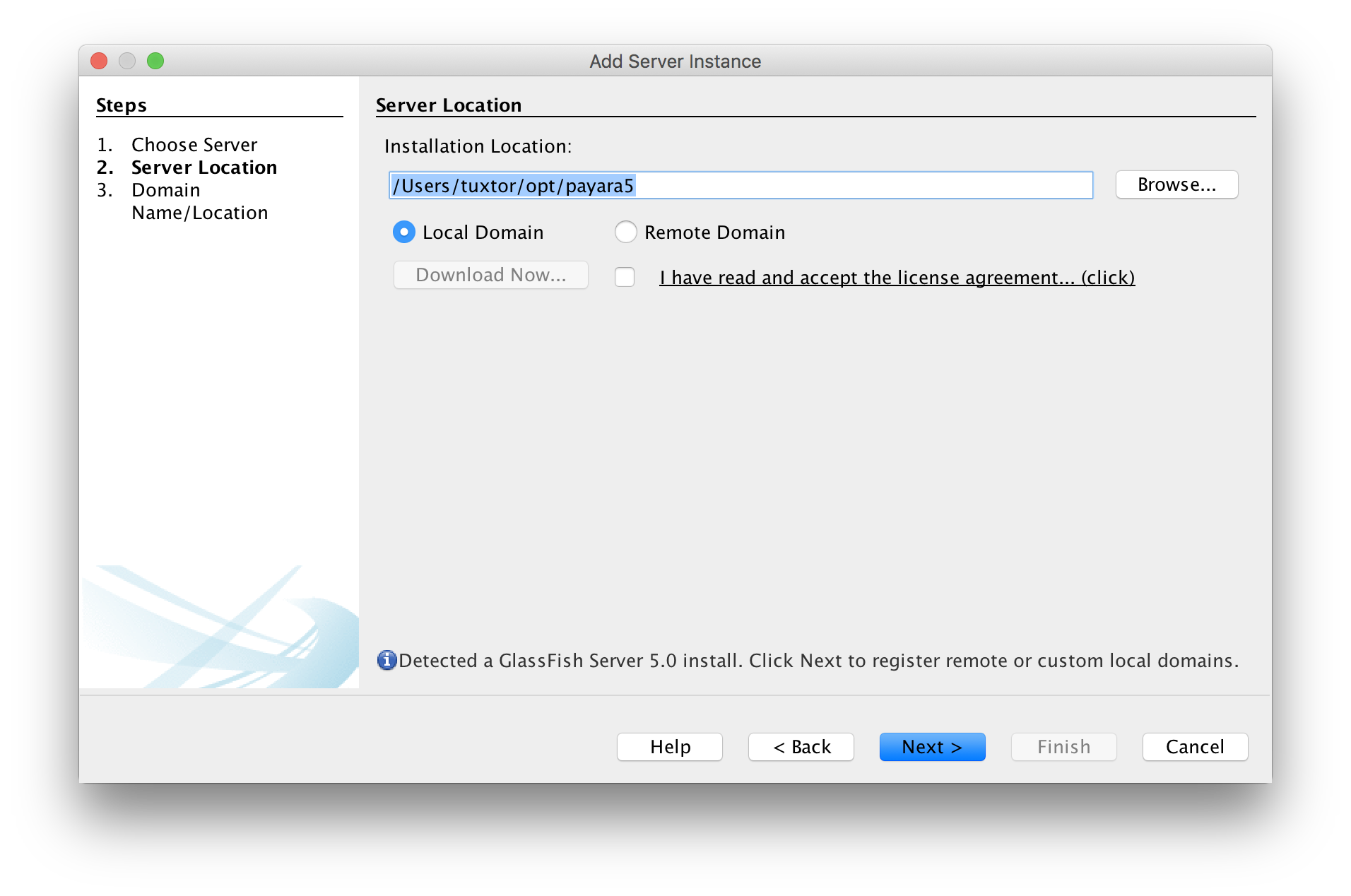

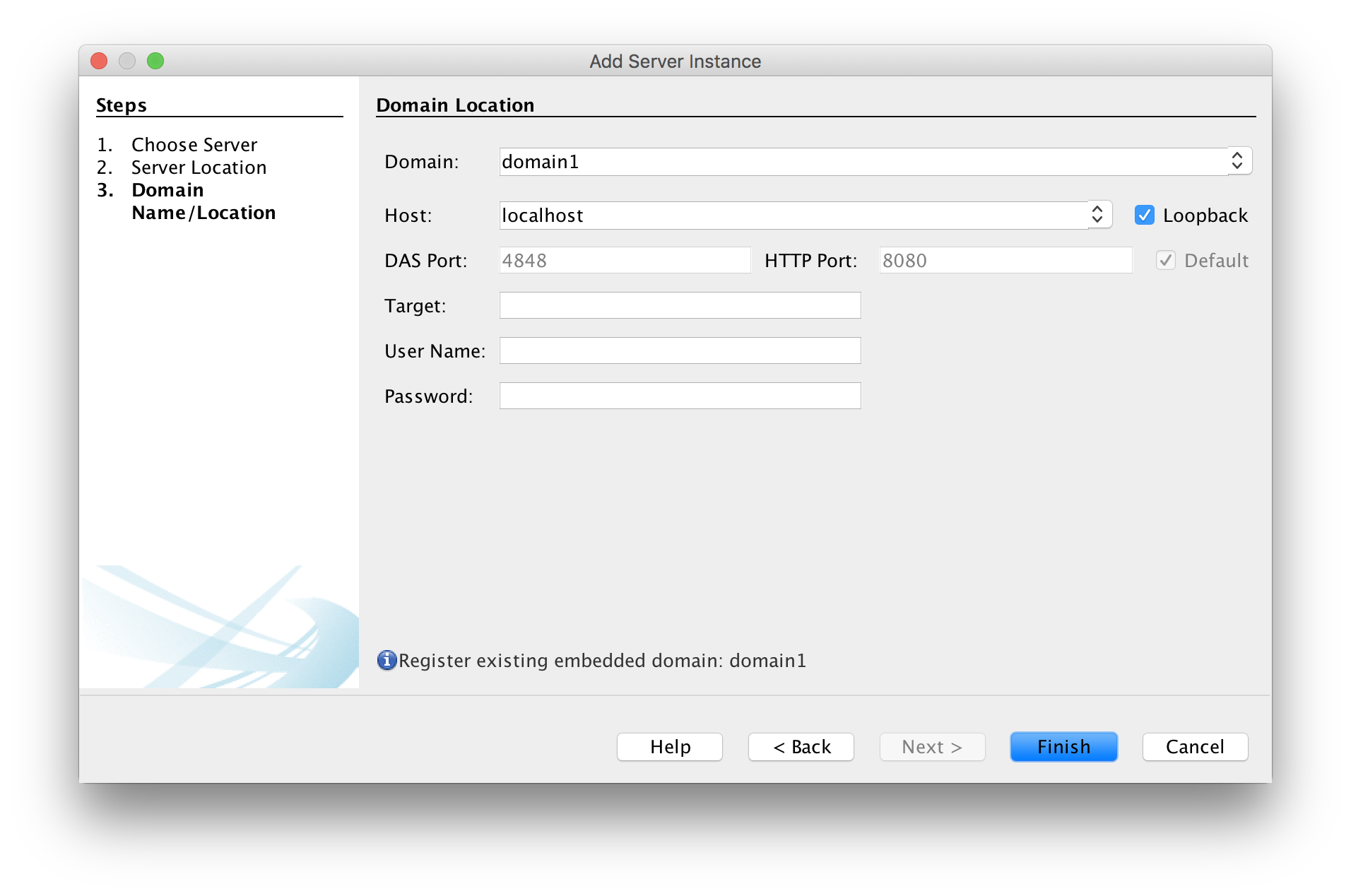

On vanilla Glassfish installations domain 1 uses server-config configuration, thus to create the realm you need to go to server-config -> Security -> Realms and add a new realm, in this tutorial burgerland will be created with the following configuration:

- Name: burgerland

- Class name: com.sun.enterprise.security.auth.realm.file.FileRealm

- JAAS Context: fileRealm

- Key file: ${com.sun.aas.instanceRoot}/config/burgerlandkeyfile

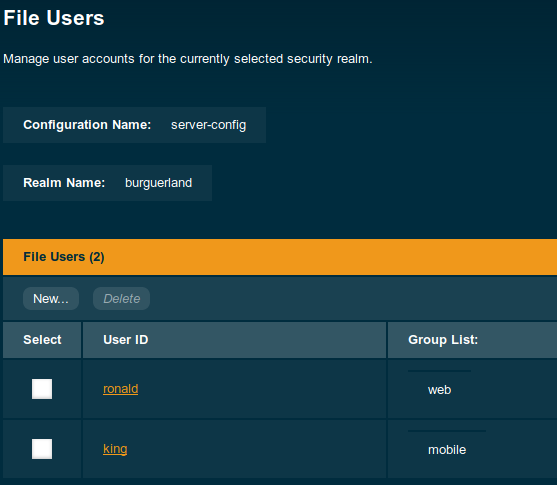

Once the realm is ready we can add two users/password with different roles (web, mobile), being ronald and king, final result should look like this:

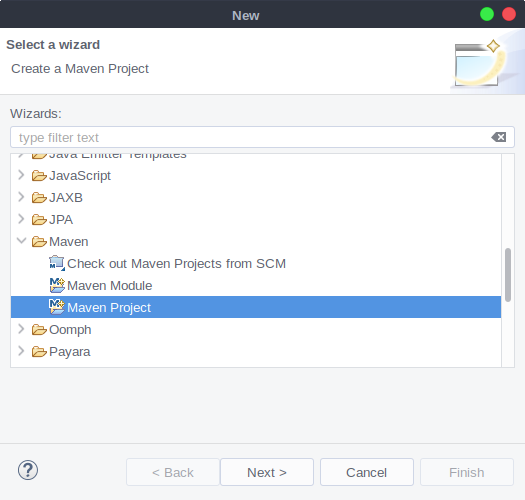

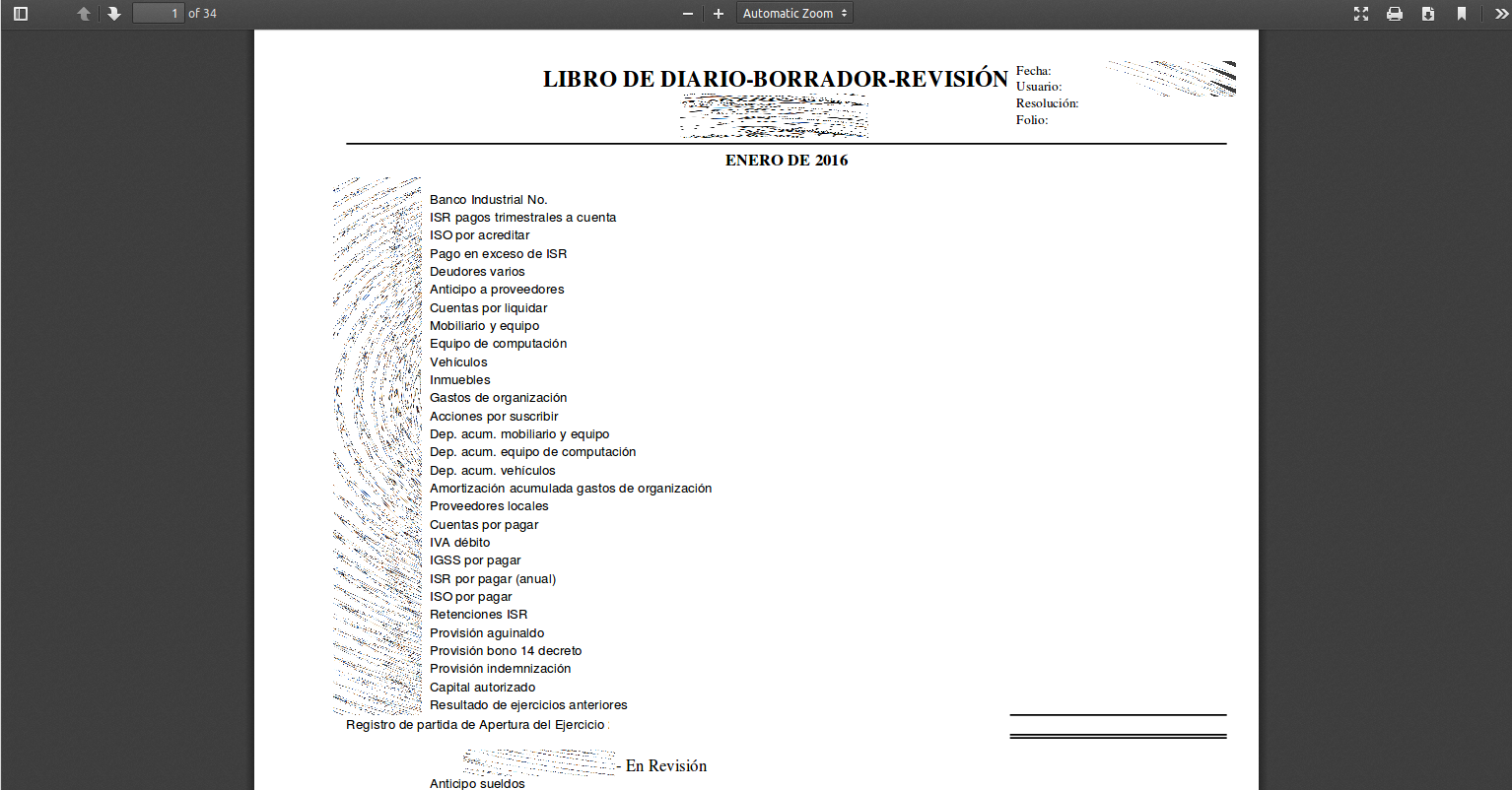

Create a vanilla JakartaEE project

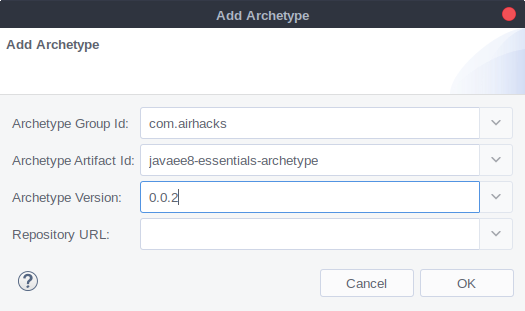

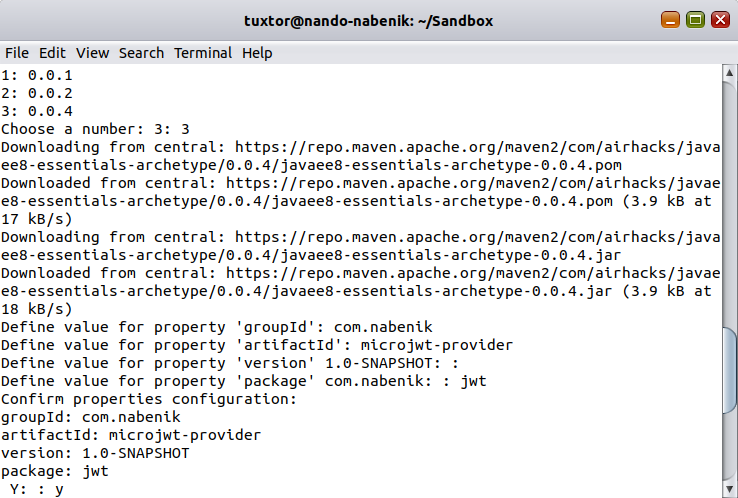

In order to generate the Tokens, we need to create a greenfield application, this could be achieved by using javaee8-essentials-archetype with the following command:

mvn archetype:generate -Dfilter=com.airhacks:javaee8-essentials-archetype -Dversion=0.0.4

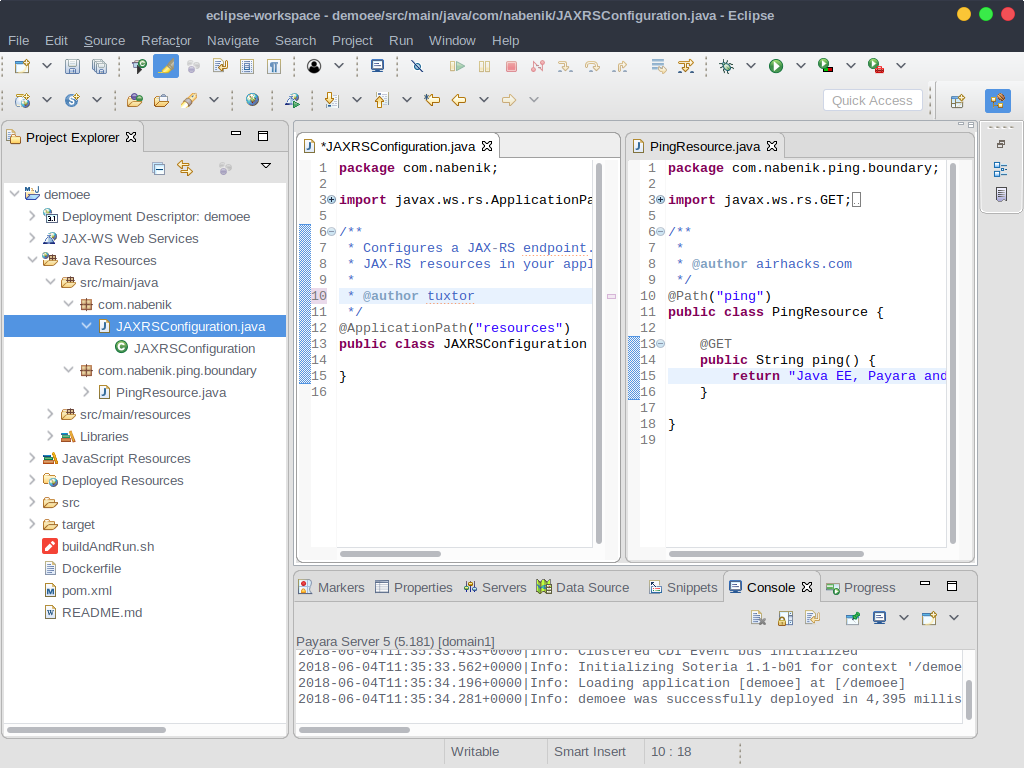

As usual archetype assistant will ask for project details, project will be named microjwt-provider:

Now, it is necessary to copy the myprivateKey.pem file generated at section 1 to project's classpath using Maven structure, specifically to src/main/resources, to avoid any confussion I also renamed this file to privateKey.pem, the final structure will look like this:

microjwt-provider$ tree

.

├── buildAndRun.sh

├── Dockerfile

├── pom.xml

├── README.md

└── src

└── main

├── java

│ └── com

│ └── airhacks

│ ├── JAXRSConfiguration.java

│ └── ping

│ └── boundary

│ └── PingResource.java

├── resources

│ ├── META-INF

│ │ └── microprofile-config.properties

│ └── privateKey.pem

└── webapp

└── WEB-INF

└── beans.xml

You could get rid of source code since application will be bootstrapped using a different package structure :-).

Generating MP compliant tokens from Payara realm

In order to create a provider, we will create a project with a central JAX-RS resource named TokenProviderResource with the following characteristics:

- Receives a POST+Form params petition over

/auth - Resource creates and signs a token using privateKey.pem certificate

- Returns token in response body

- Roles will be established using

web.xmlfile - Roles will be mapped to Payara realm using

glassfish-web.xmlfile - User, password and roles will be checked using Servlet 3+ API

Nimbus JOSE and Bouncy Castle should be added as dependencies in order to read and sign tokens, these should be added at pom.xml file.

<dependency>

<groupId>com.nimbusds</groupId>

<artifactId>nimbus-jose-jwt</artifactId>

<version>5.7</version>

</dependency>

<dependency>

<groupId>org.bouncycastle</groupId>

<artifactId>bcpkix-jdk15on</artifactId>

<version>1.53</version>

</dependency>

Later, a enum will be used to describe the fixed roles in a type safe way:

public enum RolesEnum {

WEB("web"),

MOBILE("mobile");

private String role;

public String getRole() {

return this.role;

}

RolesEnum(String role) {

this.role = role;

}

}

Once dependencies and roles are into project, we will implement a plain old Java bean in chage of token creation. First to be compliant with MicroProfile token structure a MPJWTToken bean is created, this will also contain a fast objet to JSON string converter but you could use any other marshaller implementation.

public class MPJWTToken {

private String iss;

private String aud;

private String jti;

private Long exp;

private Long iat;

private String sub;

private String upn;

private String preferredUsername;

private List<String> groups = new ArrayList<>();

private List<String> roles;

private Map<String, String> additionalClaims;

//Gets and sets go here

public String toJSONString() {

JSONObject jsonObject = new JSONObject();

jsonObject.appendField("iss", iss);

jsonObject.appendField("aud", aud);

jsonObject.appendField("jti", jti);

jsonObject.appendField("exp", exp / 1000);

jsonObject.appendField("iat", iat / 1000);

jsonObject.appendField("sub", sub);

jsonObject.appendField("upn", upn);

jsonObject.appendField("preferred_username", preferredUsername);

if (additionalClaims != null) {

for (Map.Entry<String, String> entry : additionalClaims.entrySet()) {

jsonObject.appendField(entry.getKey(), entry.getValue());

}

}

JSONArray groupsArr = new JSONArray();

for (String group : groups) {

groupsArr.appendElement(group);

}

jsonObject.appendField("groups", groupsArr);

return jsonObject.toJSONString();

}

Once JWT structure is complete, a CypherService is implemented to create and sign the token. This service will implement the JWT generator and also a key "loader" that reads privateKey file from classpath using Bouncy Castle.

public class CypherService {

public static String generateJWT(PrivateKey key, String subject, List<String> groups) {

JWSHeader header = new JWSHeader.Builder(JWSAlgorithm.RS256)

.type(JOSEObjectType.JWT)

.keyID("burguerkey")

.build();

MPJWTToken token = new MPJWTToken();

token.setAud("burgerGt");

token.setIss("https://burger.nabenik.com");

token.setJti(UUID.randomUUID().toString());

token.setSub(subject);

token.setUpn(subject);

token.setIat(System.currentTimeMillis());

token.setExp(System.currentTimeMillis() + 7*24*60*60*1000); // 1 week expiration!

token.setGroups(groups);

JWSObject jwsObject = new JWSObject(header, new Payload(token.toJSONString()));

// Apply the Signing protection

JWSSigner signer = new RSASSASigner(key);

try {

jwsObject.sign(signer);

} catch (JOSEException e) {

e.printStackTrace();

}

return jwsObject.serialize();

}

public PrivateKey readPrivateKey() throws IOException {

InputStream inputStream = CypherService.class.getResourceAsStream("/privateKey.pem");

PEMParser pemParser = new PEMParser(new InputStreamReader(inputStream));

JcaPEMKeyConverter converter = new JcaPEMKeyConverter().setProvider(new BouncyCastleProvider());

Object object = pemParser.readObject();

KeyPair kp = converter.getKeyPair((PEMKeyPair) object);

return kp.getPrivate();

}

}

CypherService will be used from TokenProviderResource as injectable CDI bean. One of my motivations to separate key reading from signing process is that key reading should be implemented respecting resource lifecycle, hence the key will be loaded at CDI @PostConstruct callback.

Here, full resource code:

@Singleton

@Path("/auth")

public class TokenProviderResource {

@Inject

CypherService cypherService;

private PrivateKey key;

@PostConstruct

public void init() {

try {

key = cypherService.readPrivateKey();

} catch (IOException e) {

e.printStackTrace();

}

}

@POST

@Produces(MediaType.APPLICATION_JSON)

@Consumes(MediaType.APPLICATION_FORM_URLENCODED)

public Response doTokenLogin(@FormParam("username") String username, @FormParam("password")String password,

@Context HttpServletRequest request){

List<String> target = new ArrayList<>();

try {

request.login(username, password);

if(request.isUserInRole(RolesEnum.MOBILE.getRole()))

target.add(RolesEnum.MOBILE.getRole());

if(request.isUserInRole(RolesEnum.WEB.getRole()))

target.add(RolesEnum.WEB.getRole());

}catch (ServletException ex){

ex.printStackTrace();

return Response.status(Response.Status.UNAUTHORIZED)

.build();

}

String token = cypherService.generateJWT(key, username, target);

return Response.status(Response.Status.OK)

.header(AUTHORIZATION, "Bearer ".concat(token))

.entity(token)

.build();

}

}

JAX-RS endpoints in the end are abstractions over Servlet API, consequently you could inject the HttpServletRequest or HttpServletResponse object on any method (doTokenLogin). In this case it is usefull since I'm triggering a manual login using Servlet 3+ login method.

As noticed by many users, Servlet API does not allow to read user roles in a portable way, hence I'm just checking if a given user is included in fixed roles using the previously defined enum and adding these roles to the target ArrayList.

In this code the parameters were declared as @FormParam consuming x-www-form-urlencoded data making it usefull for plain HTML forms, but this configuration is completely optional.

Mapping project to Payara realm

The main motivation to use Servlet's login method is basically because it is already integrated with Java EE security schemes, hence using the realm will be a simple two-step configuration:

- Add the realm/roles configuration at

web.xmlfile in the project - Map Payara groups to application roles using

glassfish-web.xmlfile

If you wanna know the full description of this mapping I found a useful post here.

First, I need to map the application to burgerland realm and declare the two roles. Since I'm not selecting an auth method, project will fallback to BASIC method, however I'm not protecting any resource so, credentials won't be explicitly required on any HTTP request:

<?xml version="1.0" encoding="UTF-8"?>

<web-app xmlns="http://xmlns.jcp.org/xml/ns/javaee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://xmlns.jcp.org/xml/ns/javaee http://xmlns.jcp.org/xml/ns/javaee/web-app_3_1.xsd"

version="3.1">

<login-config>

<realm-name>burgerland</realm-name>

</login-config>

<security-role>

<role-name>web</role-name>

</security-role>

<security-role>

<role-name>mobile</role-name>

</security-role>

</web-app>

Payara groups and Java web application roles are not the same concepts, but these could actually be mapped using glassfish descriptor glassfish-web.xml:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE glassfish-web-app PUBLIC "-//GlassFish.org//DTD GlassFish Application Server 3.1 Servlet 3.0//EN" "http://glassfish.org/dtds/glassfish-web-app_3_0-1.dtd">

<glassfish-web-app error-url="">

<security-role-mapping>

<role-name>mobile</role-name>

<group-name>mobile</group-name>

</security-role-mapping>

<security-role-mapping>

<role-name>web</role-name>

<group-name>web</group-name>

</security-role-mapping>

</glassfish-web-app>

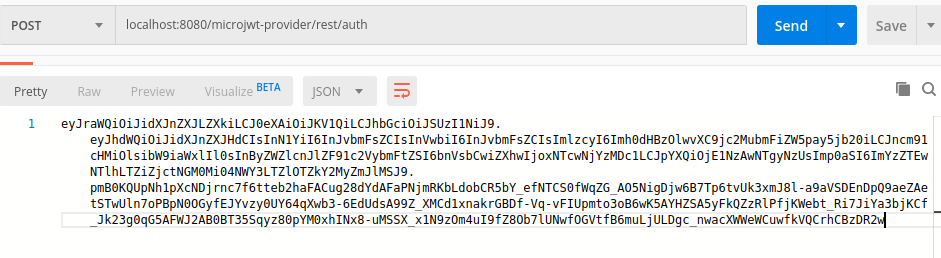

Finally the new application is deployed and a simple test demonstrates the functionality of token provider:

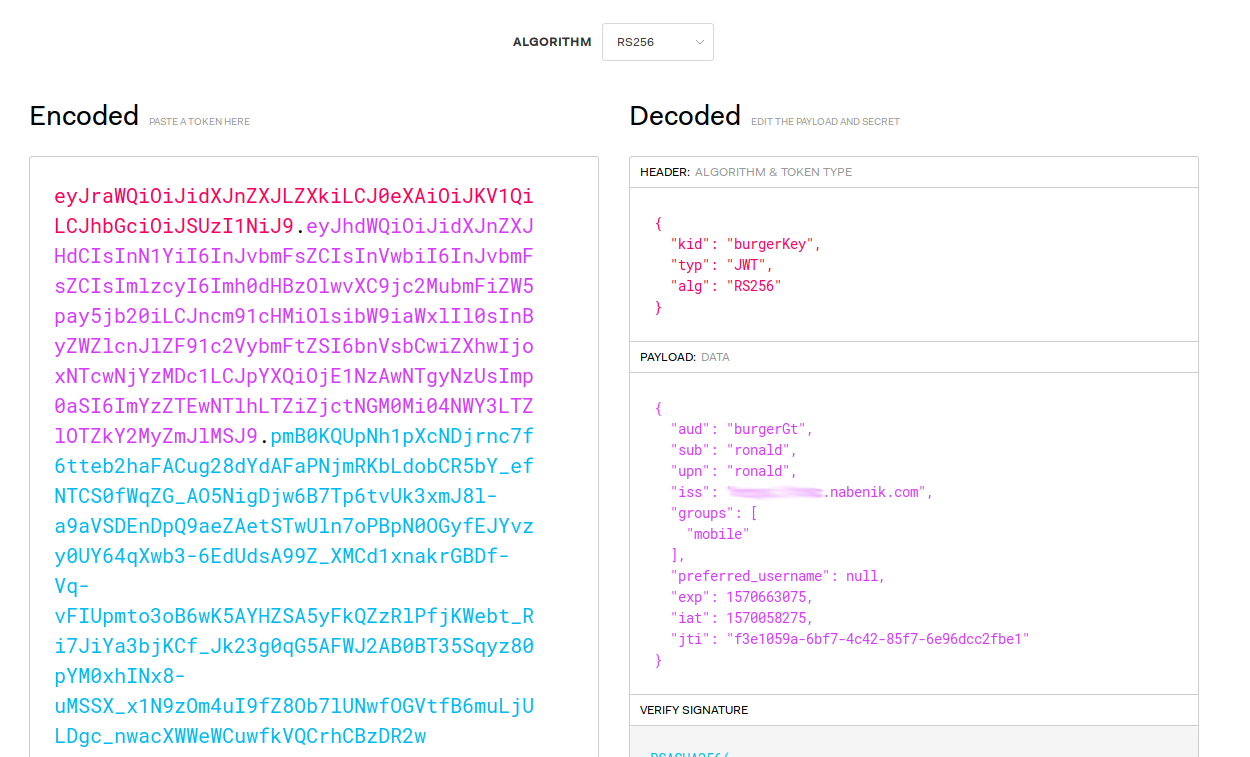

The token could be explored using any JWT tool, like the popular jwt.io, here the token is a compatible JWT implementation:

And as stated previously the signature could be checked using only the PUBLIC key:

As always, full implementation is available at GitHub.

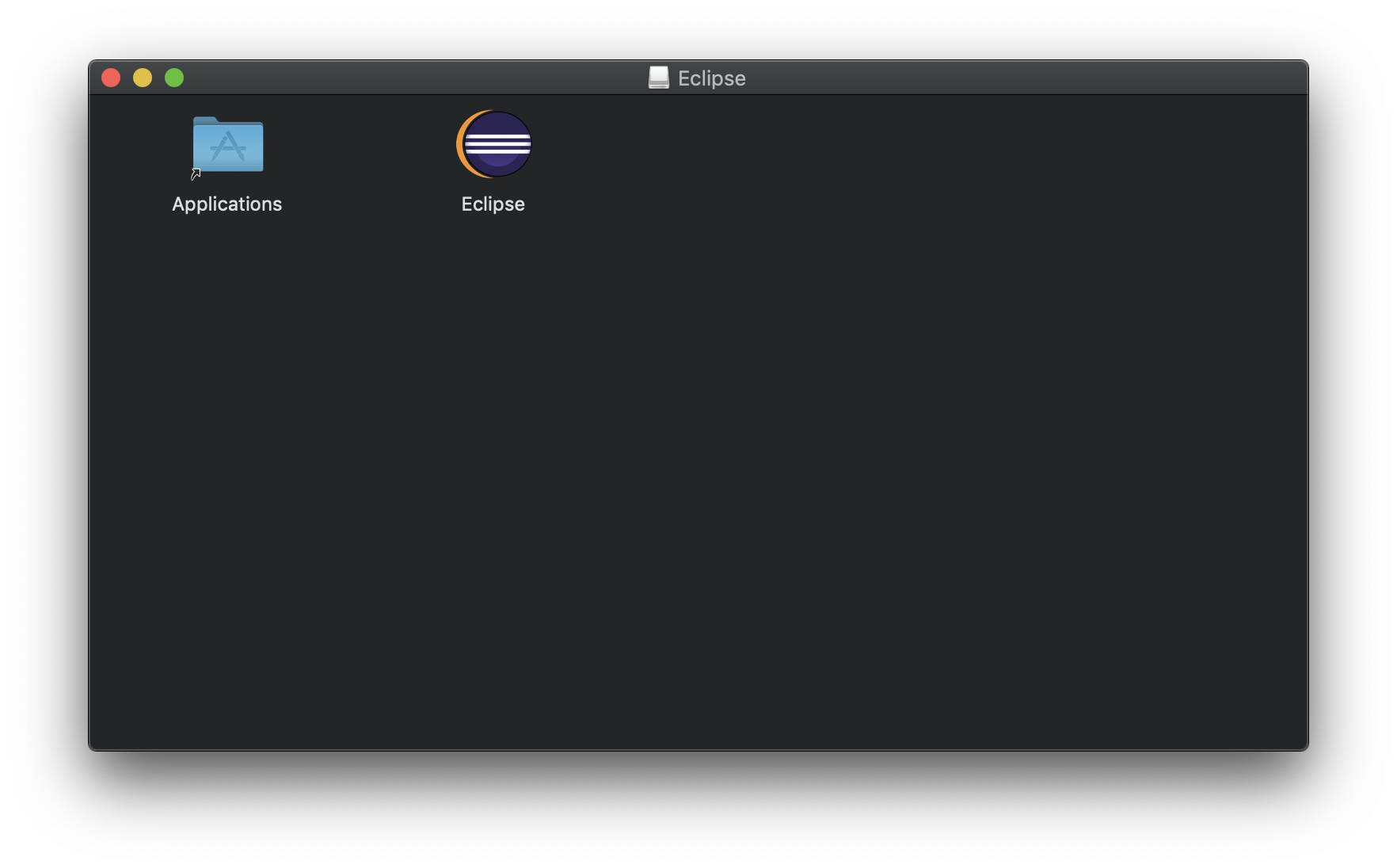

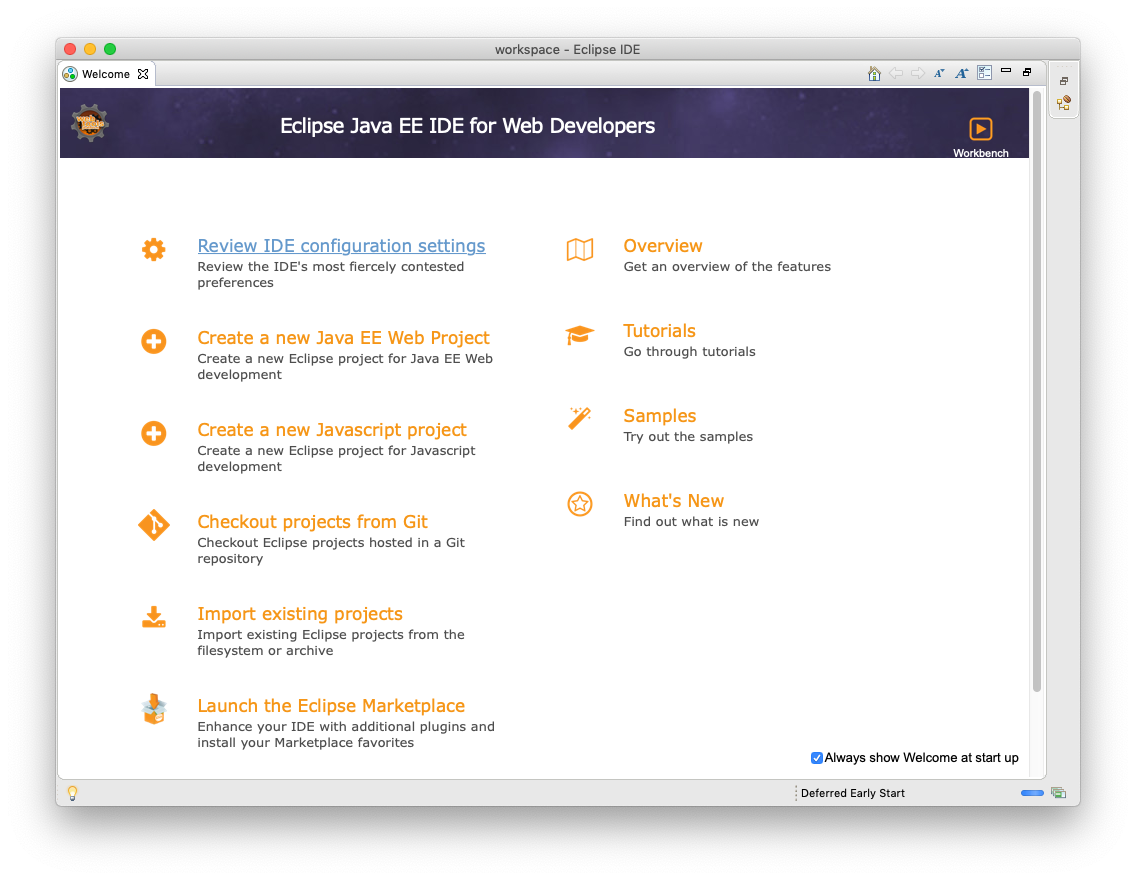

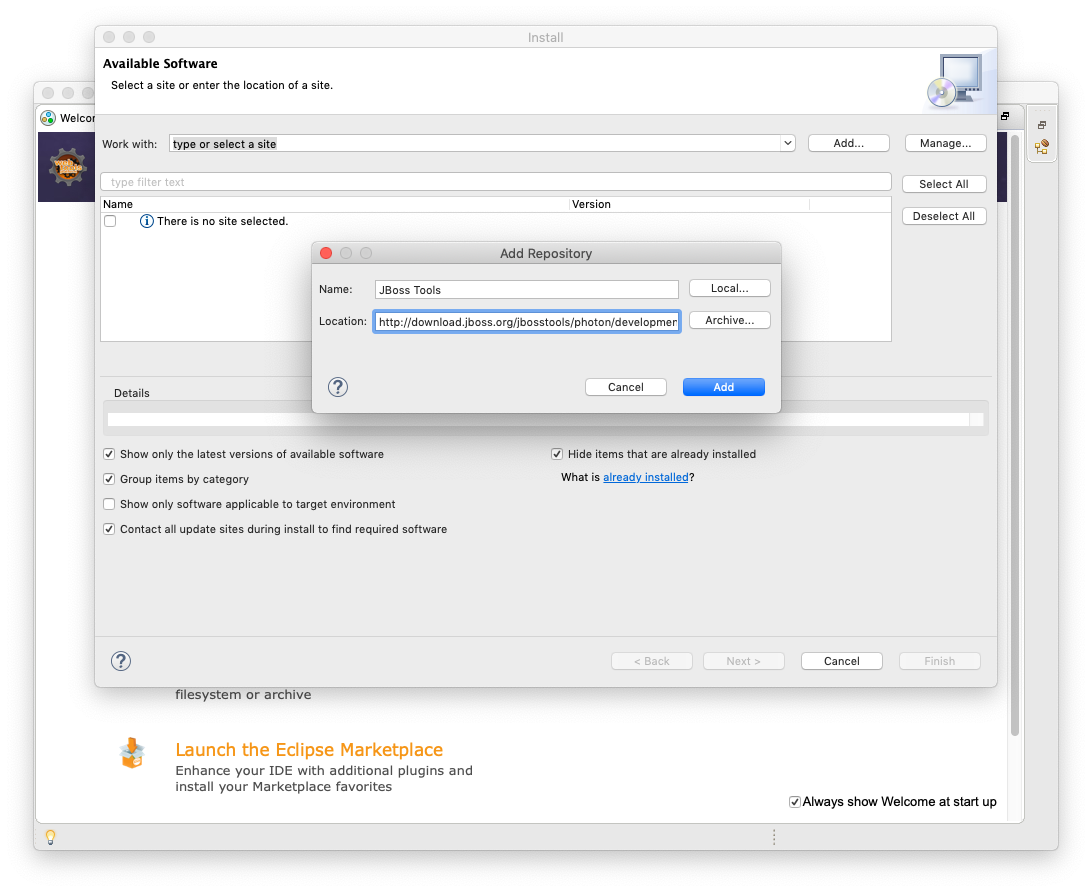

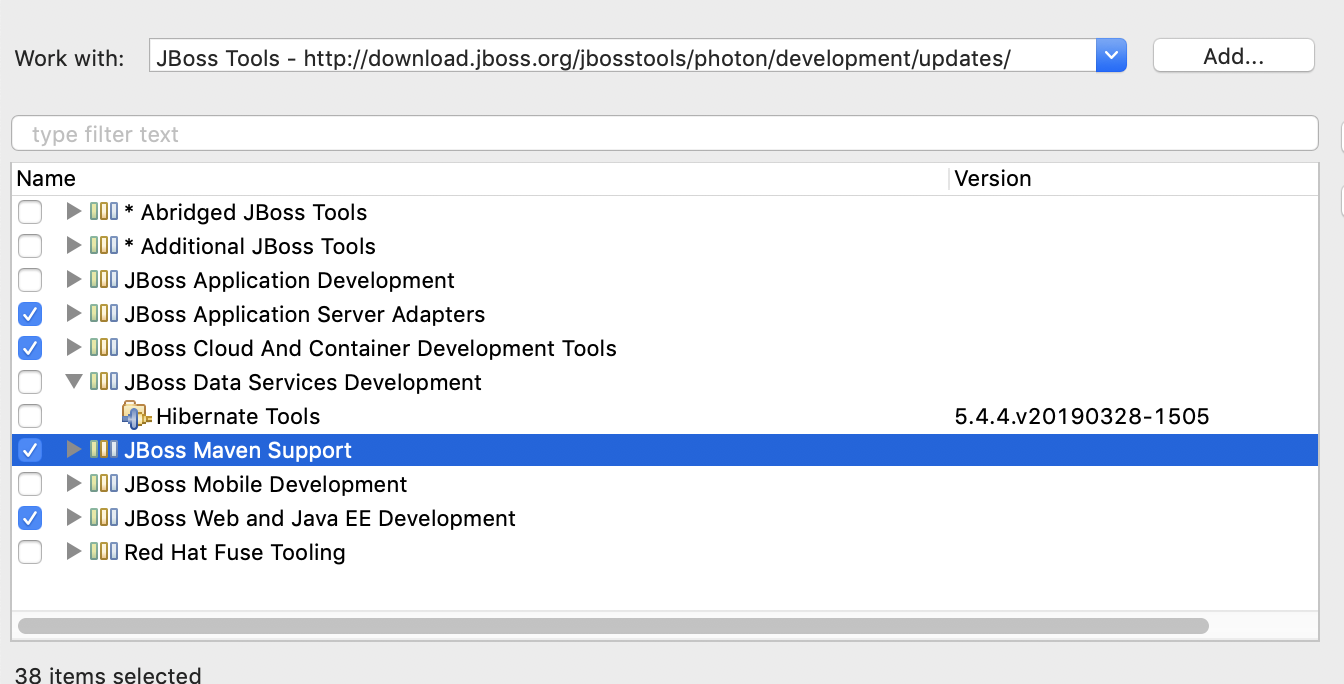

How to pack Angular 8 applications on regular war files

11 September 2019

From time to time it is necessary to distribute SPA applications using war files as containers, in my experience this is necessary when:

- You don't have control over deployment infrastructure

- You're dealing with rigid deployment standards

- IT people is reluctant to publish a plain old web server

Anyway, and as described in Oracle's documentation one of the benefits of using war files is the possibility to include static (HTML/JS/CSS) files in the deployment, hence is safe to assume that you could distribute any SPA application using a war file as wrapper (with special considerations).

Creating a POC with Angular 8 and Java War

To demonstrate this I will create a project that:

- Is compatible with the big three Java IDEs (NetBeans, IntelliJ, Eclipse) and VSCode

- Allows you to use the IDEs as JavaScript development IDEs

- Allows you to create a SPA modern application (With all npm, ng, cli stuff)

- Allows you to combine Java(Maven) and JavaScript(Webpack) build systems

- Allows you to distribute a minified and ready for production project

Bootstrapping a simple Java web project

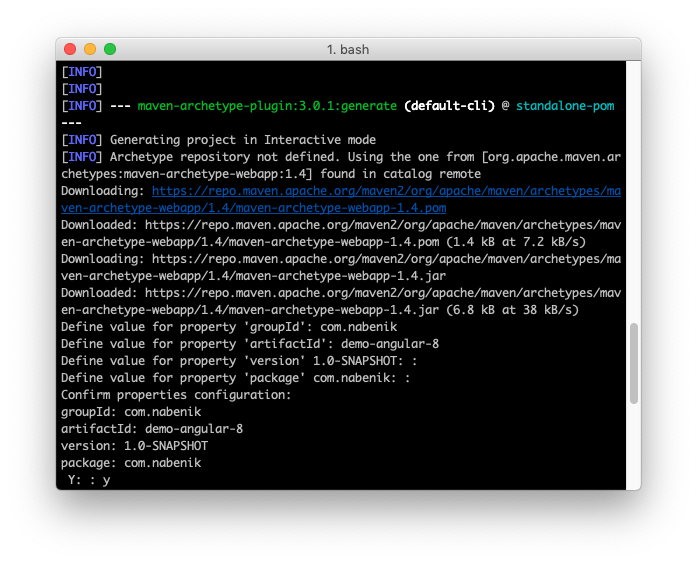

To bootstrap the Java project, you could use the plain old maven-archetype-webapp as basis:

mvn archetype:generate -DarchetypeGroupId=org.apache.maven.archetypes -DarchetypeArtifactId=maven-archetype-webapp -DarchetypeVersion=1.4

The interactive shell will ask you for you project characteristics including groupId, artifactId (project name) and base package.

In the end you should have the following structure as result:

demo-angular-8$ tree

.

├── pom.xml

└── src

└── main

└── webapp

├── WEB-INF

│ └── web.xml

└── index.jsp

4 directories, 3 files

Now you should be able to open your project in any IDE. By default the 'pom.xml' will include locked down versions for maven plugins, you could safely get rid of those since we won't personalize the entire Maven lifecycle, just a couple of hooks.

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.nabenik</groupId>

<artifactId>demo-angular-8</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>war</packaging>

<name>demo-angular-8 Maven Webapp</name>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.7</maven.compiler.source>

<maven.compiler.target>1.7</maven.compiler.target>

</properties>

</project>

Besides that index.jsp is not necessary, just delete it.

Bootstrapping a simple Angular JS project

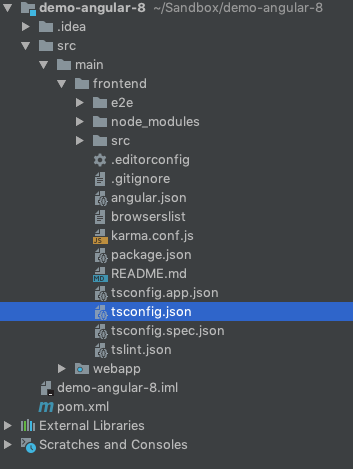

As an opinionated approach I suggest to isolate the Angular project at its own directory (src/main/frontend), on the past and with simple frameworks (AngularJS, Knockout, Ember) it was possible to bootstrap the entire project with a couple of includes in the index.html file, however nowadays most of the modern front end projects use some kind of bundler/linter in order to enable modern (>=ES6) features like modules, and in the case of Angular, it uses Webpack under the hood for this.

For this guide I assume that you already have installed all Angular CLI tools, hence we could go inside our source code structure and bootstrap the Angular project.

demo-angular-8$ cd src/main/

demo-angular-8/src/main$ ng new frontend

This will bootstrap a vanilla Angular project, and in fact you could consider the src/main/frontend folder as a separate root (and also you could open this directly from VSCode), the final structure will be like this:

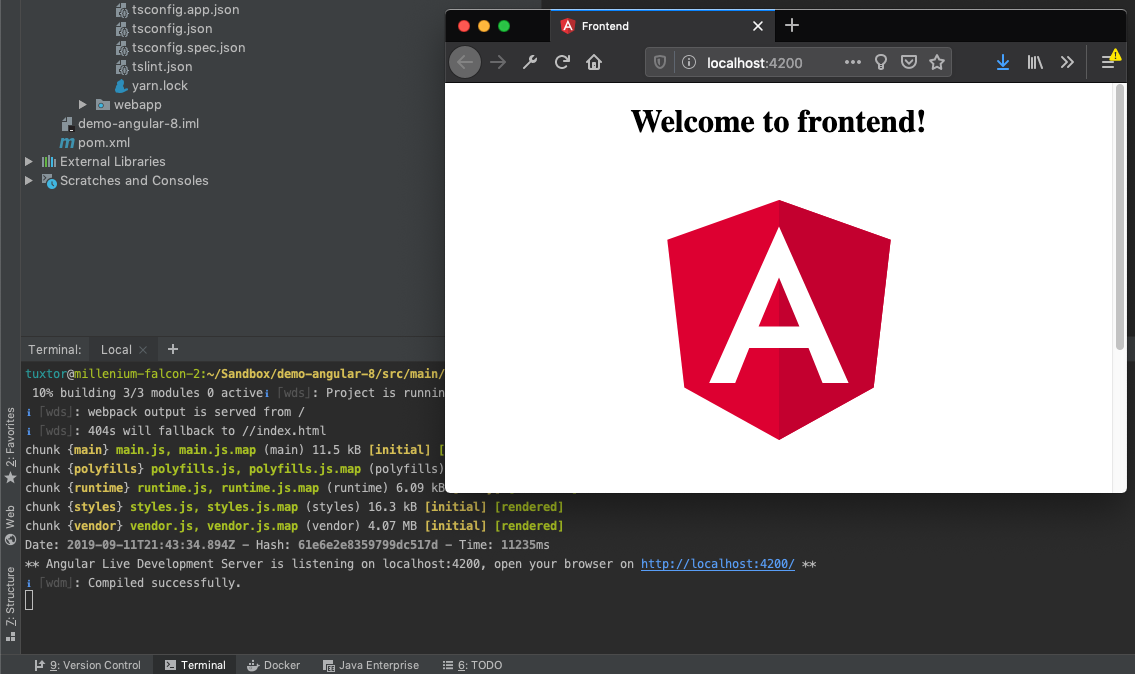

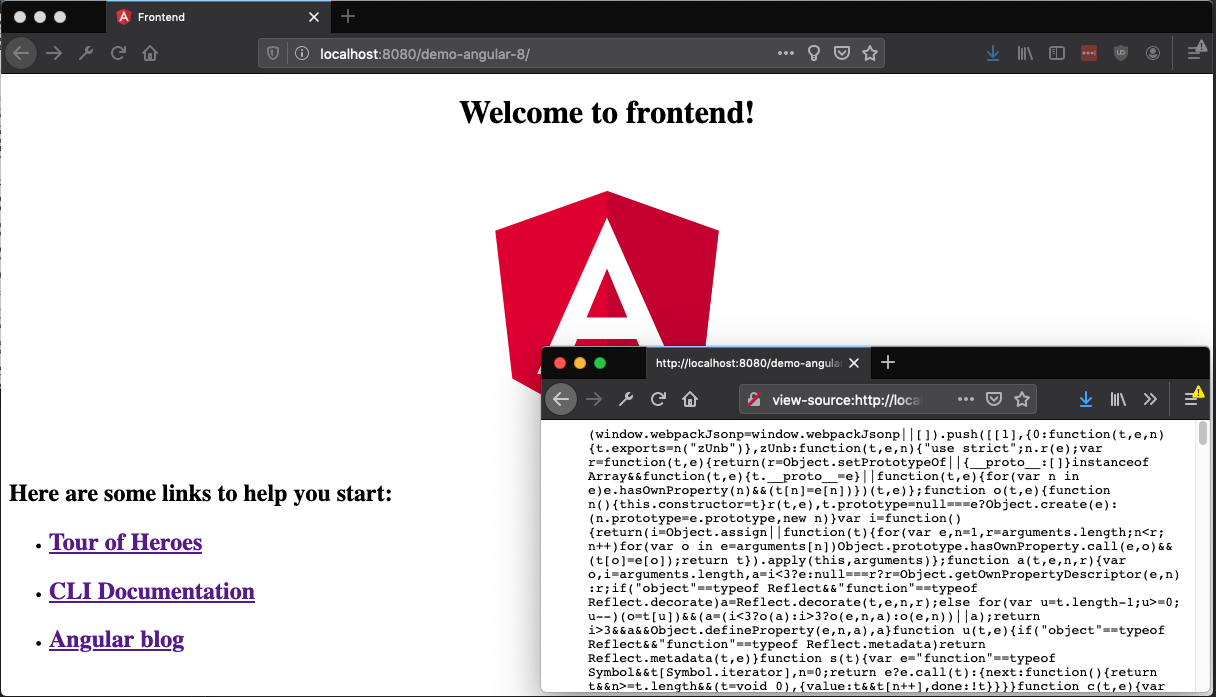

As a first POC I started the application directly from CLI using IntelliJ IDEA and ng serve --open, all worked as expected.

Invoking Webpack from Maven

One of the useful plugins for this task is frontend-maven-plugin which allows you to:

- Download common JS package managers (npm, cnpm, bower, yarn)

- Invoke JS build systems and tests (grunt, gulp, webpack or npm itself, karma)

By default Angular project come with hooks from npm to ng but we need to add a hook in package.json to create a production quality build (buildProduction), please double check the base-href parameter since I'm using the default root from Java conventions (same as project name)

...

"scripts": {

"ng": "ng",

"start": "ng serve",

"build": "ng build",

"buildProduction": "ng build --prod --base-href /demo-angular-8/",

"test": "ng test",

"lint": "ng lint",

"e2e": "ng e2e"

}

...

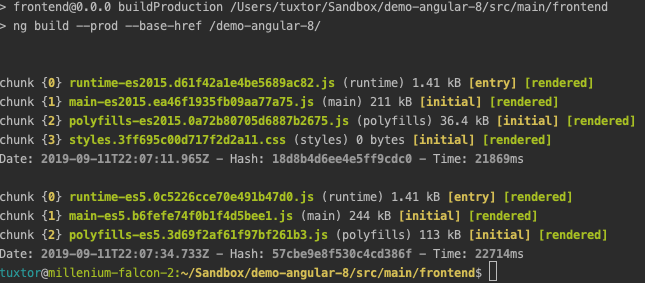

To test this build we could execute npm run buildProduction at webproject's root (src/main/frontend), the output should be like this:

Finally It is necessary to invoke or new target with maven, hence our configuration should:

- Install NodeJS (and NPM)

- Install JS dependencies

- Invoke our new hook

- Copy the result to our final distributable war

To achieve this, the following configuration should be enough:

<build>

<finalName>demo-angular-8</finalName>

<plugins>

<plugin>

<groupId>com.github.eirslett</groupId>

<artifactId>frontend-maven-plugin</artifactId>

<version>1.6</version>

<configuration>

<workingDirectory>src/main/frontend</workingDirectory>

</configuration>

<executions>

<execution>

<id>install-node-and-npm</id>

<goals>

<goal>install-node-and-npm</goal>

</goals>

<configuration>

<nodeVersion>v10.16.1</nodeVersion>

</configuration>

</execution>

<execution>

<id>npm install</id>

<goals>

<goal>npm</goal>

</goals>

<configuration>

<arguments>install</arguments>

</configuration>

</execution>

<execution>

<id>npm build</id>

<goals>

<goal>npm</goal>

</goals>

<configuration>

<arguments>run buildProduction</arguments>

</configuration>

<phase>generate-resources</phase>

</execution>

</executions>

</plugin>

<plugin>

<artifactId>maven-war-plugin</artifactId>

<version>3.2.2</version>

<configuration>

<failOnMissingWebXml>false</failOnMissingWebXml>

<!-- Add frontend folder to war package -->

<webResources>

<resource>

<directory>src/main/frontend/dist/frontend</directory>

</resource>

</webResources>

</configuration>

</plugin>

</plugins>

</build>

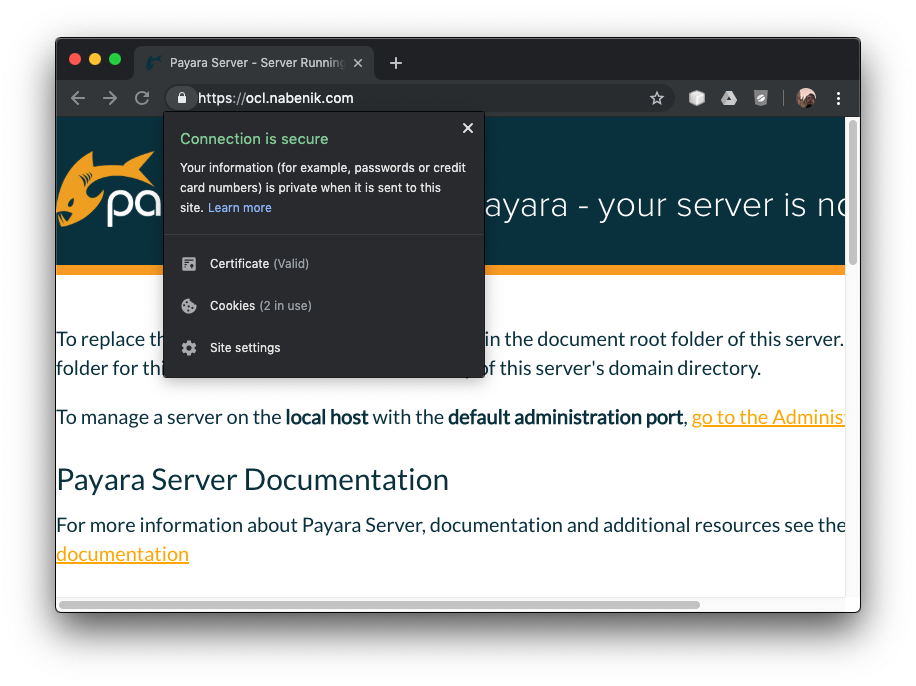

And that's it!. Once you execute mvn clean package you will obtain as result a portable war file that will run over any Servlet container runtime. For Instance I tested it with Payara Full 5, working as expected.

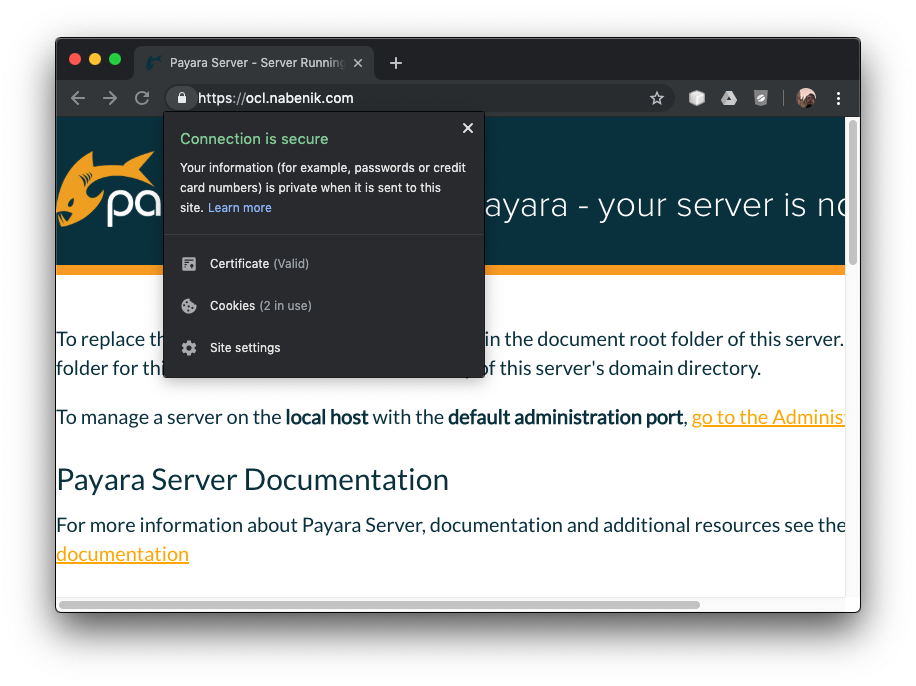

How to install Payara 5 with NGINX and Let's Encrypt over Oracle Linux 7.x

02 May 2019

From field experiences I must affirm that one of the greatest and stable combinations is Java Application Servers + Reverse Proxies, although some of the functionality is a clear overlap, I tend to put reverse proxies in front of application servers for the following reasons (please see NGINX page for more details):

- Load balancing: The reverse proxy acts as traffic cop and could be used as API gateway for clustered instances/backing services

- Web acceleration: Most of our applications nowadays use SPA frameworks, hence it is worth to cache all the js/css/html files and free the application server from this responsibility

- Security: Most of the HTTP requests could be intercepted by the reverse proxy before any attempt against the application server, increasing the opportunity to define rules

- SSL Management: It is easier to install/manage/deploy OpenSSL certificates in Apache/NGINX if compared to Java KeyStores. Besides this, Let's Encrypt officially support NGINX with plugins.

Requirements

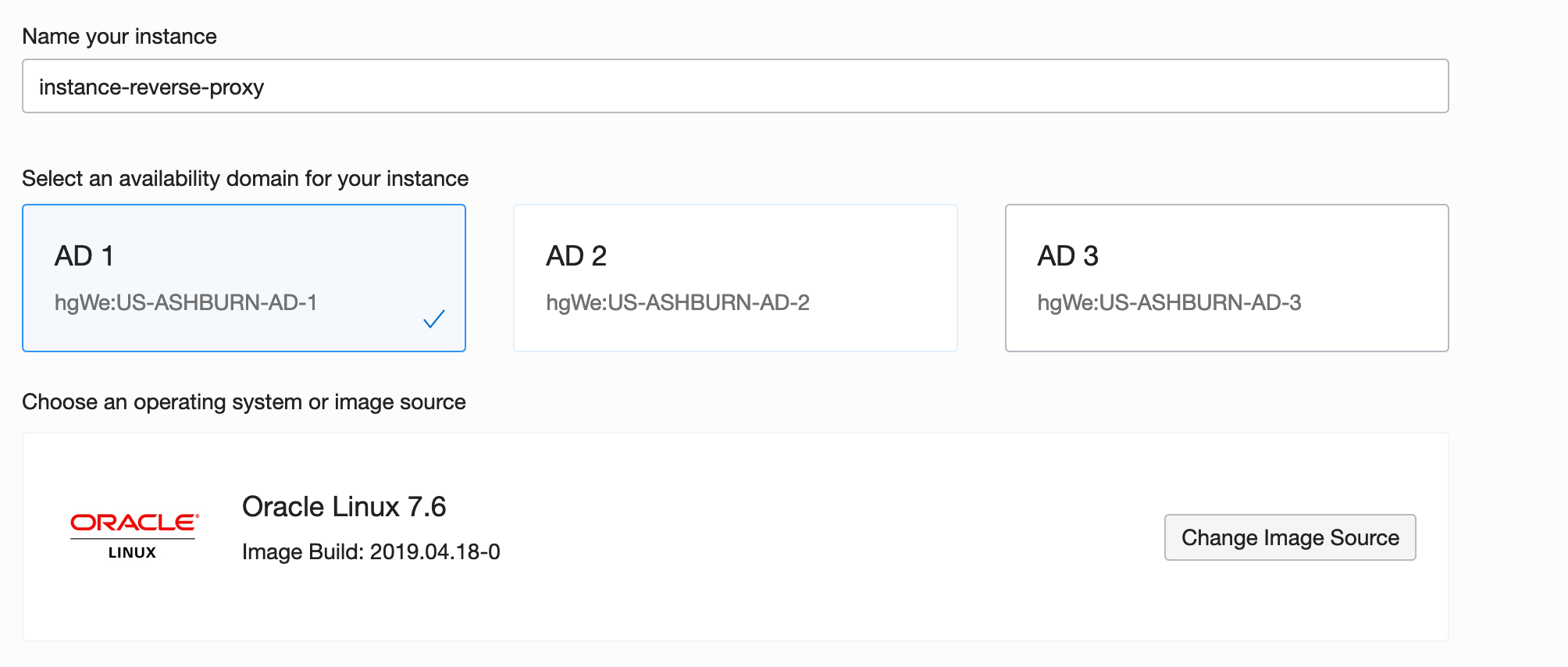

To demonstrate this functionality, this tutorial combines the following stack in a classic (non-docker) way, however most of the concepts could be useful for Docker deployments:

- Payara 5 as application server

- NGINX as reverse proxy

- Let's encrypt SSL certificates

It is assumed that a clean Oracle Linux 7.x (7.6) box will be used during this tutorial and tests will be executed over Oracle Cloud with root user.

Preparing the OS

Since Oracle Linux is binary compatible with RHEL, EPEL repository will be added to get access to Let's Encrypt. It is also useful to update the OS as a previous step:

yum -y update

yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

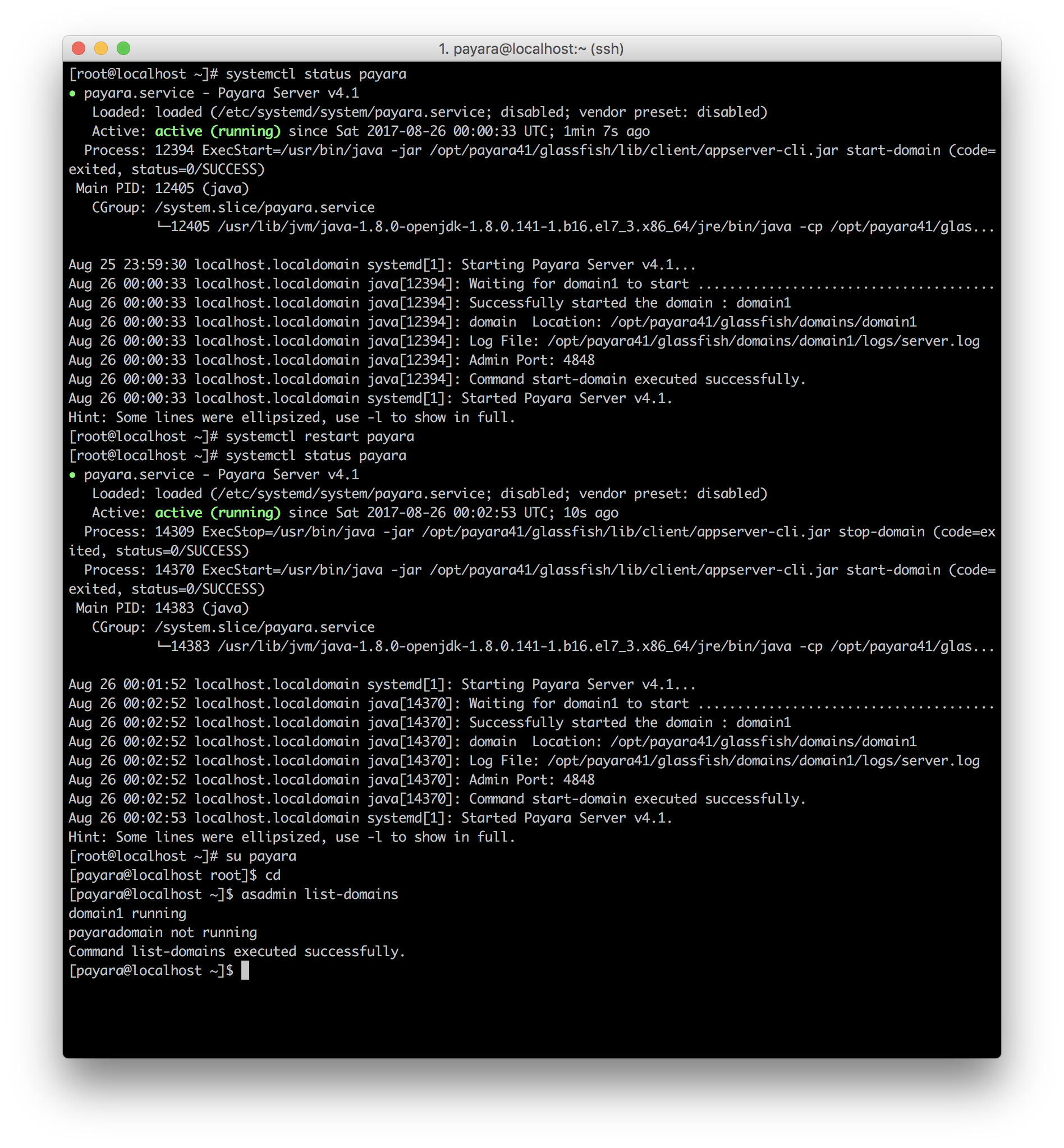

Setting up Payara 5

In order to install Payara application server a couple of dependencies will be needed, specially a Java Developer Kit. For instance OpenJDK is included at Oracle Linux repositories.

yum -y install java-1.8.0-openjdk-headless

yum -y install wget

yum -y install unzip

Once all dependencies are installed, it is time to download, unzip and install Payara. It will be located at /opt following standard Linux conventions for external packages:

cd /opt

wget -O payara-5.191.zip https://search.maven.org/remotecontent?filepath=fish/payara/distributions/payara/5.191/payara-5.191.zip

unzip payara-5.191.zip

rm payara-5.191.zip

It is also useful to create a payara user for administrative purposes, to administrate the domain(s) or to run Payara as Linux service with systemd:

adduser payara

chown -R payara:payara payara5

echo 'export PATH=$PATH:/opt/payara5/glassfish/bin' >> /home/payara/.bashrc

chown payara:payara /home/payara/.bashrc

A systemd unit is also needed:

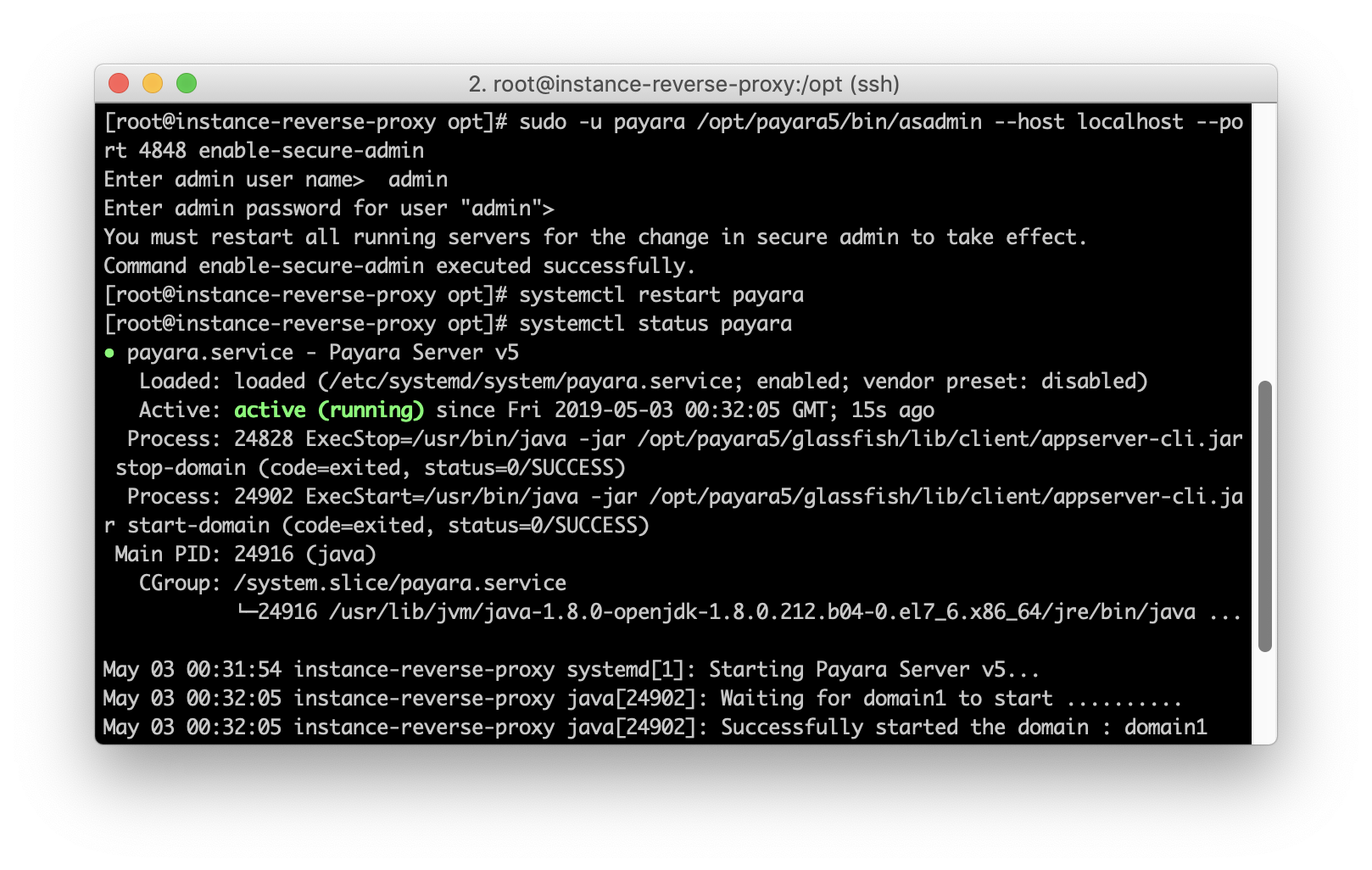

echo '[Unit]

Description = Payara Server v5

After = syslog.target network.target

[Service]

User=payara

ExecStart = /usr/bin/java -jar /opt/payara5/glassfish/lib/client/appserver-cli.jar start-domain